An Analysis of Feedback Protocols for Aligning LLMs

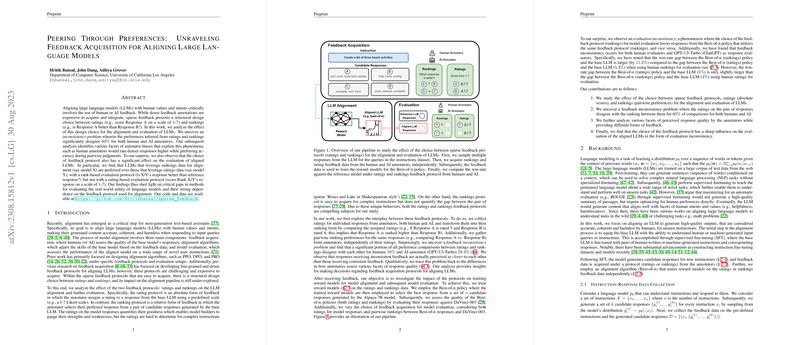

The research paper "Peering Through Preferences: Unraveling Feedback Acquisition for Aligning LLMs" examines two feedback protocols, ratings and rankings, used to align LLMs with human values and intents. Its importance lies in the observation that effective alignment depends on the feedback mechanism, which shapes whether models respond to human queries in relevant, accurate, and non-harmful ways. By comparing ratings with rankings, the paper shows how the choice of feedback protocol influences both the alignment process and the subsequent evaluation of LLMs.

Inconsistency Problems and Annotator Bias

The core finding of the paper concerns the inconsistency between feedback obtained from ratings and feedback obtained from rankings: the preferences implied by the two protocols disagree in 60% of comparisons, for both human and AI annotators. This disagreement poses a substantial challenge for aligning LLMs, because each protocol taps into different aspects of human judgment and therefore affects subsequent model evaluations. Annotator biases complicate the picture further. The paper highlights, for example, that annotators tend to rate denser responses more favorably when judging them in isolation, yet prefer accuracy when making comparative judgments. As a result, the same responses can receive different verdicts depending on whether they are evaluated independently or in a pairwise context.

Feedback Protocol Choice and Model Evaluation

The influence of protocol choice shows up directly in evaluation: models aligned with rankings data are preferred when the evaluation is also rank-based, which the paper describes as an evaluation inconsistency. In other words, which model appears better can depend largely on whether the feedback protocol used for alignment matches the protocol used for evaluation. Specifically, models aligned with rankings feedback achieve a higher win-rate against reference responses than models aligned with ratings feedback when the evaluation is rank-based, but this advantage does not hold under rating-based evaluation. This makes it difficult to build evaluation standards that hold across protocols.
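To make the win-rate comparison concrete, the following is a minimal sketch of how a win-rate against reference responses could be computed under each evaluation protocol. The function names, the outcome labels, the half-credit treatment of ties, and the toy data are all assumptions made for illustration; they are not taken from the paper and do not reproduce its results.

```python
def rating_to_outcome(model_score: int, reference_score: int) -> str:
    """Rating-based evaluation: each response is scored on its own,
    and the outcome is derived by comparing the two scores."""
    if model_score > reference_score:
        return "model"
    if model_score < reference_score:
        return "reference"
    return "tie"


def win_rate(outcomes: list[str]) -> float:
    """Share of comparisons won by the model.

    Assumption: a tie counts as half a win. Other conventions
    (for example, dropping ties) are equally plausible.
    """
    if not outcomes:
        raise ValueError("need at least one comparison")
    credit = {"model": 1.0, "tie": 0.5, "reference": 0.0}
    return sum(credit[o] for o in outcomes) / len(outcomes)


# Toy data, invented for illustration only.
# Rank-based evaluation: the annotator directly picks the better response.
ranking_outcomes = ["model", "model", "reference", "model"]
# Rating-based evaluation: (model score, reference score) per prompt.
rating_pairs = [(5, 5), (4, 5), (6, 4), (3, 3)]
rating_outcomes = [rating_to_outcome(m, r) for m, r in rating_pairs]

print("rank-based win-rate:  ", win_rate(ranking_outcomes))
print("rating-based win-rate:", win_rate(rating_outcomes))
```

In this toy setup the same model looks stronger under the rank-based protocol than under the rating-based one, which is the kind of protocol-dependent gap the paragraph above describes.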

Methodological Insights and Implications

This paper offers several insights into feedback acquisition protocols and emphasizes how strongly protocol choice affects model alignment. Among the methods employed, the juxtaposition of two sparse feedback protocols (absolute point ratings assigned to individual responses versus relative rankings between responses) sheds light on how differently these protocols judge the same LLM outputs. The paper's approach of translating ratings into a ranking format, so that the two protocols can be compared directly, illustrates an original methodology for understanding the implications of protocol selection.
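The idea of translating ratings into a ranking format can be sketched as follows: two independent ratings for a pair of responses are compared to yield an implied preference, which is then checked against the preference given directly under the rankings protocol. The label set ("A", "B", "equal"), the function names, and the toy data are assumptions for illustration, not the paper's implementation or data.

```python
def ratings_to_preference(score_a: int, score_b: int) -> str:
    """Convert two independent ratings into an implied pairwise preference."""
    if score_a > score_b:
        return "A"
    if score_b > score_a:
        return "B"
    return "equal"


def disagreement_rate(
    rating_pairs: list[tuple[int, int]], ranking_labels: list[str]
) -> float:
    """Fraction of pairs where the rating-implied preference differs
    from the preference collected directly as a ranking."""
    if len(rating_pairs) != len(ranking_labels):
        raise ValueError("each rated pair needs exactly one ranking label")
    if not rating_pairs:
        raise ValueError("need at least one comparison")
    disagreements = sum(
        ratings_to_preference(a, b) != label
        for (a, b), label in zip(rating_pairs, ranking_labels)
    )
    return disagreements / len(rating_pairs)


# Toy data, invented for illustration only.
rating_pairs = [(5, 3), (4, 4), (2, 6), (7, 5), (3, 3)]
ranking_labels = ["A", "B", "A", "A", "equal"]

print(disagreement_rate(rating_pairs, ranking_labels))
```

Treating equal ratings as an explicit "equal" preference is one design choice among several; discarding such pairs before computing the rate would give a different number.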

Practically, the identified inconsistency points to a need for more refined, potentially hybrid feedback protocols that can bridge the gap between ratings-based and rankings-based judgments. The findings suggest that those developing LLMs should be cautious about how feedback is collected and interpreted, since the choice affects both model alignment and perceived model quality.

Future Directions

These findings point toward more holistic AI evaluation strategies. As AI systems are increasingly deployed in diverse settings, it becomes important to establish standardized, robust evaluation methodologies that account more fully for human factors such as annotator bias. Future research could integrate richer forms of feedback, combining scalar and ordinal measures with qualitative and context-sensitive data, to evaluate model performance from several angles. It is equally important to develop training and evaluation frameworks whose conclusions remain consistent across protocol variations, so that a model's measured quality does not depend on how the feedback was acquired.

In summary, this paper provides a valuable contribution to the understanding of how feedback protocols affect LLM alignment and evaluation, revealing the intricacies of feedback-induced model behavior and the resultant challenges in establishing objective evaluation metrics.