Towards a Unified View of Preference Learning for LLMs: A Survey

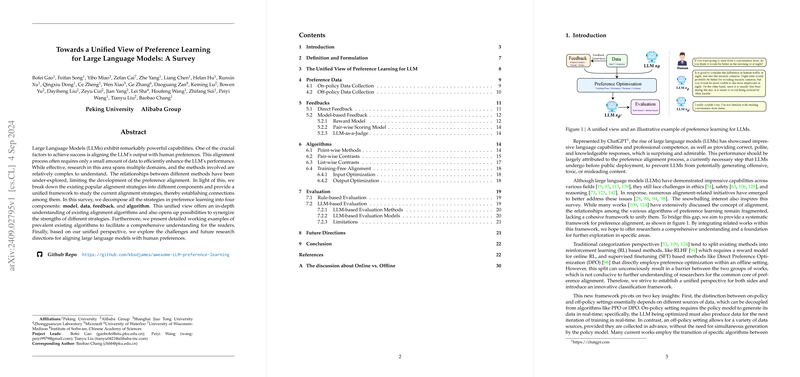

The paper "Towards a Unified View of Preference Learning for LLMs: A Survey" provides an extensive survey encompassing key methodologies and concepts in the domain of preference learning, specifically for aligning the outputs of LLMs with human preferences. This alignment is critical for ensuring that the responses generated by LLMs are useful, safe, and acceptable to users.

Overview and Structure

The authors argue that the existing strategies for preference learning are fragmented and lack a cohesive framework that connects various methodologies. This survey aims to bridge that gap by presenting a unified framework that breaks down the preference learning methods into four core components: Model, Data, Feedback, and Algorithm. The paper is structured as follows:

- Introduction: An overview of the importance of preference alignment in LLMs and the motivation for this survey.

- Definition and Formulation: Formal definitions and mathematical formulations of preference learning are provided.

- Unified View of Preference Learning: Introduction of a cohesive framework that categorizes the elements of preference learning.

- Preference Data: Detailed discussion on data collection methods, both on-policy and off-policy.

- Feedback: Examination of various kinds of feedback mechanisms, including direct and model-based feedback.

- Algorithms: Categorization and explanation of different optimization algorithms, including point-wise, pair-wise, list-wise, and training-free methods.

- Evaluation: Overview of evaluation strategies, including rule-based and LLM-based methods.

- Future Directions: Speculation on future advancements in the field.

Key Contributions

Data Collection

The paper highlights two main strategies for data collection:

- On-Policy Data Collection: This involves generating data samples in real-time from the policy LLM and obtaining rewards interactively. Techniques such as Top-K/Nucleus Sampling and Beam Search are discussed, and Monte Carlo Tree Search (MCTS) is presented as a method to generate diverse and high-quality data.

- Off-Policy Data Collection: In contrast, off-policy data collection gathers data independently of the current policy. The authors list several seminal datasets like WebGPT, OpenAI's Human Preferences, HH-RLHF, and SHP, which are commonly used in off-policy settings.

Feedback Mechanisms

Feedback plays a critical role in preference learning, and the paper categorizes it into:

- Direct Feedback: This includes human annotations, heuristic rules, and metrics for specific tasks such as mathematical reasoning, machine translation, and code generation.

- Model-based Feedback: This involves training a reward model or using pair-wise scoring models. Notably, LLM-as-a-Judge approaches are also presented, where an LLM itself evaluates the generated outputs.

Algorithms

The paper provides an in-depth discussion on various optimization algorithms:

- Point-Wise Methods: Methods that optimize based on single data points, such as Rejection Sampling Fine-tuning (RAFT, Star), and Proximal Policy Optimization (PPO).

- Pair-Wise Contrasts: Methods that involve comparing pairs of samples to derive preferences, including Direct Preference Optimization (DPO), Identity Preference Optimization (IPO), and other DPO-like methods.

- List-Wise Contrasts: Extending pair-wise approaches to consider entire lists of preferences, exemplified by RRHF and Preference Ranking Optimization (PRO).

- Training-Free Methods: Techniques that optimize model performance without training, focusing either on input optimization (augmenting prompts) or output optimization (manipulating generated text distribution).

Evaluation

The evaluation of preference learning strategies is divided into:

- Rule-Based Evaluation: Utilizing standard metrics for tasks with ground-truth outputs, although these often fail to capture the nuances of open-ended tasks.

- LLM-Based Evaluation: Employing LLMs like GPT-4 as evaluators for tasks to provide a cost-effective alternative to human judgments. This method, while promising, suffers from biases and requires further refinement to ensure reliability.

Implications and Future Directions

The unified framework presented aims to synergize the strengths of various alignment strategies and provides several future research directions:

- Higher Quality and Diverse Data: Techniques to generate or synthesize high-quality preference data are pivotal.

- Reliable Feedback Mechanisms: Extending reliable feedback methods across diverse domains is crucial.

- Advanced Algorithms: Developing algorithms that are more robust, efficient, and capable of scaling up is essential.

- Comprehensive Evaluations: Enhancing evaluation methods to be more comprehensive, reliable, and unbiased is imperative.

Conclusion

This survey provides a well-structured and deeply insightful overview of the current landscape of preference learning for LLMs. By unifying various methods under a cohesive framework, it facilitates better understanding and future research in the field. The systematic approach to dissecting and categorizing existing strategies will serve as an invaluable resource for researchers aiming to advance the alignment of LLMs with human preferences.