An Analysis of the "When Do Program-of-Thoughts Work for Reasoning?" Paper

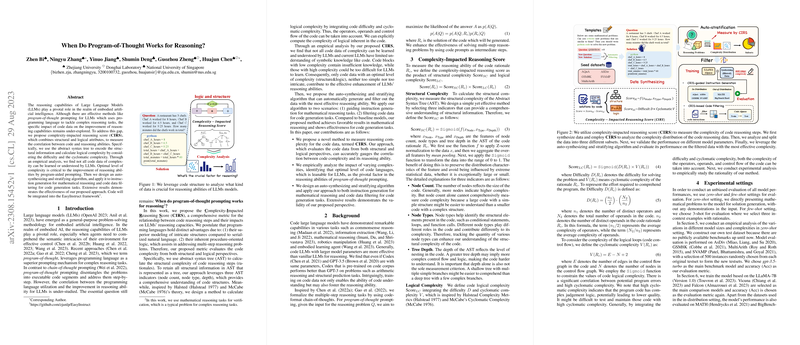

This paper investigates the reasoning capabilities of large language models (LLMs) in the context of embodied artificial intelligence, specifically through the lens of program-of-thought prompting. The authors address an under-explored question: how code data affects the reasoning capabilities of LLMs. To answer it, they introduce the Complexity-Impacted Reasoning Score (CIRS), a metric that combines structural and logical attributes of code to measure the correlation between code and reasoning abilities.

Key Contributions

The primary contribution of this work is the Complexity-Impacted Reasoning Score. The metric evaluates code reasoning steps along two axes: structural complexity, encoded from the abstract syntax tree (AST) of the code, and logical complexity, computed from Halstead's difficulty measure and McCabe's cyclomatic complexity. Combining the two makes it possible to ask which levels of code complexity are most beneficial for reasoning tasks in LLMs.
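To make the two axes concrete, the following is a minimal sketch of a CIRS-style score, not the authors' implementation. Several choices are assumptions made for illustration: the three AST features (node count, distinct node types, depth), the z-score normalization across a corpus, the sigmoid squashing, the product of the structural and logical parts, and the way operators and operands are approximated from the AST. All function names are hypothetical.

```python
import ast
import math
import statistics


def structural_features(source: str) -> tuple[int, int, int]:
    """Return (node count, distinct node types, maximum depth) of the AST."""
    tree = ast.parse(source)

    def depth(node: ast.AST) -> int:
        children = list(ast.iter_child_nodes(node))
        return 1 + max((depth(c) for c in children), default=0)

    nodes = list(ast.walk(tree))
    return len(nodes), len({type(n).__name__ for n in nodes}), depth(tree)


def cyclomatic_complexity(source: str) -> int:
    """McCabe-style count: 1 plus the number of decision points."""
    decisions = (ast.If, ast.For, ast.While, ast.IfExp,
                 ast.ExceptHandler, ast.comprehension)
    score = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, decisions):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra branches
            score += len(node.values) - 1
    return score


def halstead_difficulty(source: str) -> float:
    """Halstead difficulty D = (n1 / 2) * (N2 / n2), approximated from the AST."""
    operators, operands = [], []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.operator, ast.unaryop, ast.cmpop, ast.boolop)):
            operators.append(type(node).__name__)
        elif isinstance(node, ast.Name):
            operands.append(node.id)
        elif isinstance(node, ast.Constant):
            operands.append(repr(node.value))
    n1, n2, total_operands = len(set(operators)), len(set(operands)), len(operands)
    if n2 == 0:
        return 0.0
    return (n1 / 2) * (total_operands / n2)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def complexity_scores(programs: list[str]) -> list[float]:
    """Score each program as structural part * logical part (assumed combination)."""
    feats = [structural_features(p) for p in programs]
    columns = list(zip(*feats))
    means = [statistics.fmean(c) for c in columns]
    # Fall back to 1.0 when a feature is constant, to avoid division by zero.
    stds = [statistics.pstdev(c) or 1.0 for c in columns]
    scores = []
    for program, feat in zip(programs, feats):
        z = [(v - m) / s for v, m, s in zip(feat, means, stds)]
        structural = sigmoid(statistics.fmean(z))
        logical = sigmoid(halstead_difficulty(program) * cyclomatic_complexity(program))
        scores.append(structural * logical)
    return scores
```

Because the structural part is normalized across the corpus, a score from this sketch is only meaningful relative to the other programs scored in the same call.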

Additionally, the authors propose an auto-synthesizing and stratifying algorithm and apply it in two settings: instruction generation for mathematical reasoning, and code data filtering for code generation tasks. Partitioning the data by complexity lets them study the impact of code complexity on LLM performance systematically.
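The stratifying step can be pictured as sorting samples by their complexity score and splitting them into ordered groups. The sketch below uses equal-frequency bins, which is an assumption for illustration; this summary does not state the paper's exact partitioning rule, and the `stratify` helper and its parameters are hypothetical.

```python
def stratify(samples: list[str], scores: list[float], num_bins: int = 3) -> list[list[str]]:
    """Split samples into equal-frequency bins ordered from lowest to highest score."""
    if len(samples) != len(scores):
        raise ValueError("samples and scores must have the same length")
    # Indices of the samples, ordered by ascending complexity score.
    order = sorted(range(len(samples)), key=lambda i: scores[i])
    bins: list[list[str]] = [[] for _ in range(num_bins)]
    for rank, idx in enumerate(order):
        bins[rank * num_bins // len(samples)].append(samples[idx])
    return bins


# Usage: with three bins, the middle bin holds the mid-complexity samples.
low, mid, high = stratify(
    ["a", "b", "c", "d", "e", "f"],
    [0.9, 0.1, 0.5, 0.3, 0.7, 0.2],
)
```

Training or filtering on one bin at a time is one way to compare how each complexity level affects downstream reasoning performance.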

Empirical Analysis

The paper presents an empirical analysis of how different levels of code complexity affect reasoning abilities. The findings show that LLMs do not learn equally well from all complexities of code data. Code with an optimal level of complexity, neither too simple nor too complex, yields the most effective improvement in LLM reasoning capabilities.

The empirical results suggest that as model parameters grow, LLMs exhibit improved reasoning abilities, aligning with current understandings of LLM proficiency. However, current LLM architectures encounter limitations when reasoning about complex symbolic knowledge, illustrating an area for potential future exploration in model design.

Implications and Future Directions

The implications of this paper are twofold. Practically, it offers a methodology to enhance the reasoning skills of LLMs through well-curated code data, enabling more effective program-of-thought prompting methods. Theoretically, it provides insights into the relationship between code complexity and reasoning ability, suggesting that future developments in AI might benefit from exploring architectures that natively understand complex, structured data more effectively.

This research opens avenues for further exploration in the design and application of LLMs, particularly in environments requiring intricate reasoning capabilities. Future work could extend these findings to other domains such as commonsense reasoning, integrating advanced model architectures or leveraging external tools to support complex reasoning tasks.

In conclusion, this paper contributes a detailed framework for understanding and optimizing the reasoning capabilities of LLMs through program-of-thought prompting. Its findings offer valuable insights into the importance of data complexity in model training, guiding future research towards enhanced reasoning methodologies in artificial intelligence.