Knowledge Graph Prompting for Multi-Document Question Answering

The paper "Knowledge Graph Prompting for Multi-Document Question Answering" proposes using knowledge graphs (KGs) to improve how large language models (LLMs) handle multi-document question answering (MD-QA). The "pre-train, prompt, predict" paradigm has made significant strides in tasks such as open-domain question answering (OD-QA), but it has seen limited application to MD-QA. MD-QA is inherently harder: it requires understanding the logical relations across documents as well as the varied structures within them. The paper addresses these challenges with a method called Knowledge Graph Prompting (KGP).

Methodology

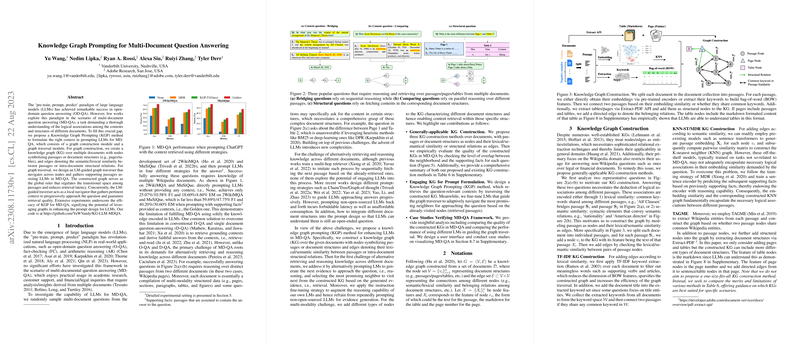

The KGP method consists of two main components: graph construction and graph traversal.

- Graph Construction: A knowledge graph is built over the documents, with passages or document structures as nodes and semantic or lexical similarity between them as edges. The construction techniques include:

  - TF-IDF: Connecting passages that share common keywords.

  - KNN-ST/MDR: Connecting passages whose embeddings are semantically similar, using a sentence transformer (KNN-ST) or an MDR encoder (KNN-MDR).

  - TAGME: Connecting passages that mention the same Wikipedia entities.

- Graph Traversal: An LLM-based graph traversal agent is designed to navigate these graphs efficiently, selecting nodes that bring the system closer to answering the question. This agent benefits from instruction fine-tuning, which enhances its reasoning capabilities.
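The two components above can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes TF-IDF cosine similarity for the edges, and it replaces the LLM-based traversal agent with a simple lexical scorer that ranks neighbors against the question plus the passages collected so far. All function names (`fit_idf`, `vectorize`, `build_graph`, `traverse`) and the example passages are hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def fit_idf(passages):
    """Smoothed inverse document frequency over the passage collection."""
    n = len(passages)
    df = Counter(t for p in passages for t in set(tokenize(p)))
    return {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}


def vectorize(text, idf):
    """Sparse TF-IDF vector; terms unseen in the collection are dropped."""
    counts = Counter(tokenize(text))
    return {t: tf * idf[t] for t, tf in counts.items() if t in idf}


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def build_graph(vectors, k=2):
    """Link each passage to its k most similar passages (undirected edges)."""
    graph = {i: set() for i in range(len(vectors))}
    for i, vi in enumerate(vectors):
        scored = sorted(
            ((cosine(vi, vj), j) for j, vj in enumerate(vectors) if j != i),
            reverse=True,
        )
        for score, j in scored[:k]:
            if score > 0:
                graph[i].add(j)
                graph[j].add(i)
    return graph


def traverse(question, passages, vectors, graph, idf, hops=2):
    """Greedy walk: seed on the best-matching passage, then follow edges."""
    q = vectorize(question, idf)
    current = max(range(len(passages)), key=lambda i: cosine(q, vectors[i]))
    path = [current]
    for _ in range(hops):
        candidates = [j for j in sorted(graph[current]) if j not in path]
        if not candidates:
            break
        # Assumption: a lexical stand-in for the LLM agent. Neighbors are
        # scored against the question plus the passages gathered so far.
        context = question + " " + " ".join(passages[i] for i in path)
        ctx = vectorize(context, idf)
        current = max(candidates, key=lambda j: cosine(ctx, vectors[j]))
        path.append(current)
    return path


# Hypothetical toy collection for illustration only.
passages = [
    "Marie Curie was born in Warsaw and won the Nobel Prize in Physics.",
    "Warsaw is the capital city of Poland.",
    "The Nobel Prize in Physics is awarded by the Royal Swedish Academy of Sciences.",
    "Bananas are rich in potassium.",
]
idf = fit_idf(passages)
vectors = [vectorize(p, idf) for p in passages]
graph = build_graph(vectors, k=2)
path = traverse("In which country was Marie Curie born?", passages, vectors, graph, idf)
print([passages[i] for i in path])
```

The walk only ever moves along edges of the graph, so the passages it collects are constrained by how the graph was built. In a setup closer to the one described above, the lexical scorer inside `traverse` would be replaced by a call to an instruction-fine-tuned LLM.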

Performance and Evaluation

Experiments are conducted on datasets including HotpotQA, IIRC, 2WikiMQA, and MuSiQue. The results show that KGP improves MD-QA performance over several retrieval baselines, including TF-IDF, BM25, DPR, and MDR. The improvements are most pronounced on datasets that require multi-hop reasoning, which supports the claim that KGP integrates context from multiple passages effectively.

Key Findings

- Graph Quality: The construction of a high-quality knowledge graph is critical, with the performance heavily dependent on the information encoded within nodes and edges.

- Traversal Strategy: Instruction fine-tuning significantly enhances the reasoning of the LLM-based traversal agent, which outperforms traditional retrieval methods.

- Scalability: While effective, the approach’s scalability is limited by the current graph construction methodologies, especially when extended to large document collections.

Implications and Future Directions

Integrating knowledge graphs into LLM-based QA systems opens new avenues for handling complex, multi-document scenarios efficiently. The findings suggest room to refine graph construction techniques further, particularly for domains beyond the standard Wikipedia-based datasets. Future work may focus on improving the scalability of graph construction and on developing more sophisticated ways to capture document structure.

Overall, the paper highlights the promising role of knowledge graphs in MD-QA, offering a structured way to supply both document content and document structure when prompting LLMs. As LLMs evolve, the proposed method could inspire further work on retrieval-augmented generation and prompt design for multi-source information.