An Overview of UniVTG: A Unified Framework for Video-Language Temporal Grounding

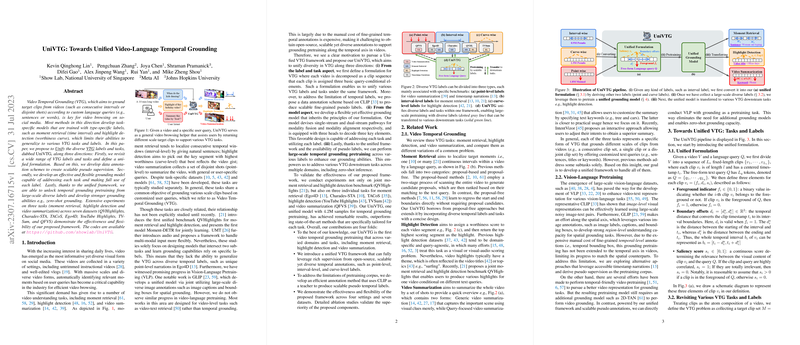

The paper introduces UniVTG, a framework that unifies Video Temporal Grounding (VTG) tasks drawn from diverse benchmarks with different label types. The motivation is the rapid growth of video sharing on platforms such as social media, which calls for efficient systems that let users browse videos according to natural-language queries. Rather than relying on a separate task-specific model for each setting, UniVTG defines a single VTG formulation that accommodates multiple tasks and label formats, and it exploits scalable pseudo-supervision derived from pretraining data.

Key Components and Methodology

UniVTG is built on three main components:

- Unified Formulation: The paper recasts VTG tasks in a single formulation. Each video is divided into clips, and each clip is assigned three core elements: a foreground indicator, which marks whether the clip falls inside the queried moment; boundary offsets, the distances from the clip's timestamp to the start and end of that moment; and a saliency score, which measures how relevant the clip is to the query. Because every VTG label type and task can be expressed through these elements, a single approach covers them. In addition, a label scaling technique uses CLIP to generate pseudo labels, reducing the need for expensive manual annotation.

- Flexible Model Design: UniVTG proposes a grounding model that combines single-stream and dual-stream architectures for effective multi-modal fusion. The model is structured to decode the three core elements, so the same model supports many VTG tasks. The design builds on recent advances in vision-language pretraining, which strengthens its temporal grounding and enables zero-shot grounding without additional task-specific models.

- Pretraining Across Diverse Labels: Because all labels share the unified formulation, UniVTG can run large-scale temporal grounding pretraining on heterogeneous annotations: point-level labels (a single timestamp), interval-level labels (a start and an end time), and curve-level labels (a score for every clip). The resulting grounding ability transfers well to multiple downstream tasks and domains, including moment retrieval, highlight detection, and video summarization.
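
To make the unified formulation more concrete, here is a minimal Python sketch of how interval and curve labels could be mapped onto the three per-clip elements, and how a moment could be decoded back from them as [t_i - d_s, t_i + d_e]. It is not the authors' implementation. The fixed clip length, the use of clip centres as timestamps, the saliency default for interval labels, the threshold that turns a curve into foreground clips, and all names (`ClipLabels`, `from_interval`, `from_curve`, `decode`) are hypothetical. Point labels, which carry only a single timestamp, would first need an expansion step such as the CLIP-based label scaling described above; the sketch does not attempt that.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ClipLabels:
    """Hypothetical container for the three per-clip elements."""
    foreground: List[int]                 # f_i in {0, 1}
    offsets: List[Tuple[float, float]]    # d_i = (t_i - start, end - t_i)
    saliency: List[float]                 # s_i in [0, 1]


def clip_timestamps(num_clips: int, clip_len: float) -> List[float]:
    # Assumption: t_i is the centre of clip i, with clips of equal length.
    return [(i + 0.5) * clip_len for i in range(num_clips)]


def from_interval(start: float, end: float, num_clips: int, clip_len: float) -> ClipLabels:
    """Interval label (e.g. a moment-retrieval annotation) -> per-clip labels."""
    fg, offs, sal = [], [], []
    for t in clip_timestamps(num_clips, clip_len):
        inside = start <= t <= end
        fg.append(int(inside))
        offs.append((t - start, end - t) if inside else (0.0, 0.0))
        # Assumption: with no saliency annotation, foreground clips get 1.0.
        sal.append(1.0 if inside else 0.0)
    return ClipLabels(fg, offs, sal)


def from_curve(scores: List[float], threshold: float, clip_len: float) -> ClipLabels:
    """Curve label (one score per clip) -> per-clip labels.

    Assumption: clips scoring at least `threshold` are foreground, and their
    offsets point to the edges of the contiguous foreground run containing them.
    """
    n = len(scores)
    fg = [int(s >= threshold) for s in scores]
    ts = clip_timestamps(n, clip_len)
    offs = [(0.0, 0.0)] * n
    i = 0
    while i < n:
        if not fg[i]:
            i += 1
            continue
        j = i
        while j + 1 < n and fg[j + 1]:
            j += 1
        start, end = i * clip_len, (j + 1) * clip_len  # edges of the run
        for k in range(i, j + 1):
            offs[k] = (ts[k] - start, end - ts[k])
        i = j + 1
    return ClipLabels(fg, offs, list(scores))


def decode(labels: ClipLabels, clip_len: float) -> Tuple[float, float]:
    """Rebuild a moment from the most salient foreground clip."""
    ts = clip_timestamps(len(labels.foreground), clip_len)
    candidates = [i for i, f in enumerate(labels.foreground) if f]
    if not candidates:
        raise ValueError("no foreground clip to decode")
    best = max(candidates, key=lambda i: labels.saliency[i])
    d_s, d_e = labels.offsets[best]
    return ts[best] - d_s, ts[best] + d_e
```

For example, a 16-second video split into eight 2-second clips with the moment [4, 10] marks clips 2 to 4 as foreground; decoding from clip 2 (timestamp 5.0, offsets (1.0, 5.0)) returns (4.0, 10.0). In this sketch every foreground clip stores enough information to rebuild the whole interval, so interval outputs and saliency outputs come from the same per-clip targets.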

Experimental Insights

Comprehensive experiments demonstrate UniVTG's flexibility and effectiveness on seven diverse datasets: QVHighlights, Charades-STA, TACoS, Ego4D, YouTube Highlights, TVSum, and QFVS. The framework achieves strong results, often surpassing task-specific state-of-the-art methods. Notably, large-scale pretraining significantly improves performance on all tested benchmarks, raising retrieval recall and mean average precision, and the pretrained model also shows strong zero-shot grounding. Together, these results suggest that a single unified framework can handle diverse VTG challenges without relying heavily on manual, task-specific labels.
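
For moment retrieval, retrieval recall is commonly reported as Recall@1 at a temporal IoU threshold (for example R1@0.5 and R1@0.7): a query counts as a hit when its top-ranked predicted moment overlaps the ground-truth interval with IoU at or above the threshold. The following is a minimal sketch of that standard metric, assuming one ground-truth moment per query; the function names are our own, and each benchmark's exact protocol (thresholds, multiple ground-truth moments, mAP variants) is not reproduced here.

```python
from typing import Sequence, Tuple

Moment = Tuple[float, float]  # (start, end) in seconds


def temporal_iou(pred: Moment, gt: Moment) -> float:
    """Intersection over union of two [start, end] intervals."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    # When the intervals overlap, their span equals their union; when they
    # do not, inter is 0, so the IoU is 0 either way.
    union = max(pred[1], gt[1]) - min(pred[0], gt[0])
    return inter / union if union > 0 else 0.0


def recall_at_1(preds: Sequence[Moment], gts: Sequence[Moment], iou_thr: float) -> float:
    """Fraction of queries whose top-1 predicted moment reaches `iou_thr` IoU."""
    if not gts or len(preds) != len(gts):
        raise ValueError("need one top-1 prediction per ground-truth moment")
    hits = sum(temporal_iou(p, g) >= iou_thr for p, g in zip(preds, gts))
    return hits / len(gts)
```

With the toy predictions [(4, 10), (0, 6), (20, 30)] against ground truths [(4, 10), (2, 8), (0, 5)], the IoUs are 1.0, 0.5 and 0.0, so R1@0.5 is 2/3 and R1@0.7 is 1/3.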

Implications and Future Directions

The implications of UniVTG are twofold. Practically, it promises more efficient and accurate video moment retrieval, highlight detection, and summarization, which can improve the user experience on video-heavy platforms. Theoretically, it opens the way to further work on unified frameworks that scale and adapt across tasks previously treated as disjoint. Future research could refine pseudo-labeling methods to further reduce the need for expensive annotations, and extend the range of VTG tasks to more complex query types.

In conclusion, the paper makes a substantive contribution by proposing a comprehensive, scalable method that unifies disparate VTG tasks and labeling schemes, supported by robust pretraining techniques. This work opens avenues for broader applications and future research in the field of video-language understanding.