Chain-of-Thought Prompt Distillation for Multimodal Named Entity Recognition and Multimodal Relation Extraction

The paper "Chain-of-Thought Prompt Distillation for Multimodal Named Entity Recognition and Multimodal Relation Extraction" by Feng Chen and Yujian Feng proposes a method for distilling the reasoning capabilities of large language models (LLMs) into a more compact student model for two tasks: Multimodal Named Entity Recognition (MNER) and Multimodal Relation Extraction (MRE).

Introduction and Background

Multimodal Named Entity Recognition (MNER) and Multimodal Relation Extraction (MRE) combine text and visual inputs to identify entities and the relations between them. Earlier methods in this domain have relied on retrieval augmentation from knowledge sources such as Wikipedia to provide auxiliary context. These retrieval-based methods have limitations: the retrieved knowledge can be inconsistent with the current domain, and it is difficult to use for interpreting image-text pairs. This motivates techniques that deliver more accurate and contextually consistent knowledge.

Contributions

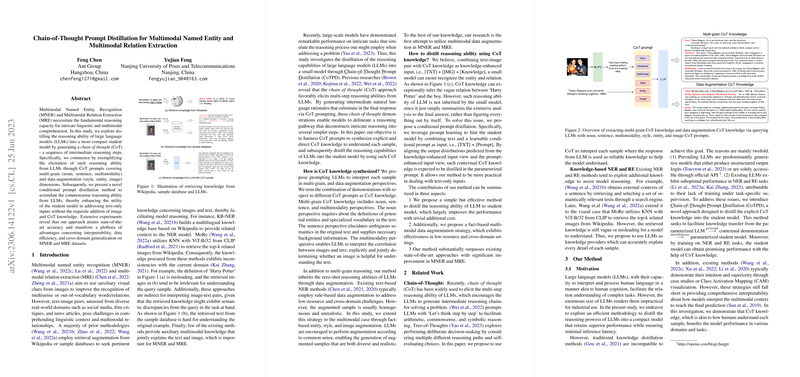

The paper introduces a Chain-of-Thought (CoT) Prompt Distillation (CoTPD) approach, which focuses on the following key aspects:

- Chain-of-Thought (CoT) Prompts: These are designed to elicit multi-step reasoning capabilities from LLMs through intermediate natural language rationales.

- Multi-Grain and Data-Augmentation Perspectives: The methodology uses different CoT prompts that cover multi-grain perspectives (noun, sentence, and multimodality) and data-augmentation perspectives (style, entity, and image).

- Conditional Prompt Distillation: This method transfers the commonsense reasoning abilities of LLMs into a student model through learnable prompts aligned with the original task requirements.

- Enhanced Interpretability and Generalization: The CoTPD method aims not only to provide state-of-the-art performance but also to enhance interpretability, data efficiency, and cross-domain generalization.

Methodology

CoT Prompts for LLMs

The paper designs CoT prompts that ask an LLM to generate the explanations needed to understand complex multimodal data. Specifically:

- Noun Perspective: Prompts to explain the meaning of specialized words and entities.

- Sentence Perspective: Prompts to provide background and context for sentences.

- Multimodality Perspective: Prompts to clarify the relationship between the text and its associated images.

- Data Augmentation Prompts: These prompts are used for creating varied yet realistic augmented training datasets supporting different linguistic styles and entities.
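The perspectives above can be pictured as a small set of prompt templates filled in for each image-text pair. The following is a minimal sketch only: the template wording, the `COT_TEMPLATES` name, the `build_cot_prompts` helper, and the use of an image caption as a text stand-in for the image are all assumptions made for illustration, not the prompts used in the paper. Image augmentation is omitted because the summary does not describe how it is prompted.

```python
# Hypothetical prompt templates; the wording is illustrative, not the paper's.
COT_TEMPLATES = {
    "noun": "Explain the specialized words and potential entities in this sentence: {text}",
    "sentence": "Give the background and context needed to understand this sentence: {text}",
    "multimodality": (
        "The sentence is: {text}\n"
        "The attached image shows: {caption}\n"
        "Explain how the image relates to the sentence."
    ),
    "style": "Rewrite this sentence in a different linguistic style, keeping all entities: {text}",
    "entity": "Rewrite this sentence, replacing each entity with another of the same type: {text}",
}


def build_cot_prompts(text, caption):
    """Return one filled-in prompt per perspective for a single image-text pair."""
    fields = {"text": text, "caption": caption}
    # str.format ignores keyword arguments a template does not use,
    # so text-only templates simply skip the caption.
    return {name: template.format(**fields) for name, template in COT_TEMPLATES.items()}


if __name__ == "__main__":
    prompts = build_cot_prompts("Alice visits the Louvre", "a woman in front of a glass pyramid")
    for name, prompt in prompts.items():
        print(f"[{name}]\n{prompt}\n")
```

Each resulting prompt would be sent to the LLM separately, and the returned rationales or rewritten sentences would then serve as the CoT knowledge and augmented data described above.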

Conditional Prompt Distillation

To distill CoT knowledge into a compact model, the approach uses a conditional prompt generator consisting of a transformer decoder with learnable queries. The queries produce conditional prompts that act as hints for the student model, steering its predictions toward those it would make with the CoT knowledge available. Training aligns the output distribution predicted from the knowledge-enhanced input with the one predicted from the prompt-enhanced input by minimizing the Kullback-Leibler divergence between them.
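The alignment step can be illustrated with a small, dependency-free sketch of a KL-based distillation loss. It assumes that each view yields per-token logits over the label set and that the knowledge-enhanced view is the target distribution. The direction of the divergence, the averaging over tokens, and the function names are assumptions made for illustration rather than details taken from the paper.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same labels."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )


def distillation_loss(knowledge_logits, prompt_logits):
    """Mean per-token KL between the knowledge-enhanced and prompt-enhanced views.

    Each argument is a non-empty list of per-token logit vectors.
    Assumption: the knowledge-enhanced view acts as the target distribution.
    """
    per_token = [
        kl_divergence(softmax(k), softmax(s))
        for k, s in zip(knowledge_logits, prompt_logits)
    ]
    return sum(per_token) / len(per_token)


if __name__ == "__main__":
    teacher_view = [[2.0, 0.5, 0.1], [0.2, 1.5, 0.3]]
    student_view = [[1.5, 0.7, 0.2], [0.1, 1.0, 0.9]]
    print(distillation_loss(teacher_view, student_view))
```

The loss is zero when both views predict the same distribution and grows as they diverge, so minimizing it pushes the prompt-enhanced predictions toward the knowledge-enhanced ones.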

Experimental Results

Experiments on several benchmark datasets (Twitter2015, Twitter2017, SNAP, WikiDiverse, and MNRE) show that the proposed method outperforms existing state-of-the-art methods. For instance, CoTPD improves over MoRe by 0.82% F1 on Twitter2015 and 5.96% F1 on MNRE.
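For readers less familiar with the metric, the sketch below shows how an F1 score over predicted entities is commonly computed. It assumes exact-match scoring over `(start, end, type)` spans, a common convention for NER benchmarks. The summary does not describe the paper's evaluation script, so the function name and the scoring convention here are illustrative assumptions.

```python
def span_f1(gold, predicted):
    """F1 over sets of (start, end, type) entity spans, using exact matching."""
    gold, predicted = set(gold), set(predicted)
    true_positives = len(gold & predicted)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(gold)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    gold = [(0, 1, "PER"), (3, 4, "LOC")]
    predicted = [(0, 1, "PER"), (3, 4, "ORG")]  # second span has the wrong type
    print(span_f1(gold, predicted))
```

In this example one of the two predicted spans matches a gold span exactly, so precision and recall are both 0.5 and the F1 score is 0.5.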

Ablation Studies and Effectiveness

Ablation studies indicate that each component of the CoT knowledge (noun, sentence, multimodality, style, entity, and image augmentation) contributes to performance. Conditional prompt distillation (CPD) proves more effective than traditional multi-view alignment, especially for high-level CoT knowledge.

Implications and Future Directions

Practical Implications

The proposed method can improve the interpretability, data efficiency, cross-domain generalization, and performance of existing multimodal models in practical applications. Specifically, it lets models draw on LLMs' reasoning capabilities, yielding a smaller, faster, and more efficient student model that still maintains high performance.

Theoretical Implications

From a theoretical perspective, the method addresses core issues in knowledge distillation, particularly in the context of multimodal input comprehension. It also extends the applicability of CoT approaches to more intricate, real-world settings.

Future Developments

Future research may focus on extending this methodology to other complex AI tasks beyond MNER and MRE. The approach could also be applied to larger multimodal datasets and more sophisticated LLMs, such as future generations of GPT models.

In conclusion, the paper presents a significant advance in distilling LLMs' reasoning capabilities into more compact and efficient models for multimodal tasks, balancing interpretability and performance in a way that offers substantial promise for future research and applications.