Enhancing AI with Structured Knowledge: A Pathway to Smarter Models

Introduction to Knowledge Integration in AI

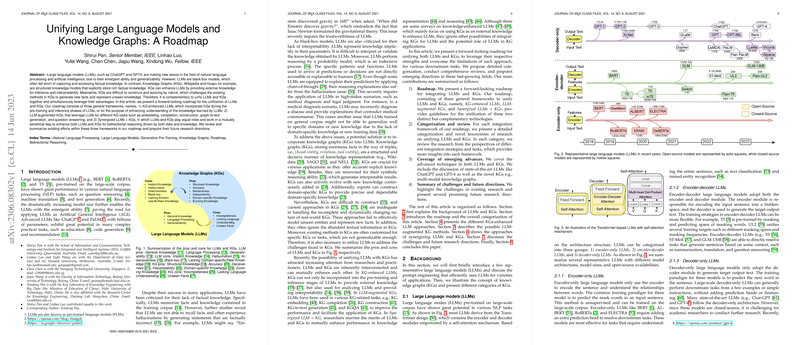

The integration of structured knowledge into AI systems is an important research direction in natural language processing (NLP) and AI. This paper presents a comprehensive roadmap for unifying large language models (LLMs), such as ChatGPT and GPT-4, with knowledge graphs (KGs) such as Wikidata. LLMs exhibit impressive language processing capabilities, but they are black-box models that often fail to capture and reliably recall factual knowledge, and they lack access to up-to-date information. Conversely, KGs store factual knowledge explicitly and in structured form, but they are costly to build and difficult to extend with new facts. Unifying LLMs with KGs aims to combine the strengths of both so that each compensates for the other's limitations.
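To make the contrast concrete: a KG stores facts as explicit (head, relation, tail) triples that can be queried exactly. The in-memory sketch below is illustrative only. The `Triple` and `TripleStore` classes and the sample facts are hypothetical and do not reflect the API of any real KG system such as Wikidata.

```python
from typing import NamedTuple, Optional


class Triple(NamedTuple):
    head: str
    relation: str
    tail: str


class TripleStore:
    """Hypothetical minimal KG: a set of (head, relation, tail) facts."""

    def __init__(self) -> None:
        self._triples: set[Triple] = set()

    def add(self, head: str, relation: str, tail: str) -> None:
        self._triples.add(Triple(head, relation, tail))

    def query(
        self,
        head: Optional[str] = None,
        relation: Optional[str] = None,
        tail: Optional[str] = None,
    ) -> list[Triple]:
        """Return all matching triples, sorted; None acts as a wildcard."""
        return sorted(
            t
            for t in self._triples
            if (head is None or t.head == head)
            and (relation is None or t.relation == relation)
            and (tail is None or t.tail == tail)
        )


kg = TripleStore()
kg.add("Paris", "capital_of", "France")
kg.add("Berlin", "capital_of", "Germany")
kg.add("France", "member_of", "European Union")

# Exact, explainable lookup: which entity is the capital of France?
print(kg.query(relation="capital_of", tail="France"))
```

Every answer traces back to a stored fact, but the store knows nothing it was not explicitly told: a query about an entity that was never added simply returns an empty list. An LLM has roughly the opposite profile, which is the gap the roadmap sets out to close.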

Roadmap for Unifying LLMs with KGs

The proposed roadmap outlines three general frameworks for combining LLMs and KGs:

- KG-Enhanced LLMs: KGs are used to improve LLMs during pre-training and inference and to make them more interpretable. Incorporating KG information during pre-training lets the models learn from explicit knowledge sources. At inference time, retrieving facts from a KG on demand can help LLMs produce more accurate and up-to-date responses. Finally, KGs can be used to interpret and probe the knowledge that LLMs have stored.

- LLM-Augmented KGs: LLMs apply their language understanding to KG-related tasks, including KG embedding, KG completion, KG construction, KG-to-text generation (producing natural-language text from graph structures), and KG-based question answering.

- Synergized LLMs + KGs: LLMs and KGs play equal roles and enhance each other, supporting bidirectional reasoning driven by both data and knowledge.
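The inference-time idea in the KG-enhanced framework can be sketched as a retrieval-augmented prompt: find the entities a question mentions, fetch their facts from the KG, and prepend those facts to the prompt. This is a minimal sketch under stated assumptions, not the roadmap's own method. Entity linking is naive substring matching, retrieval is one hop, the toy KG and the helper names (`link_entities`, `retrieve_facts`, `linearize`, `build_prompt`) are hypothetical, and the actual LLM call is omitted.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    head: str
    relation: str
    tail: str


# Hypothetical toy KG; a real system would query a large, curated KG.
KG = [
    Triple("Marie Curie", "born_in", "Warsaw"),
    Triple("Marie Curie", "award_received", "Nobel Prize in Physics"),
    Triple("Warsaw", "capital_of", "Poland"),
]


def link_entities(question: str, kg: list[Triple]) -> set[str]:
    """Naive entity linking: any KG head entity named verbatim in the question."""
    return {t.head for t in kg if t.head.lower() in question.lower()}


def retrieve_facts(entities: set[str], kg: list[Triple]) -> list[Triple]:
    """One-hop retrieval: every triple whose head is a linked entity."""
    return [t for t in kg if t.head in entities]


def linearize(triple: Triple) -> str:
    """Turn a triple into a short sentence the LLM can read."""
    return f"{triple.head} {triple.relation.replace('_', ' ')} {triple.tail}."


def build_prompt(question: str, kg: list[Triple]) -> str:
    """Assemble the grounded prompt that would be sent to an LLM."""
    facts = retrieve_facts(link_entities(question, kg), kg)
    context = "\n".join(linearize(t) for t in facts) or "(no facts found)"
    return (
        "Answer using the facts below; say 'unknown' if they are insufficient.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


print(build_prompt("Where was Marie Curie born?", KG))
```

Because facts are fetched per query, editing the KG changes what the model is told without any retraining. The trade-off is that answer quality now depends on entity linking and retrieval: in this sketch, a question that names no known entity gets a prompt stating "(no facts found)".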

Applications in AI

Combining LLMs and KGs benefits many applications, including AI assistants, recommendation systems, and web search. For instance, ChatGPT-like assistants backed by a KG can give more knowledgeable answers, while domain-specific KGs can help AI systems make more accurate recommendations and support medical diagnosis.

Challenges and Future Research

Despite this progress, several challenges remain. One significant issue is hallucination: LLMs can generate fluent but factually incorrect content. Detecting and correcting such errors, for example by checking generated statements against a KG, is critical for reliable AI applications. Another challenge is that many LLMs are black boxes available only through an API, so methods that inject or edit structured knowledge by modifying a model's parameters or internal representations cannot be applied to them. Finally, extending these methods to multi-modal data, which combines text with visual and auditory information, is a promising direction for future research.
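One simple way to flag possible hallucinations is to compare claims from an LLM's output with a reference KG. The sketch below assumes the hard part, turning generated text into (head, relation, tail) claims, has already been done. The toy KG, the `FUNCTIONAL_RELATIONS` set (relations assumed to have exactly one correct value), and `check_claim` are all hypothetical illustrations rather than an established detector.

```python
from enum import Enum
from typing import NamedTuple


class Triple(NamedTuple):
    head: str
    relation: str
    tail: str


class Verdict(Enum):
    SUPPORTED = "supported"
    CONTRADICTED = "contradicted"
    UNVERIFIABLE = "unverifiable"


# Hypothetical reference KG and an assumed list of single-valued relations.
KG = {
    Triple("Eiffel Tower", "located_in", "Paris"),
    Triple("Eiffel Tower", "completed_in", "1889"),
}
FUNCTIONAL_RELATIONS = {"located_in", "completed_in"}


def check_claim(claim: Triple, kg: set[Triple]) -> Verdict:
    """Compare one claim (already extracted from LLM output) with the KG."""
    if claim in kg:
        return Verdict.SUPPORTED
    if claim.relation in FUNCTIONAL_RELATIONS and any(
        t.head == claim.head and t.relation == claim.relation for t in kg
    ):
        # The KG holds a different value for a single-valued relation.
        return Verdict.CONTRADICTED
    # Absence from the KG is not evidence of falsehood (open-world assumption).
    return Verdict.UNVERIFIABLE


claims = [
    Triple("Eiffel Tower", "located_in", "Paris"),
    Triple("Eiffel Tower", "completed_in", "1901"),
    Triple("Eiffel Tower", "made_of", "wrought iron"),
]
for c in claims:
    print(c, "->", check_claim(c, KG).value)
```

The three sample claims come out as supported, contradicted, and unverifiable respectively. The third claim is true but missing from the toy KG, which shows why "not in the KG" should be reported as unverifiable rather than as a hallucination.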

Concluding Thoughts

The union of LLMs and KGs holds the promise of AI systems that are not only linguistically adept but also knowledgeable and more factually reliable. As research addresses the challenges in this area, we may see AI that understands and interacts with the world in more sophisticated ways, driven by the synergy between structured knowledge and language proficiency.