Full Parameter Fine-tuning for LLMs with Limited Resources

Introduction

The paper "Full Parameter Fine-tuning for LLMs with Limited Resources" by Kai Lv, Yuqing Yang, Tengxiao Liu, Qinghui Gao, Qipeng Guo, and Xipeng Qiu addresses a practical bottleneck in NLP: the hardware required to fine-tune large language models (LLMs). The authors introduce a new optimizer, LOw-Memory Optimization (LOMO), which makes full parameter fine-tuning of LLMs feasible without extensive hardware resources.

Background

Current LLMs, with parameter counts ranging from 30 billion to 175 billion, need large amounts of GPU memory for fine-tuning, often spread across multiple high-capacity GPUs, which limits accessibility for smaller research labs. Parameter-efficient fine-tuning methods such as LoRA and Prefix-tuning reduce resource requirements, but they update only a small set of parameters and so are not a substitute for full parameter fine-tuning.

Contributions

The primary contributions of this work are as follows:

- Theoretical Analysis: The authors argue that plain Stochastic Gradient Descent (SGD) is sufficient for fine-tuning LLMs. The usual difficulties of SGD, namely loss surfaces with large curvature, local optima, and saddle points, are less problematic because the loss surfaces of LLMs tend to be smoother.

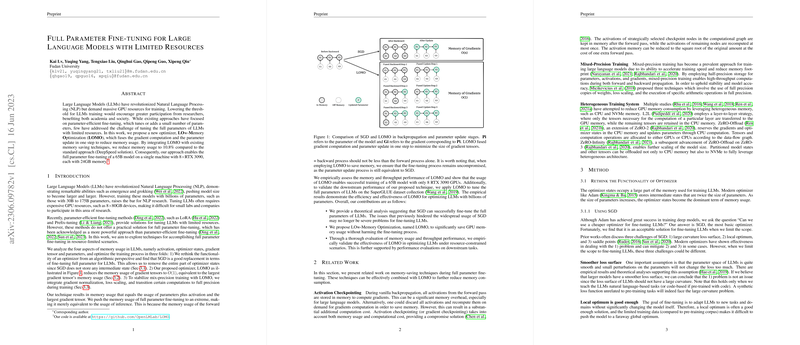

- LOMO Optimizer: LOMO, the proposed optimizer, fuses gradient computation and parameter update into a single step, reducing gradient memory to the size of the largest single gradient tensor rather than the combined size of all gradients.

- Memory Efficiency: The empirical evaluation shows that LOMO reduces memory consumption dramatically, enabling the fine-tuning of a 65 billion parameter model on a single machine with 8 RTX 3090 GPUs (24 GB each).
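
The fused update described in the LOMO Optimizer item can be illustrated with a toy example. The following is a minimal sketch in plain Python, not the authors' implementation: it assumes a hypothetical chain of scalar layers with a squared-error loss, and the function names (`forward`, `standard_sgd_step`, `fused_sgd_step`) are invented for illustration. It compares a standard SGD step, which keeps every gradient until the backward pass finishes, with a fused step that applies and discards each gradient as soon as it is computed.

```python
# Toy model (an assumption for illustration): y = w[n-1] * ... * w[0] * x,
# with loss = 0.5 * (y - target) ** 2.

def forward(weights, x):
    """Return the input seen by every layer, followed by the final output."""
    activations = [x]
    for w in weights:
        activations.append(w * activations[-1])
    return activations


def standard_sgd_step(weights, x, target, lr):
    """Store every gradient during backward, then update all weights."""
    acts = forward(weights, x)
    upstream = acts[-1] - target              # dLoss/dOutput
    grads = [0.0] * len(weights)
    for i in reversed(range(len(weights))):
        grads[i] = upstream * acts[i]         # dLoss/dw_i, kept until the end
        upstream = upstream * weights[i]      # dLoss/d(input of layer i)
    new_weights = [w - lr * g for w, g in zip(weights, grads)]
    return new_weights, len(grads)            # all gradients alive at once


def fused_sgd_step(weights, x, target, lr):
    """Update each weight as soon as its gradient exists, then discard it."""
    weights = list(weights)
    acts = forward(weights, x)
    upstream = acts[-1] - target
    live, peak_live = 0, 0
    for i in reversed(range(len(weights))):
        grad_w = upstream * acts[i]           # the only parameter gradient alive
        live += 1
        peak_live = max(peak_live, live)
        upstream = upstream * weights[i]      # propagate with the old weight
        weights[i] -= lr * grad_w             # in-place SGD update
        del grad_w                            # freed before the next layer
        live -= 1
    return weights, peak_live


w0 = [0.5, -1.2, 0.8, 1.5]
std_w, std_live = standard_sgd_step(w0, x=2.0, target=1.0, lr=0.01)
fused_w, fused_live = fused_sgd_step(w0, x=2.0, target=1.0, lr=0.01)
print(std_live, fused_live)
```

In this sketch both steps arrive at the same weights; only the number of parameter gradients held at one time differs. Note that the gradient passed to the previous layer is computed with the pre-update weight, which is what keeps the fused step equivalent to the standard one.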

Methodology

LOMO's effectiveness is based on three components:

- SGD Utilization: Because plain SGD keeps no per-parameter state, the authors avoid storing the optimizer states that optimizers like Adam require (first- and second-moment estimates for every parameter).

- Fusion Update: LOMO applies the parameter update inside backpropagation, as soon as each parameter's gradient has been computed. The gradients of all parameters therefore never need to be held in memory at the same time, which reduces memory overhead significantly.

- Precision Stabilization: To counter precision loss in mixed-precision training, LOMO incorporates gradient normalization, loss scaling, and full-precision computation for selected operations.
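
To make the loss-scaling idea in the Precision Stabilization item concrete, here is a minimal sketch using only the Python standard library. It is an illustration of the general technique, not LOMO's code: the gradient value `true_grad` and the scale factor `loss_scale` are hypothetical, and half precision is emulated by round-tripping through the `struct` module's `e` (IEEE 754 binary16) format.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", value))[0]


true_grad = 1e-8      # hypothetical gradient, too small for half precision
loss_scale = 1024.0   # hypothetical static scale factor (a power of two)

# Without scaling, the gradient underflows to zero when stored in fp16.
unscaled_fp16 = to_fp16(true_grad)

# Multiplying the loss by loss_scale multiplies every gradient by the same
# factor (chain rule), lifting it into the representable fp16 range.
scaled_fp16 = to_fp16(true_grad * loss_scale)

# The update then divides by loss_scale in full precision.
recovered = scaled_fp16 / loss_scale

print(unscaled_fp16, recovered)
```

Under these assumptions the unscaled gradient is lost entirely, while the scaled one survives with a small rounding error. A power-of-two scale is used here because it changes only the exponent, so the scaling itself introduces no additional rounding.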

Experimental Results

The experiments evaluate LOMO on memory usage, throughput, and downstream task performance:

- Memory Profiling: Replacing AdamW with LOMO reduced memory usage for fine-tuning a 7B parameter model from 102.2 GB to 14.58 GB. The savings come from no longer storing the full set of gradients and from eliminating optimizer states.

- Throughput: LOMO also trains faster than traditional optimizers in the reported setup. For a 7B parameter model, it achieves roughly an 11-fold improvement in training throughput compared to AdamW.

- Downstream Performance: Evaluations on SuperGLUE datasets across several LLM scales (7B, 13B, 30B, 65B) show that LOMO is competitive with, and often outperforms, parameter-efficient fine-tuning methods such as LoRA, particularly as the model scale increases.
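
The memory figures in the Memory Profiling item can be sanity-checked with back-of-the-envelope arithmetic. The sketch below rests on assumptions that are not stated in this summary: a parameter count of 6.74 billion for the "7B" model, the common mixed-precision accounting of 16 bytes per parameter for AdamW, and 2 bytes per parameter for a fused SGD update. Activations and the single transient gradient tensor are deliberately left out, so the results are lower bounds rather than reproductions of the reported numbers.

```python
GIB = 1024 ** 3  # bytes per GiB


def training_state_gib(num_params, bytes_per_param):
    """Memory for weights, gradients, and optimizer state, in GiB."""
    return num_params * bytes_per_param / GIB


NUM_PARAMS = 6.74e9  # assumed parameter count of the "7B" model

# Assumed bytes per parameter under mixed-precision AdamW:
#   2 (fp16 weights) + 2 (fp16 gradients)
#   + 12 (fp32 master weights, momentum, and variance)
ADAMW_BYTES = 2 + 2 + 12

# Assumed bytes per parameter with a fused SGD update:
#   2 (fp16 weights); gradients are transient and there is no optimizer state.
FUSED_SGD_BYTES = 2

adamw_gib = training_state_gib(NUM_PARAMS, ADAMW_BYTES)
fused_gib = training_state_gib(NUM_PARAMS, FUSED_SGD_BYTES)

print(f"AdamW:     {adamw_gib:.1f} GiB")
print(f"Fused SGD: {fused_gib:.1f} GiB")
```

Under these assumptions the estimates come out at roughly 100 GiB and 13 GiB, the same order as the 102.2 GB and 14.58 GB reported above. The gap would plausibly be covered by activations and the transient gradient buffer, though this sketch does not model them.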

Implications and Future Directions

LOMO has both practical and theoretical implications for NLP:

- Practical Implications: LOMO substantially lowers the hardware barrier to LLM research. Being able to fine-tune models with billions of parameters on consumer-grade GPUs can accelerate innovation and adoption in smaller research and industry settings.

- Theoretical Implications: The performance of LOMO suggests that the smoothness of loss surfaces in large models may be a pivotal factor in the feasibility of SGD-based optimization for fine-tuning. This warrants further exploration into the optimization landscapes of LLMs and the potential for more sophisticated, yet efficient, optimization algorithms.

Conclusion

The paper "Full Parameter Fine-tuning for LLMs with Limited Resources" demonstrates that full parameter fine-tuning of LLMs can be achieved on modest hardware configurations using the LOMO optimizer. This broadens access to LLM fine-tuning and provides a strong reference point for memory-efficient model training. Future research may explore parameter quantization techniques and further theoretical analysis of LLM optimization. LOMO marks a significant step towards making high-performance NLP research more inclusive and efficient.