LISA: A Novel Approach for Efficient LLM Fine-Tuning

Introduction to LISA

Layerwise Importance Sampled AdamW (LISA) is a fine-tuning method aimed at a major obstacle to working with LLMs: the heavy memory consumption of large-scale training. Existing Parameter Efficient Fine-Tuning (PEFT) techniques, notably Low-Rank Adaptation (LoRA), reduce that cost, but they do not consistently outperform full parameter training across all settings. LISA is proposed as an alternative that builds on a layerwise property observed in LoRA training to lower memory usage while preserving, or improving, training performance.

Motivation and Key Observations

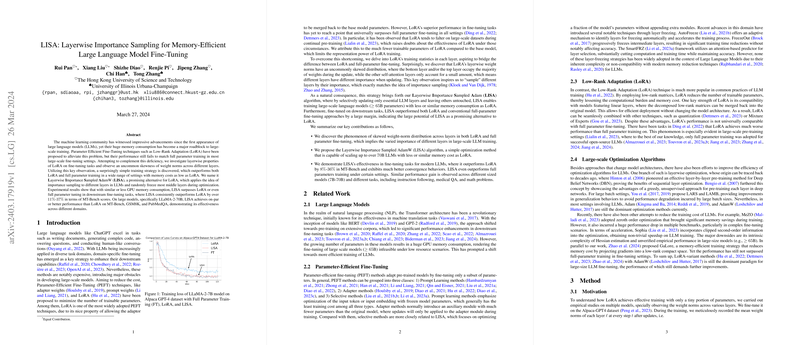

The motivation behind LISA comes from an analysis of how LoRA behaves across the different layers of an LLM. When LoRA is used for fine-tuning, the weight norms turn out to be noticeably skewed across layers. This uneven distribution suggests that layers differ in how much they matter during training, and that observation is the foundation of LISA. By applying importance sampling to the layers of the model, LISA updates only a selected subset of layers at a time, which significantly reduces memory consumption while maintaining or improving training effectiveness.
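To make the idea of a skewed layerwise weight norm concrete, here is a minimal sketch. It assumes each layer's weights are available as a flat list of numbers; the layer names, the toy values, and the helper functions `layer_norms` and `norm_shares` are all hypothetical and chosen only for illustration, not taken from the LISA paper.

```python
import math

def layer_norms(layers):
    """Return the Frobenius (L2) norm of each layer's weights.

    `layers` maps a layer name to a flat list of weight values.
    """
    return {
        name: math.sqrt(sum(w * w for w in weights))
        for name, weights in layers.items()
    }

def norm_shares(norms):
    """Express each layer's norm as a fraction of the total across layers."""
    total = sum(norms.values())
    return {name: value / total for name, value in norms.items()}

# Toy weights (made up): the first and last layers carry most of the norm.
toy_layers = {
    "embedding": [3.0, 4.0],
    "block_0": [0.3, 0.4],
    "block_1": [0.6, 0.8],
    "lm_head": [6.0, 8.0],
}

norms = layer_norms(toy_layers)
shares = norm_shares(norms)

# Print layers from largest to smallest share of the total norm.
for name, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{name:10s} norm={norms[name]:6.2f} share={share:.2f}")
```

In this toy setup the middle blocks account for only a small fraction of the total norm, which is the kind of imbalance that would motivate treating layers unequally during training.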

The LISA Algorithm

LISA applies AdamW updates to layers selectively, according to predetermined sampling probabilities, so that most of the middle layers are frozen at any given point during optimization. This selective updating is designed to emulate LoRA's skewed update pattern without the limitations that come from restricting updates to LoRA's low-rank space. In the reported experiments, LISA outperforms both LoRA and full parameter training across various settings, at lower or similar memory cost.
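The selective updating described above can be sketched as a simple schedule. The sketch below makes several assumptions that the text does not spell out: the embedding and output head are always trainable, a fixed number of middle layers is drawn uniformly at random, and the draw is repeated every `period` steps. The names `sample_trainable_layers`, `lisa_schedule`, `num_active`, and `period` are hypothetical, and no actual optimizer step is performed.

```python
import random

def sample_trainable_layers(num_middle_layers, num_active, rng):
    """Choose which layers are trainable for the next sampling period.

    Assumption: the embedding and LM head always train, while only
    `num_active` middle blocks (picked uniformly, without replacement)
    are unfrozen. Every other middle block stays frozen.
    """
    active = sorted(rng.sample(range(num_middle_layers), num_active))
    return ["embedding"] + [f"block_{i}" for i in active] + ["lm_head"]

def lisa_schedule(total_steps, period, num_middle_layers, num_active, seed=0):
    """Yield (step, trainable_layer_names), resampling every `period` steps."""
    rng = random.Random(seed)
    trainable = []
    for step in range(total_steps):
        if step % period == 0:
            # Start of a new period: redraw the set of unfrozen middle blocks.
            trainable = sample_trainable_layers(num_middle_layers, num_active, rng)
        yield step, trainable

# Example: 8 middle blocks, 2 unfrozen at a time, resampled every 3 steps.
schedule = list(lisa_schedule(total_steps=6, period=3,
                              num_middle_layers=8, num_active=2))
for step, names in schedule:
    print(step, names)
```

In a real training loop, the returned names would be used to decide which parameters receive gradients and optimizer updates (for example, by toggling `requires_grad` on parameters in PyTorch); that wiring is omitted here to keep the sketch framework-independent.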

Experimental Evaluation and Results

In evaluations on fine-tuning tasks for modern LLMs, LISA consistently outperformed LoRA by 11% to 37% in MT-Bench score. It also performed better on large models such as LLaMA-2-70B across several domains, including instruction following, medical QA, and math problems. LISA was memory efficient as well: it enabled training of models with up to 70B parameters using less GPU memory than LoRA.
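A rough back-of-the-envelope calculation shows why freezing layers saves memory. AdamW keeps two running moment estimates for every trainable parameter, so the optimizer state shrinks in proportion to the number of parameters being updated. The layer sizes below are hypothetical round numbers, not measurements from the paper, and the estimate covers optimizer state only (not weights, gradients, or activations).

```python
def adamw_state_bytes(trainable_params, bytes_per_value=4):
    """Memory for AdamW's optimizer state.

    AdamW stores a first-moment and a second-moment estimate per
    trainable parameter, hence the factor of 2. `bytes_per_value=4`
    assumes the moments are kept in 32-bit floats.
    """
    return 2 * trainable_params * bytes_per_value

# Hypothetical model shape (illustrative values only).
EMBED_PARAMS = 100_000_000
HEAD_PARAMS = 100_000_000
BLOCK_PARAMS = 200_000_000
NUM_BLOCKS = 32
ACTIVE_BLOCKS = 2  # middle blocks unfrozen at any one time (assumption)

# Full fine-tuning: every block contributes optimizer state.
full_bytes = adamw_state_bytes(
    EMBED_PARAMS + HEAD_PARAMS + NUM_BLOCKS * BLOCK_PARAMS
)
# Layerwise freezing: only the active blocks (plus embedding and head) do.
layerwise_bytes = adamw_state_bytes(
    EMBED_PARAMS + HEAD_PARAMS + ACTIVE_BLOCKS * BLOCK_PARAMS
)

print(f"full fine-tuning : {full_bytes / 1e9:.1f} GB of optimizer state")
print(f"2 active blocks  : {layerwise_bytes / 1e9:.1f} GB of optimizer state")
```

Under these assumed sizes, updating only two middle blocks at a time cuts the optimizer state by roughly an order of magnitude relative to updating every layer.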

Implications and Future Directions

LISA's memory-efficient training strategy offers a practical answer to the challenges of large-scale LLM fine-tuning. Its strong numerical results, and its ability to surpass existing PEFT techniques, make it a promising tool for future research and applications involving LLMs. Promising directions for future work include better layerwise importance sampling strategies and applying LISA to even larger models.

In summary, LISA's layerwise importance sampling makes LLM fine-tuning both more memory efficient and more effective. Conserving memory while improving performance metrics opens new avenues for research and practical applications of LLMs across various domains.