PandaLM: An Advanced Benchmark for Instruction Tuning Evaluation of LLMs

The paper introduces PandaLM, a judge LLM that serves as an automated, reliable benchmark for evaluating instruction-tuned LLMs. The authors address two key challenges in existing evaluation pipelines: evaluation accuracy and privacy protection. Beyond the usual correctness metrics, PandaLM weighs subjective factors such as conciseness and clarity, and its evaluation ability is comparable to that of GPT-3.5 and GPT-4.

Methodological Innovations

PandaLM goes beyond traditional evaluation metrics by incorporating a diverse set of subjective criteria, such as adherence to instruction and formality. Its base model is LLaMA-7B, fine-tuned to identify the best model among several candidates. Human-annotated data is used to check its alignment with human preferences, which supports its reliability and adaptability to diverse contexts.

The training data for PandaLM comprises responses from several models, including LLaMA-7B, Bloom-7B, Cerebras-GPT-6.7B, OPT-7B, and Pythia-6.9B, each fine-tuned using standard hyperparameters. The paper details a comprehensive approach to data filtering, utilizing GPT-3.5 as a baseline and employing heuristic strategies to minimize noise.
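This summary does not spell out the filtering heuristics. As a minimal sketch of one noise-reduction heuristic of this general kind, the snippet below assumes each response pair was judged twice by a reference judge, once in each response order, and keeps only the pairs whose verdict survives the swap. The `JudgedPair` type, its field names, the label values, and the example data are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass

# Hypothetical label set: "1" = first response better, "2" = second better, "tie".
# SWAP maps a verdict given in swapped order back to the original order.
SWAP = {"1": "2", "2": "1", "tie": "tie"}


@dataclass
class JudgedPair:
    instruction: str
    verdict_original: str  # verdict with responses presented as (A, B)
    verdict_swapped: str   # verdict with responses presented as (B, A)


def is_consistent(pair: JudgedPair) -> bool:
    """True when the swapped-order verdict, mapped back, matches the original."""
    return SWAP[pair.verdict_swapped] == pair.verdict_original


def filter_noisy(pairs: list[JudgedPair]) -> list[JudgedPair]:
    """Keep only pairs whose verdict is stable under response reordering."""
    return [p for p in pairs if is_consistent(p)]


pairs = [
    JudgedPair("Summarize the text.", "1", "2"),     # A preferred in both orders
    JudgedPair("Translate to French.", "1", "1"),    # first slot always wins: dropped
    JudgedPair("List three colors.", "tie", "tie"),  # stable tie
]
kept = filter_noisy(pairs)
print([p.instruction for p in kept])
```

The design choice here is to treat disagreement under reordering as a sign of position bias rather than a genuine preference, so such examples are discarded instead of relabeled.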

Evaluation and Results

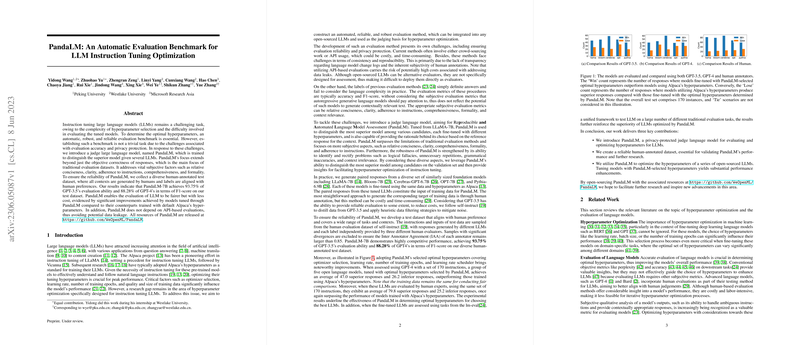

PandaLM's reliability as an evaluator is measured by F1-score on a diverse, human-annotated dataset, where it reaches 93.75% of GPT-3.5's and 88.28% of GPT-4's evaluation ability. The paper also applies PandaLM to automatic hyperparameter optimization, and the resulting models substantially outperform those trained with default configurations in the reported empirical evaluations.
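The percentages above are ratios of F1-scores. As a hedged illustration of that arithmetic, the sketch below computes a macro-averaged F1 over three verdict classes against human labels, then expresses one judge's score as a fraction of another's. The label values and the toy verdict lists are invented for illustration and are not the paper's data, and the choice of macro averaging is an assumption.

```python
def macro_f1(gold: list[str], pred: list[str], labels: list[str]) -> float:
    """Macro-averaged F1: the unweighted mean of per-class F1 scores."""
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical verdict labels: "1" / "2" = which response is better, or "tie".
LABELS = ["1", "2", "tie"]
human = ["1", "2", "tie", "1", "2", "1"]            # toy human annotations
reference_judge = ["1", "2", "tie", "1", "2", "1"]  # toy verdicts, stronger judge
candidate_judge = ["1", "2", "tie", "1", "1", "1"]  # toy verdicts, smaller judge

f1_reference = macro_f1(human, reference_judge, LABELS)
f1_candidate = macro_f1(human, candidate_judge, LABELS)
relative = f1_candidate / f1_reference
print(f"candidate reaches {relative:.2%} of the reference judge's F1")
```

A relative score like this says how close the candidate judge comes to the reference judge on the same human-labeled set; it does not by itself say how good either judge is in absolute terms.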

Models trained with PandaLM-selected hyperparameters improved significantly over counterparts trained with the conventional Alpaca hyperparameters. For instance, the accuracy of models such as Bloom and Cerebras improved notably under PandaLM's guidance.
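One way to picture judge-guided hyperparameter selection is a round-robin comparison: models tuned with different settings answer the same instructions, a judge compares each pair of answers, and the configuration with the most wins is kept. The sketch below illustrates that idea under stated assumptions and is not the paper's exact procedure. `toy_judge` is a hypothetical stand-in for a call to a judge model such as PandaLM (it simply prefers the shorter answer), and the configuration names and responses are invented.

```python
from collections import Counter
from itertools import combinations
from typing import Callable

# A judge takes (instruction, response_a, response_b) and returns "a", "b", or "tie".
Judge = Callable[[str, str, str], str]


def toy_judge(instruction: str, response_a: str, response_b: str) -> str:
    """Hypothetical stand-in for a judge model: prefers the shorter response."""
    if len(response_a) == len(response_b):
        return "tie"
    return "a" if len(response_a) < len(response_b) else "b"


def select_best_config(
    instructions: list[str],
    responses: dict[str, list[str]],  # config name -> one response per instruction
    judge: Judge,
) -> tuple[str, Counter]:
    """Compare every pair of configs on every instruction; most wins is selected."""
    wins: Counter = Counter({name: 0 for name in responses})
    for cfg_a, cfg_b in combinations(responses, 2):
        for i, instruction in enumerate(instructions):
            verdict = judge(instruction, responses[cfg_a][i], responses[cfg_b][i])
            if verdict == "a":
                wins[cfg_a] += 1
            elif verdict == "b":
                wins[cfg_b] += 1
            # Ties award no win to either side.
    best = max(wins, key=wins.get)
    return best, wins


instructions = ["Define overfitting.", "Name a sorting algorithm."]
responses = {  # invented configs and outputs, for illustration only
    "lr=2e-5,epochs=3": ["Fitting noise instead of signal.", "Quicksort."],
    "lr=2e-5,epochs=5": [
        "When a model fits noise in the training data too closely.",
        "Merge sort is one.",
    ],
    "lr=2e-4,epochs=3": ["Overfitting is overfitting.", "Bubble sort, probably."],
}
best, wins = select_best_config(instructions, responses, toy_judge)
print(best, dict(wins))
```

Passing the judge as a plain callable keeps the selection loop independent of how verdicts are produced, so a local judge model can replace `toy_judge` without touching the tallying logic.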

Implications and Future Directions

PandaLM offers a path toward more robust and efficient LLM instruction tuning, reducing reliance on costly API-based evaluations and mitigating data leakage risks. Its open-source release on GitHub promotes transparency and reproducibility, both essential for future research.

Looking ahead, the paper suggests the potential extension of PandaLM to support larger models and more comprehensive datasets. The integration with low-rank adaptation methods (LoRA) also provides a promising avenue for future research, although full fine-tuning currently shows superior performance.

Conclusion

Overall, the PandaLM framework presents a well-rounded solution for the evaluation and optimization of instruction-tuned LLMs. By accounting for subjective evaluation criteria and keeping evaluation local to protect privacy, it supports more reliable model tuning and invites further study of automated evaluation. The work shows how a dedicated judge model can improve both the development and the assessment of LLMs, with practical implications across AI applications.