An Overview of "A Survey on Fairness-aware Recommender Systems"

The paper "A Survey on Fairness-aware Recommender Systems" by Jin et al. provides a comprehensive review of methods and practices for integrating fairness into recommender systems. It gives a structured account of the biases that affect these systems and of the strategies used to mitigate them at different stages of a recommender system's lifecycle. The survey responds to growing concerns about fairness in automated systems, especially in domains where recommenders are actively deployed, such as e-commerce, education, and social media.

Key Insights from the Paper

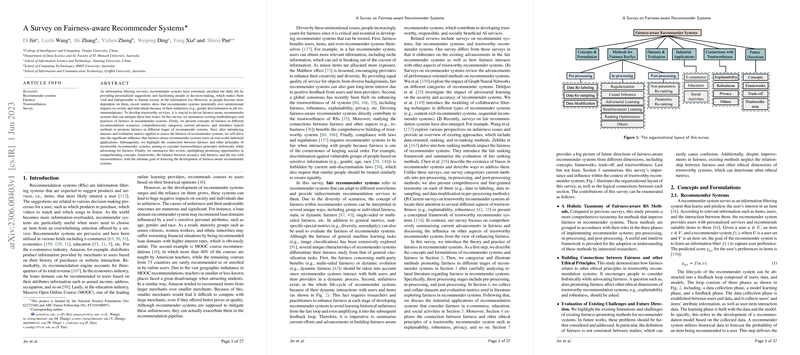

The paper identifies the forms of bias that lead to unfairness in recommender systems and groups them by the phase in which they arise: data collection, model learning, and the feedback loop. It highlights specific biases such as user bias, exposure bias, time bias, cold-start bias, ranking bias, and the popularity bias induced by the feedback loop. Each of these affects the fairness of recommendations differently, so each calls for a tailored mitigation strategy.
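As a rough illustration of how popularity concentration might be quantified, the sketch below computes a Gini coefficient over per-item interaction counts. The metric choice, the `gini` helper, and the `clicks` log are assumptions made for this example and are not taken from the survey.

```python
from collections import Counter

def gini(counts):
    """Gini coefficient of non-negative counts.

    0.0 means interactions are spread evenly across items;
    values closer to 1.0 mean a few items absorb most interactions.
    """
    values = sorted(counts)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    # Standard closed form on ascending-sorted values with 1-based ranks.
    weighted = sum(rank * v for rank, v in enumerate(values, start=1))
    return (2 * weighted) / (n * total) - (n + 1) / n

# Hypothetical interaction log: one item ID per recorded click.
clicks = ["a", "a", "a", "a", "a", "a", "b", "b", "c", "d"]
item_counts = Counter(clicks)
popularity_gini = gini(item_counts.values())
```

Tracking a value like this over successive retraining rounds is one possible way to see whether a feedback loop is concentrating exposure on already-popular items.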

The authors define fairness in recommender systems from multiple perspectives, including individual versus group fairness, static versus dynamic fairness, and single-sided versus multi-sided fairness. They argue that fairness should be considered for both protected and advantaged groups, on both the user and item sides, across varying recommendation scenarios.
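To make the group-fairness perspective concrete, the following sketch measures how position-discounted exposure is split between item groups across a set of ranked lists. It is only one of many possible group-level measures; the DCG-style discount, the function name `group_exposure`, and the sample data are assumptions for illustration and not a definition from the survey.

```python
import math

def group_exposure(rankings, item_group):
    """Return each item group's share of position-discounted exposure."""
    exposure = {}
    for ranking in rankings:
        for position, item in enumerate(ranking):
            # Higher positions get more weight (DCG-style log discount).
            weight = 1.0 / math.log2(position + 2)
            group = item_group[item]
            exposure[group] = exposure.get(group, 0.0) + weight
    total = sum(exposure.values())
    return {group: value / total for group, value in exposure.items()}

# Hypothetical data: two users' top-3 lists and a provider group per item.
rankings = [["a", "b", "c"], ["a", "c", "b"]]
item_group = {"a": "major", "b": "indie", "c": "indie"}
shares = group_exposure(rankings, item_group)
```

Here the single "major" item receives a larger exposure share than its one-third share of the catalog because it is ranked first in both lists. Whether that gap counts as unfair depends on the fairness definition adopted.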

Methodologies for Fairness

The survey sorts methodologies for achieving fairness into three main stages:

- Pre-processing Methods: These modify the training data, using techniques such as re-labeling, re-sampling, and data modification, to reduce inherent biases before they reach the model training phase.

- In-processing Methods: This stage incorporates fairness into model training itself, employing strategies such as regularization, causal inference, adversarial learning, reinforcement learning, and ranking optimization. These methods aim to create models that inherently account for fairness while learning from data.

- Post-processing Methods: These adjust the outputs of an already trained model, treating the model as a black box. The survey covers both non-parametric and parametric re-ranking methods for producing fairer recommendation lists.
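The post-processing stage is the easiest of the three to sketch in a few lines, since it needs only the scores a model has already produced. The example below is a minimal greedy re-ranker, assuming a simple rule that every prefix of the output must contain at least a minimum share of items from a protected group. The function `rerank_with_min_share`, the tuple layout, and the constraint itself are hypothetical and do not reproduce any specific method from the survey.

```python
import math

def rerank_with_min_share(candidates, k, protected, min_share):
    """Greedy top-k re-ranking over (item, score, group) tuples.

    Each prefix of length n contains at least floor(min_share * n)
    protected-group items, as long as enough such items are available.
    """
    by_score = sorted(candidates, key=lambda c: c[1], reverse=True)
    prot = [c for c in by_score if c[2] == protected]
    rest = [c for c in by_score if c[2] != protected]
    result, n_prot = [], 0
    while len(result) < k and (prot or rest):
        needed = math.floor(min_share * (len(result) + 1))
        # Take a protected item if the constraint requires it, if nothing
        # else is left, or if it simply has the best remaining score.
        take_protected = bool(prot) and (
            n_prot < needed or not rest or prot[0][1] >= rest[0][1]
        )
        if take_protected:
            result.append(prot.pop(0))
            n_prot += 1
        else:
            result.append(rest.pop(0))
    return result

# Hypothetical model scores; group "B" is treated as protected.
candidates = [
    ("i1", 0.9, "A"), ("i2", 0.8, "A"), ("i3", 0.7, "A"),
    ("i4", 0.6, "B"), ("i5", 0.5, "B"),
]
reranked = rerank_with_min_share(candidates, k=4, protected="B", min_share=0.5)
```

With `min_share=0.0` the function falls back to plain score ordering, which makes the accuracy cost of the constraint easy to inspect by comparing the two lists.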

Implications and Applications

The authors illustrate the practical impact of fairness-aware recommender systems in real-world applications across e-commerce, education, and social activities. They show how biases influence recommendation outcomes and outline how fairness-aware techniques can mitigate such impacts, fostering trustworthiness in systems.

Moreover, the paper addresses the significance of fairness in conjunction with other trustworthiness principles such as explainability, robustness, and privacy. It emphasizes that fairness cannot be isolated from these attributes if comprehensive trust is to be built in recommender systems. For instance, causal methods provide explanations alongside debiasing mechanisms, implicitly promoting transparency and understanding of how a model operates.

Future Outlook

While the paper lays substantial groundwork, it also identifies directions for future research: universal frameworks for fairness, better definitions and metrics, a more nuanced treatment of the trade-offs between fairness and system performance, and a deeper exploration of how fairness interacts with other trustworthiness attributes. Ongoing challenges include improving fairness without compromising accuracy and developing adaptive frameworks that scale across varying domains and fairness criteria.

In conclusion, Jin et al.'s survey highlights that fairness is not a standalone feature but an integral aspect of trustworthy AI. The paper serves as a foundational guide for researchers and practitioners aiming to develop or enhance recommender systems that are equitable, transparent, and reliable. Understanding the multi-faceted nature of bias and deploying fairness-aware methods strategically are both critical for advancing recommender systems in an increasingly automated world.