Integrating Object Detection Modality into Visual LLMs for Autonomous Driving Enhancements

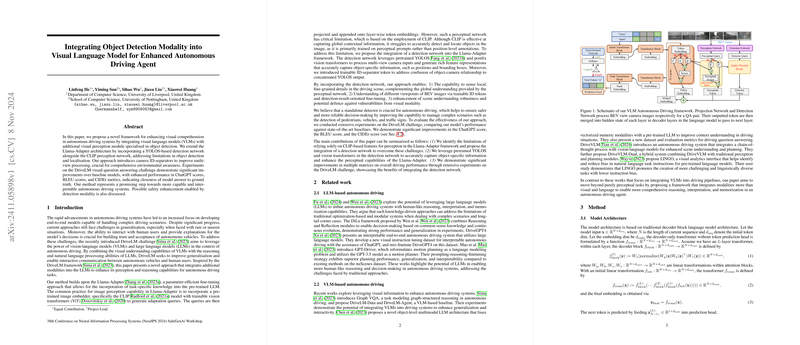

The paper presents a framework that combines visual LLMs (VLMs) with object detection to improve perception and decision-making in autonomous driving systems. The proposed system extends the Llama-Adapter architecture by adding a YOLOS-based detection network alongside a CLIP perception network, addressing existing limitations in object detection and localization.

Methodology

This work extends LLMs and VLMs with a dedicated object detection module. A pre-trained YOLOS model captures object-specific information such as positions and bounding boxes, while the CLIP model supplies perceptual embeddings. To analyze multiple viewpoints, the adapted YOLOS network uses trainable ID-separator tokens, which mark which camera each detected object belongs to and thereby improve multi-view processing for environmental awareness.
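The role of the ID-separator tokens can be illustrated with a minimal sketch. It is not the authors' implementation: the camera names, the `Detection` type, the toy embedding size, and the helper functions are all hypothetical, and the separators are plain random vectors standing in for parameters that a real model would train. The sketch only shows one plausible way to concatenate per-camera detection tokens so that the originating view stays recoverable.

```python
import random
from dataclasses import dataclass

# Hypothetical camera names; the source does not list the views used.
CAMERAS = ["front", "front_left", "front_right"]
EMBED_DIM = 4  # toy size, for illustration only


@dataclass
class Detection:
    label: str
    box: tuple       # (x_min, y_min, x_max, y_max), normalised to [0, 1]
    embedding: list  # stand-in for a detector output token


def make_separators(cameras, dim, seed=0):
    """Create one separator vector per camera.

    Randomly initialised here; in a real model these would be
    trainable parameters updated during fine-tuning.
    """
    rng = random.Random(seed)
    return {cam: [rng.uniform(-1, 1) for _ in range(dim)] for cam in cameras}


def build_sequence(detections_by_camera, separators):
    """Concatenate detection tokens view by view.

    Each view's tokens are preceded by that view's separator, and
    `owners` records which camera every position belongs to.
    """
    tokens, owners = [], []
    for cam in separators:  # dicts preserve insertion order
        tokens.append(separators[cam])
        owners.append(cam)
        for det in detections_by_camera.get(cam, []):
            tokens.append(det.embedding)
            owners.append(cam)
    return tokens, owners


# Toy input: made-up detections, not data from the paper.
dets = {
    "front": [Detection("pedestrian", (0.4, 0.5, 0.5, 0.9), [0.1] * EMBED_DIM)],
    "front_left": [
        Detection("car", (0.1, 0.4, 0.3, 0.6), [0.2] * EMBED_DIM),
        Detection("traffic_sign", (0.7, 0.1, 0.8, 0.2), [0.3] * EMBED_DIM),
    ],
}
seps = make_separators(CAMERAS, EMBED_DIM)
tokens, owners = build_sequence(dets, seps)
print(owners)
```

A view with no detections, such as `front_right` here, still contributes its separator, so the sequence layout stays the same regardless of how many objects each camera sees. Whether the original system handles empty views this way is an assumption of the sketch.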

Results

The experimental evaluation uses the DriveLM challenge dataset to validate the proposed method. The results show improvements over baseline models in ChatGPT scores, BLEU scores, and CIDEr metrics, all of which indicate closer alignment between model answers and ground-truth answers. Integrating object detection into the Llama-Adapter framework particularly improves the handling of complex driving scenarios, especially the localization of objects such as pedestrians, vehicles, and traffic signs, which is critical for safe autonomous navigation.
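To make concrete what "alignment with ground truth" means for an n-gram metric, the following sketch computes the clipped n-gram precision that sits at the core of BLEU. It is a simplified, single-reference illustration and not the challenge's evaluation code: full BLEU combines several n-gram orders and applies a brevity penalty, and CIDEr weights n-grams by TF-IDF. The function names and example sentences are made up for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, reference, n=1):
    """Fraction of candidate n-grams that also occur in the reference.

    Each candidate n-gram is credited at most as many times as it
    appears in the reference (the "clipping" step of BLEU).
    """
    cand_counts = Counter(ngrams(candidate.lower().split(), n))
    ref_counts = Counter(ngrams(reference.lower().split(), n))
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref_counts[gram])
                  for gram, count in cand_counts.items())
    return matched / total


# Made-up answer pair, for illustration only.
reference = "there is a pedestrian ahead"
candidate = "a pedestrian is ahead"

p1 = clipped_precision(candidate, reference, n=1)
p2 = clipped_precision(candidate, reference, n=2)
print(p1, p2)
```

In this example every candidate word appears in the reference, so the unigram precision is 1.0, but only one of the three candidate bigrams matches, so the bigram precision drops to one third. Higher-order n-grams are what make such metrics sensitive to word order as well as word choice.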

Implications

This research has both practical and theoretical implications. Practically, integrating object detection into VLM-based autonomous driving systems improves scene understanding and interaction capabilities, paving the way for more robust and interpretable decision-making. Theoretically, the research is an example of transformer models applied successfully to vision tasks, and it motivates further exploration of multi-modal data integration strategies within LLMs for real-world AI applications.

Future Work and Challenges

Despite the promising improvements observed, challenges remain. The framework depends on high-quality and diverse datasets, so broader evaluation across varied environmental conditions is needed to confirm its robustness and efficacy in real-world scenarios. The computational cost of such an integrated system may also hinder real-time deployment, especially on resource-constrained hardware. Finally, strengthening defenses against visual attacks through multi-modal data processing is a potential area of exploration, given the vulnerabilities that prior studies have exposed in visual LLMs.

Conclusion

The proposed integration of an object detection modality into visual LLMs for autonomous driving is a step toward more capable and interpretable AI agents. By extending the Llama-Adapter architecture with YOLOS and introducing multi-view processing through ID-separator tokens, the research achieves improvements across the reported performance metrics. Future research directions include improving computational efficiency and integrating further data modalities for a more comprehensive understanding of driving environments.