Enhancing Chat LLMs by Scaling High-quality Instructional Conversations

The paper "Enhancing Chat LLMs by Scaling High-quality Instructional Conversations" sets out to raise the performance ceiling of open-source conversational models. Its primary contribution is UltraChat, a systematically designed dataset of instructional conversations intended to improve chat LLMs beyond the level of existing open-source models.

Dataset Design and Construction

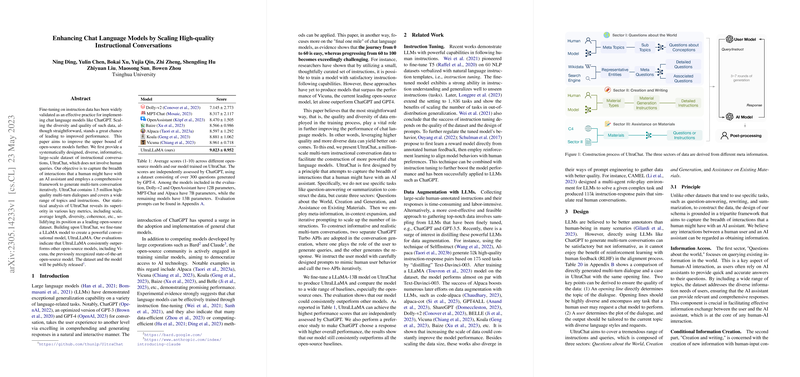

UltraChat consists of 1.5 million dialogues intended to cover the broad range of interactions humans may have with AI assistants. Notably, UltraChat does not rely on human queries, a deliberate choice that lets the dataset scale efficiently. The dataset is divided into three sectors: "Questions about the World," "Creation and Generation," and "Assistance on Existing Materials." This taxonomy is meant to give the data both diversity and richness, which are important for model training.

The dataset was built by combining meta-information with data expansion techniques, including iterative prompting with ChatGPT Turbo APIs to simulate user interactions. The authors' statistical analysis shows UltraChat ahead of comparable datasets in scale, average dialogue length, and both lexical and topic diversity.
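The iterative prompting described above can be pictured as a loop in which one model plays the user and another plays the assistant. The following is a minimal sketch under stated assumptions: `simulate_dialogue`, `stub_user`, and `stub_assistant` are hypothetical names, the stubs stand in for real model calls, and the number of rounds is arbitrary. It is not the authors' pipeline.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]                  # {"role": "user" | "assistant", "content": "..."}
ChatFn = Callable[[List[Message]], str]   # takes the history, returns the next utterance


def simulate_dialogue(
    opening_query: str,
    user_model: ChatFn,
    assistant_model: ChatFn,
    num_rounds: int = 3,
) -> List[Message]:
    """Alternate between an assistant model and a simulated user model.

    Each round appends one assistant reply; every round except the last
    also appends a follow-up query produced by the simulated user.
    """
    dialogue: List[Message] = [{"role": "user", "content": opening_query}]
    for round_idx in range(num_rounds):
        reply = assistant_model(dialogue)
        dialogue.append({"role": "assistant", "content": reply})
        if round_idx == num_rounds - 1:
            break  # end on an assistant turn
        follow_up = user_model(dialogue)
        dialogue.append({"role": "user", "content": follow_up})
    return dialogue


# Hypothetical stand-ins for real model calls (assumption: no network access).
def stub_user(history: List[Message]) -> str:
    asked = sum(1 for m in history if m["role"] == "user")
    return f"Follow-up question {asked + 1}"


def stub_assistant(history: List[Message]) -> str:
    return f"Answer to: {history[-1]['content']}"


if __name__ == "__main__":
    for turn in simulate_dialogue("What is photosynthesis?", stub_user, stub_assistant):
        print(f"{turn['role']}: {turn['content']}")
```

Passing the models in as plain callables keeps the loop independent of any particular API client, so the stubs can be swapped for real calls without changing the control flow.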

Model Development: UltraLLaMA

By fine-tuning a LLaMA model on UltraChat, the authors obtain UltraLLaMA, a conversational model. In the reported evaluations, UltraLLaMA consistently outperforms other open-source models such as Vicuna, Alpaca, and Koala. It also achieves the highest scores in the assessment conducted by ChatGPT, across evaluation sets that include commonsense reasoning and professional knowledge tasks.

The model is built on a LLaMA-13B backbone. Its training process takes the full dialogue context into account and places particular focus on response accuracy and relevance.
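One common way to train on full dialogue context is to feed the whole conversation to the model while computing the loss only on assistant tokens. The sketch below illustrates that idea under loud assumptions: the summary above does not confirm that UltraLLaMA uses this exact scheme, `toy_tokenize` is a hypothetical whitespace tokenizer rather than the LLaMA tokenizer, and `build_example` is an illustrative name. The value `-100` matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
from typing import Callable, Dict, List, Tuple

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def toy_tokenize(text: str) -> List[int]:
    """Hypothetical tokenizer: one deterministic id per whitespace token."""
    return [sum(ord(ch) for ch in tok) % 1000 for tok in text.split()]


def build_example(
    dialogue: List[Dict[str, str]],
    tokenize: Callable[[str], List[int]] = toy_tokenize,
) -> Tuple[List[int], List[int]]:
    """Flatten a dialogue into (input_ids, labels).

    All turns appear in input_ids, so the model sees the full context.
    Labels copy the ids for assistant turns (including the role tag) and
    are IGNORE_INDEX for user turns, so only assistant tokens are supervised.
    """
    input_ids: List[int] = []
    labels: List[int] = []
    for turn in dialogue:
        ids = tokenize(f"{turn['role']}: {turn['content']}")
        input_ids.extend(ids)
        if turn["role"] == "assistant":
            labels.extend(ids)
        else:
            labels.extend([IGNORE_INDEX] * len(ids))
    return input_ids, labels


if __name__ == "__main__":
    example = [
        {"role": "user", "content": "hi there"},
        {"role": "assistant", "content": "hello friend"},
    ]
    ids, labels = build_example(example)
    print(ids)
    print(labels)
```

Masking user turns keeps the model from being trained to imitate the simulated user while still letting every response condition on the entire preceding conversation.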

Implications and Future Directions

The findings suggest that enhancing the quality and diversity of training data is pivotal in pushing the boundaries of chat model capabilities. UltraLLaMA's success highlights the potential to create more sophisticated AI systems that can better understand and respond to complex human inquiries.

In practice, the improved models can be integrated into applications that require advanced conversational capabilities, such as customer service, education, and virtual collaboration environments. On the theoretical side, the results add to the understanding of model scaling and data efficiency in instruction-following tasks.

Future developments could explore multilingual data expansion and integration of additional contextual knowledge, potentially increasing model generalizability and effectiveness across varied domains. Furthermore, ongoing work should address the challenges of model hallucination and alignment with human ethical standards to mitigate potential risks.

The paper contributes a high-quality dataset and a strong model, positioning UltraLLaMA as a reference point for future research in open-source conversational AI.