An Analysis of "Topical-Chat: Towards Knowledge-Grounded Open-Domain Conversations"

The paper "Topical-Chat: Towards Knowledge-Grounded Open-Domain Conversations" introduces a dataset for research on open-domain conversational AI. Written by researchers at Amazon Alexa AI, it addresses the need for conversational agents that can hold meaningful, knowledge-grounded dialogues across many topics. The dataset, Topical-Chat, grounds its conversations in knowledge spanning eight broad topics, which supports conversations with both depth and breadth.

Contributions of Topical-Chat

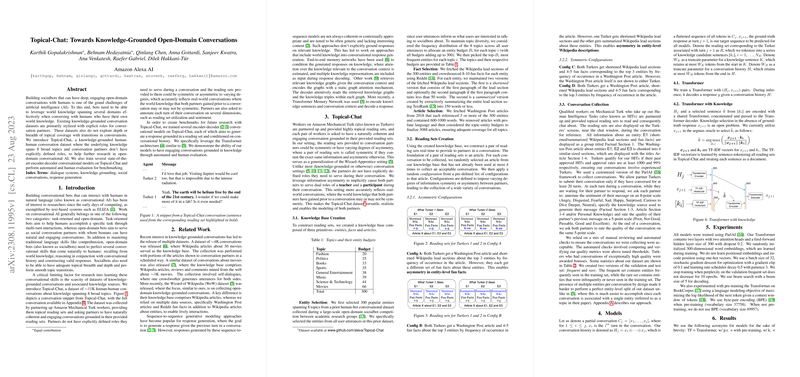

The primary contribution of this paper is the Topical-Chat dataset itself, which fills a gap among knowledge-grounded conversational datasets. Existing datasets often assign participants explicit roles or cover a narrow range of topics; Topical-Chat instead lets both participants converse without predefined roles, which better reflects how real conversations unfold. The dataset comprises approximately 11,000 human-human conversations grounded in knowledge on eight broad topics: fashion, politics, books, sports, general entertainment, music, science and technology, and movies.

Methodology

Topical-Chat was collected by pairing Amazon Mechanical Turk workers, who conversed after being given topical reading sets drawn from several sources, including Wikipedia, Reddit, and Washington Post articles. The variety of sources gives the conversations rich context, and because the two partners do not necessarily receive identical reading sets, the setup simulates the asymmetry in knowledge typical of human interactions. With no explicit conversational roles, either partner can act as both knowledge bearer and learner within the same conversation.
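To make the idea of knowledge asymmetry concrete, the following is a minimal sketch of how a pool of facts might be divided into two partially overlapping reading sets. The function name `make_reading_sets`, the `n_shared` parameter, and the alternating split are illustrative assumptions, not the procedure used to build Topical-Chat.

```python
from typing import List, Tuple


def make_reading_sets(facts: List[str], n_shared: int) -> Tuple[List[str], List[str]]:
    """Split a fact pool into two partially overlapping reading sets.

    Hypothetical helper: the first `n_shared` facts go to both partners,
    and the remaining facts are dealt out alternately so that each
    partner also knows something the other does not.
    """
    shared = facts[:n_shared]
    private = facts[n_shared:]
    set_a = shared + private[0::2]  # partner A: shared facts + every other private fact
    set_b = shared + private[1::2]  # partner B: shared facts + the remaining private facts
    return set_a, set_b


if __name__ == "__main__":
    pool = ["fact 1", "fact 2", "fact 3", "fact 4", "fact 5"]
    reading_a, reading_b = make_reading_sets(pool, n_shared=1)
    print("Partner A reads:", reading_a)
    print("Partner B reads:", reading_b)
```

Setting `n_shared` to the size of the pool gives both partners the same reading set, while setting it to zero gives them disjoint knowledge.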

Conversational Models and Evaluation

The dataset also serves as a benchmark for training and evaluating conversational models. The authors trained several encoder-decoder models based on the Transformer architecture, each tasked with generating a response conditioned on the conversation history and, for the knowledge-grounded variants, on the associated knowledge. The paper reports automated and human evaluations, which indicate that the generated responses are diverse and that incorporating knowledge grounding improves the results.
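One common way to condition a sequence-to-sequence model on both history and knowledge is to flatten them into a single input string. The sketch below illustrates that idea only; the function name `build_model_input`, the `<knowledge>` and `<sep>` markers, and the `max_turns` cutoff are hypothetical choices and are not taken from the paper, whose models may combine the two inputs differently.

```python
from typing import List


def build_model_input(
    history: List[str],
    knowledge: str,
    max_turns: int = 3,
    sep: str = " <sep> ",
    knowledge_tag: str = "<knowledge> ",
) -> str:
    """Flatten a knowledge snippet and recent turns into one input string.

    Hypothetical format: the knowledge comes first, followed by the last
    `max_turns` turns of the conversation, all joined by a separator.
    """
    recent_turns = history[-max_turns:]  # keep only the most recent turns
    parts = [knowledge_tag + knowledge] + recent_turns
    return sep.join(parts)


if __name__ == "__main__":
    turns = ["Hi there!", "Do you like jazz?"]
    snippet = "Jazz originated in New Orleans."
    print(build_model_input(turns, snippet, max_turns=1))
```

A model without knowledge grounding would receive only the conversation turns, which makes the two input formats easy to compare in an ablation.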

Automated Evaluation

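The paper evaluates the models with perplexity and unigram F1 on both the frequent and rare subsets of the dataset. Perplexity is the exponential of the average negative log-likelihood the model assigns to the reference tokens (lower is better), while unigram F1 is the harmonic mean of unigram precision and recall between a generated response and the reference (higher is better). Knowledge-grounded models achieve higher F1 scores and generate more diverse, informative responses. Together, these metrics give a quantitative view of how well the models make use of external knowledge.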
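The two metrics named above can be sketched in a few lines. This is a minimal illustration that assumes lowercased whitespace tokenization and natural-log token probabilities; the paper's exact tokenization and normalization are not specified here and may differ.

```python
import math
from collections import Counter
from typing import List


def unigram_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of unigram precision and recall.

    Assumes lowercased whitespace tokenization, which is a simplification.
    """
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    # Multiset intersection counts each shared token at most as often
    # as it appears in both the prediction and the reference.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def perplexity(token_log_probs: List[float]) -> float:
    """Exponential of the average negative log-likelihood (natural log)."""
    if not token_log_probs:
        raise ValueError("token_log_probs must not be empty")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


if __name__ == "__main__":
    print(unigram_f1("the cat sat", "the cat ran away"))
    print(perplexity([math.log(0.5)] * 4))
```

In the first call, two of the three predicted tokens match the four-token reference, so precision is 2/3, recall is 1/2, and F1 is 4/7. In the second, a model that assigns probability 0.5 to every token has a perplexity of 2.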

Human Evaluation

Human annotators rated the responses for comprehensibility, topical relevance, and interestingness. Knowledge-grounded responses were perceived as more relevant and engaging, although the models still have room to improve in how effectively they use the available knowledge. These results suggest that knowledge-grounded models can produce more naturally engaging dialogues.

Implications and Future Directions

The introduction of Topical-Chat has substantial implications for the development of open-domain conversational AI. It enables researchers to explore models that can handle the complexity of real-world dialogues without relying on structured interaction protocols. The dataset's breadth and realism make it a valuable resource for developing AI capable of nuanced understanding and response generation.

Future research may address the limitations observed in current models' ability to fully exploit knowledge sources. Possible advances include more sophisticated knowledge selection mechanisms, better integration of heterogeneous knowledge bases, and refined training procedures for conversational agents. Such work could improve AI systems in applications ranging from customer service to virtual personal assistants.

Conclusion

Topical-Chat is a significant step forward for open-domain conversational AI. By providing a realistic and versatile dataset, it invites a reevaluation of what conversational agents can do and lays the groundwork for systems that handle complex, knowledge-rich interactions with humans. Progress in this area could lead to systems that are both contextually aware and engaging to interact with.