An Analysis of LLM-EVAL: A Multi-Dimensional Evaluation Method for Open-Domain Conversational Systems

Introduction

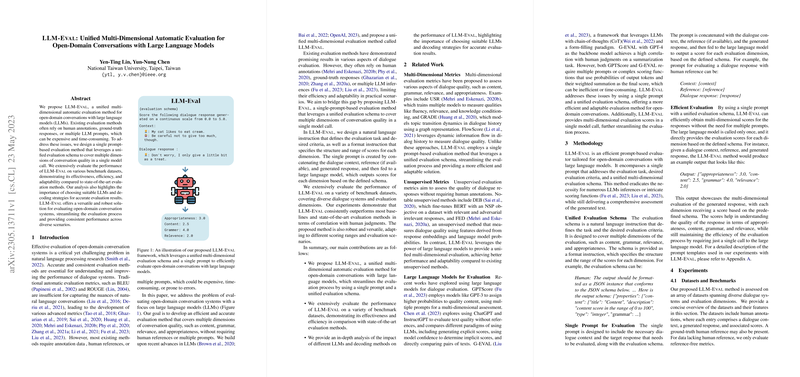

The paper "LLM-EVAL: Unified Multi-Dimensional Automatic Evaluation for Open-Domain Conversations with LLMs" proposes a novel approach to evaluating open-domain conversational systems using LLMs. Traditional evaluation metrics such as BLEU and ROUGE are described as inadequate for capturing the complexities inherent in natural language dialogue. Moreover, existing advanced metrics often require extensive human annotation or numerous inference prompts, limiting their practicality in large-scale systems. The paper addresses these limitations by introducing a unified evaluation schema capable of assessing multiple dimensions of dialogue quality through a single model prompt.

Methodology

LLM-EVAL uses a single-prompt framework, which simplifies the evaluation process and reduces resource demands while still scoring multiple dimensions of quality. The methodology employs a unified evaluation schema that covers criteria such as content, grammar, relevance, and appropriateness. A single prompt, constructed from the dialogue context, the response, and the schema, is fed into the LLM, which produces a score for each predefined criterion. This approach contrasts with methods that require multiple prompts or complex probability-based scoring functions, making LLM-EVAL a more efficient alternative.
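As a rough illustration of the single-prompt idea, the sketch below builds one prompt from a schema, a dialogue context, and a response, then parses a JSON reply into per-dimension scores. The prompt wording, the function names `build_prompt` and `parse_scores`, and the JSON reply format are assumptions made for illustration and are not the paper's exact template. The call to an actual LLM is deliberately left out.

```python
import json

# Dimensions named in the text; the 0-5 range is one of the two scales discussed.
DIMENSIONS = ("content", "grammar", "relevance", "appropriateness")


def build_prompt(context, response, dimensions=DIMENSIONS, max_score=5):
    """Assemble one evaluation prompt from schema, dialogue context, and response.

    The wording is hypothetical, not the paper's template.
    """
    schema = (
        f"Score the response on each dimension from 0 to {max_score}. "
        "Reply with a JSON object whose keys are: " + ", ".join(dimensions) + "."
    )
    dialogue = "\n".join(f"{speaker}: {utterance}" for speaker, utterance in context)
    return f"{schema}\n\nContext:\n{dialogue}\n\nResponse:\n{response}\n"


def parse_scores(reply, dimensions=DIMENSIONS, max_score=5):
    """Parse a JSON reply and check that every dimension has an in-range score."""
    data = json.loads(reply)
    scores = {}
    for dim in dimensions:
        value = float(data[dim])  # raises KeyError if a dimension is missing
        if not 0 <= value <= max_score:
            raise ValueError(f"{dim} score {value} outside 0-{max_score}")
        scores[dim] = value
    return scores


prompt = build_prompt(
    context=[("A", "Any plans for the weekend?"), ("B", "Thinking about a hike.")],
    response="Nice, which trail are you considering?",
)
# Stand-in for a real model reply; no LLM is called in this sketch.
example_reply = '{"content": 4, "grammar": 5, "relevance": 5, "appropriateness": 4}'
scores = parse_scores(example_reply)
```

Because all dimensions are requested in one prompt, a single model call per response is enough, which is the efficiency argument made above.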

Experiments and Results

The experiments demonstrate LLM-EVAL's effectiveness across a variety of benchmark datasets, including DSTC10 and Persona-DSTC10. In these evaluations, LLM-EVAL consistently showed high correlation with human judgments, surpassing traditional and state-of-the-art metrics such as USR, GRADE, and FlowScore. Both configurations, scoring on a scale of 0-5 and on a scale of 0-100, proved robust, with the 0-5 setting offering slight performance improvements. These results underscore the method's adaptability to different dialogue dimensions and its ability to outperform existing baselines.
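To make "correlation with human judgments" concrete, the sketch below computes a Spearman rank correlation between human ratings and automatic scores on each of the two scales. It assumes Spearman as the measure, since this summary does not say which coefficient was reported, and every number in it is made up for illustration. It is not data from the paper.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical ratings for five responses (illustrative values only).
human = [1, 2, 3, 4, 5]
metric_0_5 = [0, 2, 2, 4, 5]
metric_0_100 = [10, 35, 40, 80, 95]

rho_0_5 = spearman(human, metric_0_5)
rho_0_100 = spearman(human, metric_0_100)
```

Since Spearman depends only on rank order, rescaling scores from 0-5 to 0-100 does not change the coefficient by itself. Differences between the two settings come from how the model distributes its scores, for example the tie in `metric_0_5` above.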

Analysis of NLP Tools

A critical aspect of the LLM-EVAL framework is its reliance on dialogue-optimized LLMs. The paper contrasts dialogue-optimized models, including Anthropic Claude and OpenAI's ChatGPT, with more general text generation models such as GPT-3.5. The results indicate superior evaluation accuracy when using models tailored for conversational tasks, highlighting the importance of selecting an appropriate base model for reliable assessment in open-domain contexts.

Implications and Future Work

By streamlining the evaluation process, LLM-EVAL offers a scalable solution for assessing dialogue systems, paving the way for more efficient benchmarks in NLP and conversational AI. Future research could extend this methodology by incorporating feedback loops and reinforcement learning, potentially enhancing the adaptability and precision of automated evaluation processes. Another avenue for exploration involves addressing inherent biases in LLMs that may influence evaluation outcomes.

Conclusion

LLM-EVAL represents a significant advance in the evaluation of open-domain conversational systems, offering a comprehensive yet streamlined approach that correlates strongly with human evaluation. Its adoption can facilitate more consistent and efficient assessment workflows, making it particularly relevant as LLM-based dialogue systems continue to evolve. Nonetheless, the dependence on LLMs necessitates ongoing scrutiny of model biases and further refinement of evaluation schemas to fully realize the method's potential.