Memory-Based Model Editing at Scale

The paper "Memory-Based Model Editing at Scale" proposes a new approach to the limitations of current model editing techniques for neural networks, particularly LLMs. Existing model editors struggle with expressiveness and with identifying an edit's scope accurately, and their performance often degrades after many edits or on test inputs that are only loosely related to an edit. In response, the authors introduce SERAC (Semi-Parametric Editing with a Retrieval-Augmented Counterfactual Model), a memory-based approach that uses an explicit edit memory and a separate reasoning mechanism to modulate the base model's predictions.

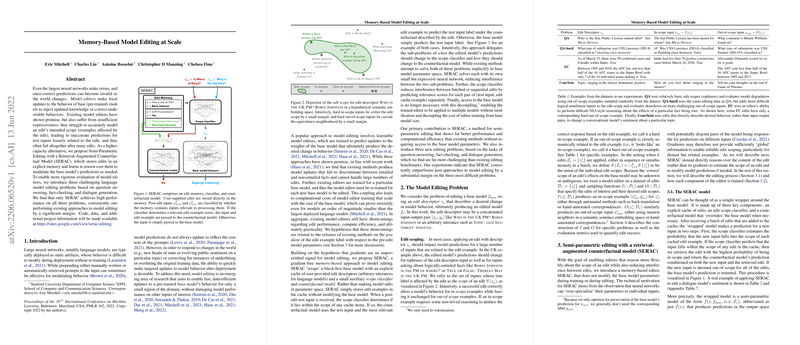

Approach

SERAC wraps an LLM in an external memory system that stores user-supplied edit descriptors without altering the model's weights. Decoupling edits from parameter space avoids many issues with traditional gradient-based editing methods, which rely on a signal derived from the pre-edit model parameters, one deemed insufficient for effective scope management. SERAC combines three components: an edit memory, a scope classifier, and a counterfactual model. For each input, the classifier assesses whether any cached edit is relevant. If a relevant edit is found, the counterfactual model predicts the output conditioned on that edit; otherwise, the prediction is deferred to the base model.
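The routing described above can be illustrated with a minimal sketch. It is not the authors' implementation: the class name `SeracSketch`, the `threshold` parameter, the representation of an edit as a `(prompt, target)` pair, and the token-overlap scorer `jaccard` are all assumptions made for illustration. In the paper the scope classifier and counterfactual model are trained neural networks, which simple callables stand in for here.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Edit = Tuple[str, str]  # hypothetical edit format: (prompt, target output)


def jaccard(x: str, edit: Edit) -> float:
    """Toy stand-in for a learned scope classifier: token overlap in [0, 1]."""
    a, b = set(x.lower().split()), set(edit[0].lower().split())
    return len(a & b) / len(a | b) if (a | b) else 0.0


@dataclass
class SeracSketch:
    base_model: Callable[[str], str]                 # frozen, never modified
    scope_score: Callable[[str, Edit], float]        # relevance of an edit to an input
    counterfactual_model: Callable[[Edit, str], str] # predicts given (edit, input)
    threshold: float = 0.5                           # assumed decision boundary
    memory: List[Edit] = field(default_factory=list)

    def add_edit(self, edit: Edit) -> None:
        # Applying an edit only appends to memory; no weights change.
        self.memory.append(edit)

    def predict(self, x: str) -> str:
        if not self.memory:
            return self.base_model(x)
        # Retrieve the cached edit that the classifier rates most relevant.
        best = max(self.memory, key=lambda e: self.scope_score(x, e))
        if self.scope_score(x, best) >= self.threshold:
            # In scope: answer with the counterfactual model, conditioned on the edit.
            return self.counterfactual_model(best, x)
        # Out of scope: defer to the unmodified base model.
        return self.base_model(x)


editor = SeracSketch(
    base_model=lambda x: "base",
    scope_score=jaccard,
    counterfactual_model=lambda edit, x: edit[1],  # toy: echo the edit target
)
editor.add_edit(("what is the capital of freedonia", "Zed City"))
print(editor.predict("the capital of freedonia is what"))  # in scope
print(editor.predict("how tall is mount example"))         # out of scope
```

Because the base model is only ever called, never updated, removing an edit in this sketch amounts to deleting an entry from `memory`.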

Methodological Rigor

To broaden the evaluation landscape, the authors introduce three challenging LLM editing tasks: question answering, fact-checking, and dialogue sentiment modification. These tasks emphasize logical entailment, comprehensive factual knowledge updates, and response sentiment shifting, and so represent diverse real-world applications of model editing. On these tasks, SERAC consistently outperforms existing model editors across a range of metrics, achieving markedly higher edit success (ES) while keeping drawdown (DD), the performance lost on inputs unrelated to the edit, low and consuming fewer resources.
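To make the two headline metrics concrete, the sketch below computes them for models represented as plain functions. It assumes a simplified exact-match definition: edit success is the edited model's accuracy on in-scope examples, and drawdown is the accuracy the edited model loses relative to the base model on out-of-scope examples. The paper's per-task definitions may differ in detail, and the helper names `accuracy`, `edit_success`, and `drawdown` are hypothetical.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, str]  # (input, expected output)
Model = Callable[[str], str]


def accuracy(model: Model, examples: Sequence[Example]) -> float:
    """Exact-match accuracy; defined as 0.0 on an empty example set."""
    if not examples:
        return 0.0
    return sum(model(x) == y for x, y in examples) / len(examples)


def edit_success(edited: Model, in_scope: Sequence[Example]) -> float:
    # Higher is better: in-scope inputs should show the edited behavior.
    return accuracy(edited, in_scope)


def drawdown(base: Model, edited: Model, out_of_scope: Sequence[Example]) -> float:
    # Lower is better: accuracy lost on inputs the edit should not affect.
    return accuracy(base, out_of_scope) - accuracy(edited, out_of_scope)


# Toy models backed by lookup tables (illustrative data only).
base = {"a": "1", "b": "2", "c": "3"}.get
edited = {"a": "9", "b": "2", "c": "0"}.get  # edit changed "a", but also broke "c"

print(edit_success(edited, [("a", "9")]))            # 1.0
print(drawdown(base, edited, [("b", "2"), ("c", "3")]))  # 0.5
```

An ideal editor would score an edit success of 1.0 and a drawdown of 0.0; the toy edited model above succeeds on the edit but damages an unrelated input, which the drawdown captures.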

Evaluation and Results

SERAC's efficacy shows across numerous test scenarios. Notably, when many edits are applied simultaneously, SERAC keeps edit success high and consistent even as task complexity increases, something traditional methods struggle with. For instance, in the QA-hard evaluation, SERAC achieves an edit success of 0.913 with very low drawdown, whereas alternative methods show lower success and higher drawdown. The same robustness appears in the scope-critical FC and ConvSent problems, where SERAC's semi-parametric architecture yields strong results, including on sentiment editing, reaffirming its utility and versatility.

Implications and Future Directions

The research shows that, by pairing an external memory with a dedicated reasoning mechanism, models can be edited dynamically without modifying their own parameters. This opens new avenues for keeping models adaptable and relevant in rapidly evolving domains, where real-time updates are more a necessity than a luxury.

However, SERAC is not without limitations. Chief among them is its potential dependence on the quality and scope of the dataset used to train the auxiliary classifier and counterfactual model. Additionally, while SERAC reduces interference between edits, the edit memory could in principle grow without bound, which motivates future research on memory management techniques such as periodic semantic distillation into the base model.

The separation of editing logic from model parameters is a promising direction for scalable LLM updates and adaptation. Future work could also explore more resource-efficient architectures with richer expressiveness, potentially integrating them with continual learning frameworks to balance memory growth against edit effectiveness. As exciting as these prospects are, the ethical deployment of such technologies demands careful consideration to prevent misuse.

Overall, the paper gives the AI research community a scalable mechanism for editing LLMs efficiently, widening the real-world applicability of AI while maintaining high levels of autonomy and precision in model behavior.