Analysis of "mLongT5: A Multilingual and Efficient Text-To-Text Transformer for Longer Sequences"

The paper "mLongT5: A Multilingual and Efficient Text-To-Text Transformer for Longer Sequences" introduces mLongT5, a model for efficient multilingual processing of long text sequences. The model extends the LongT5 architecture with multilingual capabilities akin to mT5: it is pretrained on the mC4 dataset and adopts UL2's pretraining tasks.

Model Architecture and Pretraining

mLongT5 builds on the efficient design of LongT5, whose attention mechanism is designed for long inputs and mitigates the quadratic growth in attention cost with input length that standard transformers face. mLongT5 is pretrained on mC4, a large multilingual corpus spanning 101 languages. Its pretraining departs from LongT5 by using UL2's Mixture-of-Denoisers (MoD) tasks instead of the PEGASUS-based objective that LongT5 used. The PEGASUS-based objective depends on splitting text into sentences, which is hard to do reliably across many languages. MoD does not depend on sentence segmentation, which makes it better suited to multilingual training.
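To illustrate why span-based denoising sidesteps sentence segmentation, here is a minimal sketch of a single span-corruption step. It is not the paper's or UL2's implementation: the function names `corrupt_spans` and `restore`, the whitespace tokenization (a stand-in for subword tokenization), the T5-style `<extra_id_N>` sentinel names, and the span length and corruption rate are all assumptions chosen for illustration. UL2's denoisers differ from one another in, among other things, span length and corruption rate, so varying these two parameters gives a rough feel for the mixture.

```python
import random


def corrupt_spans(tokens, span_len=3, corruption_rate=0.15, seed=0):
    """Replace random non-overlapping token spans with sentinel tokens.

    Returns (inputs, targets): inputs keep the uncorrupted tokens with one
    sentinel per masked span; targets list each sentinel followed by the
    tokens it replaced. No sentence boundaries are needed at any point.
    """
    rng = random.Random(seed)
    # Candidate starts sit on a grid with stride span_len + 1, so the chosen
    # spans can never overlap or touch each other.
    candidates = list(range(0, len(tokens) - span_len + 1, span_len + 1))
    wanted = max(1, round(len(tokens) * corruption_rate / span_len))
    starts = sorted(rng.sample(candidates, min(wanted, len(candidates))))

    inputs, targets, pos = [], [], 0
    for i, start in enumerate(starts):
        sentinel = f"<extra_id_{i}>"  # T5-style name, used here for illustration
        inputs.extend(tokens[pos:start])
        inputs.append(sentinel)
        targets.append(sentinel)
        targets.extend(tokens[start:start + span_len])
        pos = start + span_len
    inputs.extend(tokens[pos:])
    return inputs, targets


def restore(inputs, targets):
    """Invert corrupt_spans: put each masked span back in place of its sentinel."""
    spans, current = {}, None
    for tok in targets:
        if tok.startswith("<extra_id_"):
            current = tok
            spans[current] = []
        else:
            spans[current].append(tok)
    restored = []
    for tok in inputs:
        restored.extend(spans.get(tok, [tok]))
    return restored


tokens = ("Der schnelle braune Fuchs springt über den faulen Hund "
          "und läuft dann weiter in den Wald").split()
inputs, targets = corrupt_spans(tokens, span_len=3, corruption_rate=0.5)
print(" ".join(inputs))
print(" ".join(targets))
```

The model is trained to produce `targets` given `inputs`. Because the procedure only needs a token sequence, the same code applies unchanged to any language in the corpus.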

Three model sizes (Base, Large, and XL) were pretrained on 256 TPUv4 chips, with the larger models performing better on multilingual tasks. Pretraining used a large batch size and long token lengths, which prepares the model for the long inputs found in tasks such as question answering.
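To make the quadratic-growth concern concrete, the following back-of-the-envelope sketch counts query-key pairs for three attention patterns: full attention, a purely local window, and a local window plus one summary token per block of the input (a simplification modeled on the local-plus-global attention described for LongT5). The function names, the radius of 127, and the block size of 16 are assumptions for illustration, and the counts are upper bounds that ignore clipping at the sequence edges.

```python
def full_attention_pairs(n: int) -> int:
    """Every token attends to every token: n * n query-key pairs."""
    return n * n


def local_attention_pairs(n: int, radius: int) -> int:
    """Each token attends to itself and up to `radius` neighbours per side."""
    return n * (2 * radius + 1)


def local_plus_global_pairs(n: int, radius: int, block_size: int) -> int:
    """Local window plus one summary token per block of `block_size` tokens."""
    num_blocks = -(-n // block_size)  # ceiling division
    return n * (2 * radius + 1 + num_blocks)


for n in (512, 4096, 16384):
    full = full_attention_pairs(n)
    local = local_attention_pairs(n, radius=127)
    mixed = local_plus_global_pairs(n, radius=127, block_size=16)
    print(f"n={n:>6}  full={full:>12}  local={local:>10}  local+global={mixed:>11}")
```

The local pattern grows linearly with input length. The local-plus-global pattern still contains a term proportional to n squared divided by the block size, so it reduces the cost by a constant factor rather than removing the quadratic term altogether, which matches the source's wording that the growth is mitigated.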

Evaluation and Results

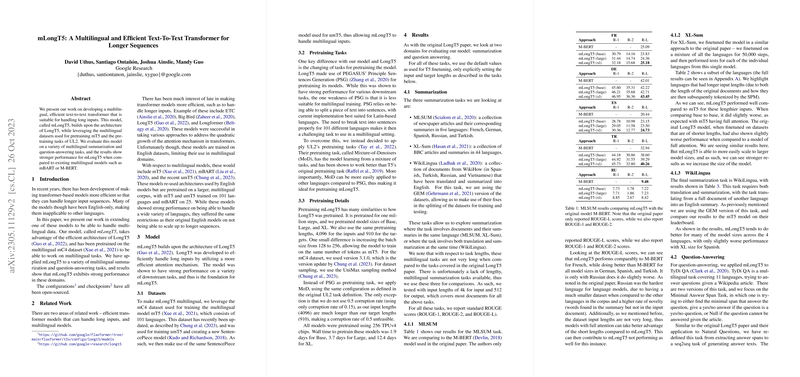

The evaluation covers summarization and question-answering tasks across multiple languages. For summarization, the datasets were MLSUM, XL-Sum, and WikiLingua. mLongT5 generally outperformed baseline models such as mBERT, notably in German, Spanish, and Turkish, though it scored slightly lower in Russian due to dataset-specific challenges. Its higher ROUGE scores support its effectiveness on languages with longer text sequences and on tasks that involve translation.
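For readers unfamiliar with ROUGE, the sketch below computes a simplified ROUGE-N score from n-gram overlap between a candidate summary and a reference. It is an illustration of the idea only: the function name `rouge_n` and the lowercase whitespace tokenization are assumptions, and published evaluations use standard ROUGE tooling whose tokenization and normalization differ, so scores from this sketch are not comparable to the paper's numbers.

```python
from collections import Counter


def rouge_n(candidate: str, reference: str, n: int = 1) -> dict:
    """Simplified ROUGE-N: clipped n-gram overlap as precision, recall, F1."""

    def ngrams(text: str) -> Counter:
        toks = text.lower().split()
        return Counter(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((cand & ref).values())
    precision = overlap / max(1, sum(cand.values()))
    recall = overlap / max(1, sum(ref.values()))
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge_n("the cat sat on the mat", "the cat lay on the mat", n=1)
print(scores)
```

In this example five of the six unigrams overlap, so precision, recall, and F1 are all 5/6.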

For question answering, the TyDi QA dataset, which features long input sequences, served as the benchmark. mLongT5 achieved higher Exact Match and F1 scores than mT5 at varying input lengths, supporting its ability to make use of longer inputs.
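Exact Match and F1 for extractive question answering are commonly computed per answer and then averaged. The sketch below shows one minimal version of both, assuming lowercase whitespace tokenization as the only normalization. The function names are hypothetical, and official evaluation scripts apply their own normalization rules, so this is not the scoring code behind the reported results.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace (a deliberately minimal normalization)."""
    return " ".join(text.lower().split())


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def token_f1(prediction: str, gold: str) -> float:
    """Harmonic mean of token-level precision and recall against the gold answer."""
    pred_toks = normalize(prediction).split()
    gold_toks = normalize(gold).split()
    if not pred_toks or not gold_toks:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_toks == gold_toks)
    common = sum((Counter(pred_toks) & Counter(gold_toks)).values())
    if common == 0:
        return 0.0
    precision = common / len(pred_toks)
    recall = common / len(gold_toks)
    return 2 * precision * recall / (precision + recall)


print(exact_match("Helsinki ", "helsinki"))
print(token_f1("in Helsinki", "Helsinki"))
```

Exact Match rewards only a perfect answer, while F1 gives partial credit: the prediction "in Helsinki" scores 0 on Exact Match against "Helsinki" but about 0.67 on F1.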

Implications and Future Directions

mLongT5 advances multilingual NLP by demonstrating effective handling of longer sequences, which matters for real-world applications that require cross-lingual capabilities. Its architecture and pretraining methodology make a case for combining efficient attention mechanisms with diversified pretraining tasks in similar NLP settings.

The model's results on the tested tasks suggest applications in complex multilingual text processing and could influence later work in machine translation, document summarization, and multilingual information retrieval. Future work might adapt the model to additional tasks or improve its efficiency and scalability to accommodate even longer sequences or a wider array of languages.

Beyond benchmarking mLongT5 against existing models, the paper lays groundwork for further research on efficient multilingual transformers, particularly for processing long texts.