The paper "Graph-ToolFormer: To Empower LLMs with Graph Reasoning Ability via Prompt Augmented by ChatGPT" investigates how to enable large language models (LLMs) to perform complex graph reasoning tasks. LLMs have advanced impressively on a range of natural language and multi-modal vision tasks. Even so, the authors identify significant limitations in how well these models handle graph-related tasks. Such tasks require multi-step logical reasoning, precise mathematical calculation, and an understanding of spatial and temporal structure, all areas where current LLMs still struggle.

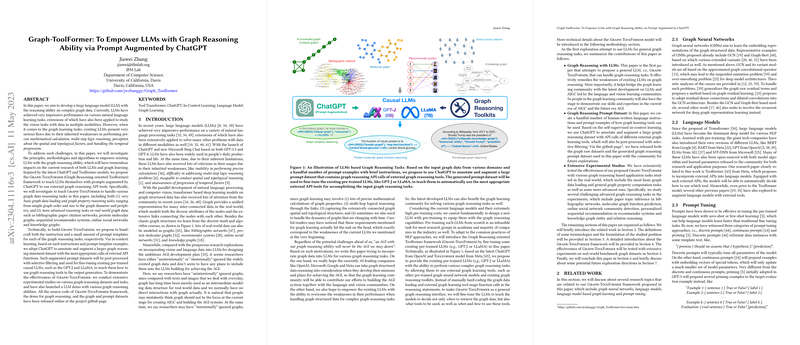

To address these deficiencies, the paper introduces the Graph-ToolFormer framework, which draws on ChatGPT and the Toolformer approach to give LLMs graph reasoning capabilities. The core idea is to use ChatGPT to augment prompts with calls to external graph reasoning API tools. The LLM then learns when and how to invoke those tools instead of computing graph results itself. This setup lets LLMs handle both basic and advanced graph reasoning tasks.
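The tool-calling idea can be illustrated with a minimal sketch of the general Toolformer-style pattern. The model's output text contains bracketed API calls, and a post-processing step executes each call and substitutes the result back into the text. The call syntax `[GR(graph, "tool")]`, the `TOOLS` and `GRAPHS` tables, and the `execute_calls` helper are all hypothetical and chosen for illustration. They are not the paper's actual notation or implementation.

```python
import re
from typing import Callable, Dict, List, Tuple

Edge = Tuple[int, int]

# Hypothetical tool registry: each tool maps an edge list to a numeric result.
TOOLS: Dict[str, Callable[[List[Edge]], int]] = {
    "order": lambda edges: len({v for e in edges for v in e}),  # number of vertices
    "size": lambda edges: len(edges),                            # number of edges
}

# Hypothetical graph store standing in for a graph-loading tool.
GRAPHS: Dict[str, List[Edge]] = {
    "toy": [(0, 1), (1, 2), (2, 3)],
}

# Matches hypothetical calls of the form [GR(toy, "order")].
CALL = re.compile(r'\[GR\((\w+),\s*"(\w+)"\)\]')


def execute_calls(text: str) -> str:
    """Replace every hypothetical API call in `text` with its computed result."""
    def run(match: re.Match) -> str:
        graph_name, tool_name = match.group(1), match.group(2)
        return str(TOOLS[tool_name](GRAPHS[graph_name]))
    return CALL.sub(run, text)


output = execute_calls('The toy graph has [GR(toy, "order")] nodes and [GR(toy, "size")] edges.')
print(output)  # The toy graph has 4 nodes and 3 edges.
```

The language model only has to emit the call at the right place in its answer. The exact arithmetic is delegated to the tool, which is the division of labor the framework relies on.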

Key components of the Graph-ToolFormer framework include:

- Basic Graph Data Reasoning:
  - Loading graph data.
  - Computing graph properties such as order (number of vertices), size (number of edges), diameter (length of the longest shortest path between any two vertices), and periphery (the set of vertices whose eccentricity equals the diameter).

- Advanced Graph Reasoning Tasks:
  - Handling real-world graph data, including bibliographic networks (e.g., citation networks).
  - Analyzing protein molecule graphs, which encode complex biological structures.
  - Understanding sequential recommender systems, which often rely on user-item interaction graphs.
  - Interpreting social networks with intricate relational data.
  - Reasoning over knowledge graphs that encode large amounts of interconnected information.
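The basic graph properties listed above are exactly the kind of computation an external tool performs on the LLM's behalf. The following is a minimal sketch of such a tool that uses plain breadth-first search rather than any particular graph library the paper may rely on. It assumes a non-empty, connected, undirected, unweighted graph given as an edge list. The function names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set, Tuple


def build_adjacency(edges: List[Tuple[int, int]]) -> Dict[int, Set[int]]:
    """Build an undirected adjacency map from an edge list."""
    adj: Dict[int, Set[int]] = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def eccentricity(adj: Dict[int, Set[int]], source: int) -> int:
    """Largest BFS distance from `source` (assumes the graph is connected)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return max(dist.values())


def graph_properties(edges: List[Tuple[int, int]]) -> dict:
    """Compute order, size, diameter, and periphery of a connected graph."""
    adj = build_adjacency(edges)
    ecc = {v: eccentricity(adj, v) for v in adj}
    diameter = max(ecc.values())  # diameter = maximum eccentricity
    return {
        "order": len(adj),
        # Count each undirected edge once, even if listed twice or reversed.
        "size": len({frozenset(e) for e in edges}),
        "diameter": diameter,
        # Periphery: vertices whose eccentricity equals the diameter.
        "periphery": sorted(v for v, e in ecc.items() if e == diameter),
    }


print(graph_properties([(0, 1), (1, 2), (2, 3), (1, 4)]))
# {'order': 5, 'size': 4, 'diameter': 3, 'periphery': [0, 3, 4]}
```

Running one BFS per vertex costs O(V·(V+E)), which is fine for small graphs. Large real-world graphs such as citation networks would call for a dedicated graph library.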

The authors argue that successfully equipping LLMs with these abilities would have a substantial impact on both LLM development and graph learning. Graph-ToolFormer aims to bridge the gap between the language understanding of LLMs and the precise computation that graph reasoning tasks demand.

The paper is significant because it proposes a concrete method for tool-augmented graph reasoning. It also opens new avenues for applying LLMs to domain-specific tasks on graph data, which span applications from scientific research to social media analytics.