Overview of Transformer-Based Visual Segmentation: A Survey

The paper "Transformer-Based Visual Segmentation: A Survey" provides an extensive review of the transformer architecture's application in visual segmentation tasks. The authors summarize recent advancements and emphasize the transition from traditional convolutional neural networks (CNNs) to transformer-based models.

Background and Motivation

Visual segmentation partitions images or videos into distinct regions, a capability with real-world applications such as autonomous driving and medical image analysis. Historically, segmentation methods relied on CNN architectures, but transformers have since led to significant breakthroughs. Originally designed for natural language processing, transformers have outperformed prior approaches on many vision tasks, largely because self-attention models global context more effectively than the local receptive fields of convolutions.
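The global-context property of self-attention can be illustrated with a minimal, standard-library sketch of single-head scaled dot-product self-attention. It assumes identity query/key/value projections for brevity (real models learn separate projection matrices), and the toy token values are made up. Unlike a 3x3 convolution, whose output at a position depends only on its 3x3 neighborhood, every output here is a weighted sum over all input tokens.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Assumes identity query/key/value projections for brevity; real models
    learn separate projection matrices. Returns (outputs, attention_matrix).
    """
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    outputs, attn = [], []
    for q in tokens:
        # Score this token against every token in the input: global context.
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in tokens])
        attn.append(weights)
        # Each output is a weighted sum over *all* tokens.
        outputs.append([sum(w * v[c] for w, v in zip(weights, tokens)) for c in range(d)])
    return outputs, attn


# Three toy patch embeddings (e.g. flattened image patches).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attn = self_attention(tokens)
```

Because softmax weights are strictly positive, every row of `attn` assigns some weight to every token, which is the sense in which each output "sees" the whole input.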

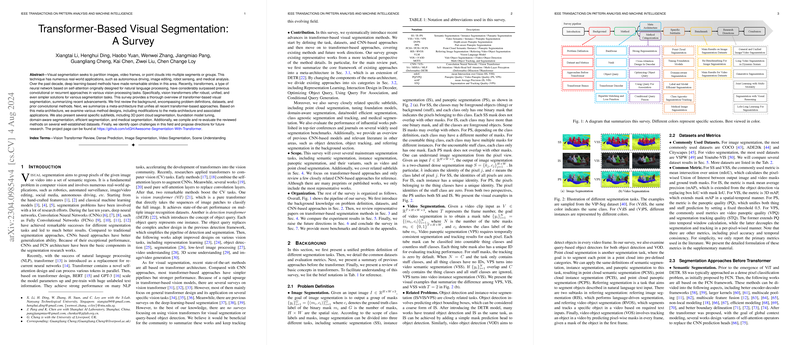

Meta-Architecture and Methodology

A core contribution of the paper is a unified meta-architecture for transformer-based segmentation methods, consisting of a feature extractor, object queries, and a transformer decoder. The paper shows how object queries act as dynamic anchors, which simplifies traditional detection pipelines by removing complex hand-engineered components such as anchor generation and non-maximum suppression.
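The flow through this meta-architecture can be sketched in plain Python. This is a minimal illustration under simplifying assumptions, not the survey's implementation: the feature extractor's output is assumed to be given as flattened per-pixel embeddings, each decoder layer is a single-head cross-attention step with a residual connection (no learned projections, query self-attention, or feed-forward network), and masks are predicted from the dot product between each refined query and each pixel embedding followed by a sigmoid, in the spirit of MaskFormer-style decoders. The function names `decoder_layer` and `segment` are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def decoder_layer(queries, pixel_feats):
    """Hypothetical single-head cross-attention: each query attends to all pixels."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    updated = []
    for q in queries:
        w = softmax([scale * dot(q, f) for f in pixel_feats])
        attended = [sum(w[j] * f[c] for j, f in enumerate(pixel_feats))
                    for c in range(len(q))]
        updated.append([qc + ac for qc, ac in zip(q, attended)])  # residual
    return updated


def segment(pixel_feats, queries, num_layers=2):
    """Refine object queries, then predict one soft mask per query."""
    for _ in range(num_layers):
        queries = decoder_layer(queries, pixel_feats)
    # Mask logit for (query, pixel) = dot product of their embeddings.
    return [[sigmoid(dot(q, f)) for f in pixel_feats] for q in queries]


# Toy 2x2 "image" flattened to 4 pixel embeddings, and 2 object queries.
pixel_feats = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
queries = [[1.0, 0.0], [0.0, 1.0]]
masks = segment(pixel_feats, queries)
```

Each query ends up with a soft mask that is higher on the pixels whose embeddings it resembles; no anchors or non-maximum suppression appear anywhere in the pipeline, since each query directly yields one mask.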

Advancements and Techniques

The survey categorizes the literature into:

- Strong Representations: Enhancements to vision transformers and hybrid CNN-transformer models have been explored to improve feature representation. Self-supervised learning methods have further strengthened these models, improving performance across diverse segmentation tasks.

- Cross-Attention Design: Redesigned cross-attention mechanisms have sped up training convergence and improved detection accuracy. Variants such as deformable attention, which attends to a small set of sampled locations around a reference point rather than to every position, handle multi-scale features efficiently.

- Optimizing Object Queries: Approaches that add positional information to queries or introduce additional supervision have been proposed to speed up convergence and improve accuracy.

- Query-Based Association: Using object queries as instance tokens for association has proven effective for video segmentation tasks such as video instance segmentation (VIS) and video panoptic segmentation (VPS), enabling efficient instance matching across frames.

- Conditional Query Generation: This technique adapts object queries based on additional inputs such as language, facilitating tasks like referring image segmentation.
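Query-based association can be illustrated with a small sketch: instance query embeddings from consecutive frames are compared by cosine similarity, and the one-to-one assignment with the highest total similarity links each instance to its counterpart in the next frame. This is one simple association strategy chosen for illustration, not a specific surveyed method; the function name `associate` and the toy embeddings are hypothetical. The brute-force search over permutations is only practical for a handful of queries; real systems typically use the Hungarian algorithm (for example, SciPy's `scipy.optimize.linear_sum_assignment`).

```python
import math
from itertools import permutations


def cosine(a, b):
    # Cosine similarity; assumes neither vector is all zeros.
    num = sum(x * y for x, y in zip(a, b))
    return num / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def associate(prev_queries, curr_queries):
    """Hypothetical matcher: result[i] is the index in curr_queries matched
    to prev_queries[i], maximizing total cosine similarity (brute force)."""
    if len(prev_queries) > len(curr_queries):
        raise ValueError("need at least as many current queries as previous ones")
    sim = [[cosine(p, c) for c in curr_queries] for p in prev_queries]
    best, best_score = None, -math.inf
    for perm in permutations(range(len(curr_queries)), len(prev_queries)):
        score = sum(sim[i][j] for i, j in enumerate(perm))
        if score > best_score:
            best, best_score = perm, score
    return list(best)


# Toy instance embeddings for three objects in frame t and frame t+1
# (the objects appear in a different order in the second frame).
prev = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
curr = [[0.0, 0.9, 0.1], [0.1, 0.0, 1.0], [1.0, 0.1, 0.0]]
matches = associate(prev, curr)
```

Because each query already summarizes one instance, tracking reduces to matching a few embedding vectors per frame rather than comparing dense masks, which is where the efficiency of this family of methods comes from.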

Subfields and Specific Applications

The authors also examine several related subfields in which transformers have been applied:

- Point Cloud Segmentation: Transformers are explored for their capability to model 3D spatial relations in point clouds.

- Tuning Foundation Models and Open Vocabulary Learning: Techniques to adapt large pre-trained models for specific segmentation challenges, including zero-shot learning scenarios, are discussed.

- Domain-aware Segmentation: Approaches like unsupervised domain adaptation highlight the challenges of transferring models between different domains.

- Label Efficiency and Model Efficiency: Methods that reduce dependence on large labeled datasets, and techniques for deploying segmentation models on mobile devices, are examined.
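For the open-vocabulary setting, one widely used pattern is to classify each predicted mask by comparing its embedding with text embeddings of arbitrary class names, so new categories can be added at inference time without retraining. The sketch below assumes the mask and text embeddings already exist (for example, from a segmentation decoder and a pre-trained vision-language text encoder); the function name `classify_open_vocab`, the `temperature` default, and the toy vectors are all hypothetical.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def classify_open_vocab(mask_embeddings, text_embeddings, class_names, temperature=0.01):
    """Hypothetical zero-shot classifier: label each mask with the class name
    whose text embedding has the highest cosine similarity."""
    texts = [normalize(t) for t in text_embeddings]
    results = []
    for m in mask_embeddings:
        m = normalize(m)
        # Cosine similarities scaled by a temperature, then softmax over classes.
        logits = [sum(a * b for a, b in zip(m, t)) / temperature for t in texts]
        mx = max(logits)
        exps = [math.exp(l - mx) for l in logits]
        total = sum(exps)
        probs = [e / total for e in exps]
        best = max(range(len(probs)), key=probs.__getitem__)
        results.append((class_names[best], probs[best]))
    return results


# Toy embeddings; in practice these would come from pre-trained encoders.
class_names = ["road", "car", "pedestrian"]
text_embeddings = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
mask_embeddings = [[0.1, 0.9, 0.0], [0.0, 0.2, 0.8]]
preds = classify_open_vocab(mask_embeddings, text_embeddings, class_names)
```

Extending the vocabulary only requires appending a name and its text embedding; the segmentation model itself is untouched.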

Benchmark and Re-benchmarking

Performance evaluations across several benchmark datasets identify Mask2Former and SegNeXt as leading methods in different segmentation settings. The paper outlines standard practices and highlights how specific architectural choices affect segmentation results.

Future Directions

The survey suggests several avenues for future work, including:

- The integration of segmentation tasks across different modalities through joint learning approaches.

- The pursuit of lifelong learning models that can adapt to evolving datasets and environmental conditions.

- Enhanced techniques for handling long video sequences in dynamic, real-world scenarios.

Conclusion

This survey serves as a foundational reference for researchers exploring transformer-based visual segmentation. It summarizes how transformers have reshaped visual data processing and offers insights into both practical implementations and the theoretical advances driving ongoing work in the field.