Exploring Retrieval-Augmented Language Models (RALMs): A Comprehensive Survey

Overview

Retrieval-Augmented Language Models (RALMs) have made significant strides in extending the capabilities of traditional language models (LMs) by integrating external knowledge. This survey examines the methodologies behind RALMs, covering both their technical paradigms and a range of applications.

The Essence of RALMs

At their core, RALMs aim to address limitations of conventional LMs, such as difficulty retaining knowledge and limited context awareness, by augmenting their responses with externally retrieved information. This allows the model to produce more accurate and contextually relevant outputs.

Key Components and Interactions

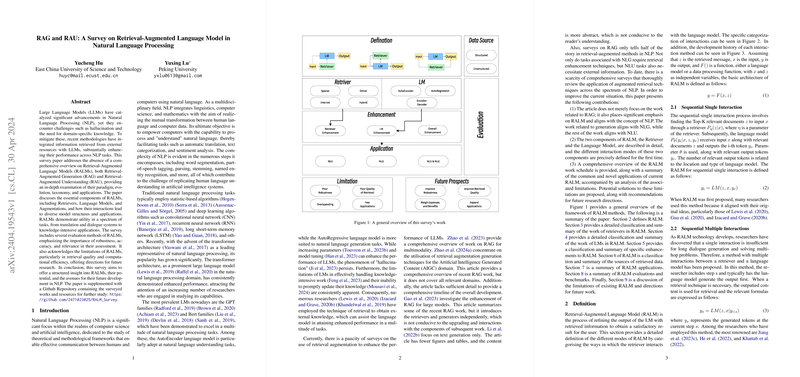

RALMs consist primarily of three components:

- Retrievers: These are tasked with fetching relevant information from external databases or the internet based on the input query.

- Language Models (LMs): The core model that generates or processes language, conditioned on both the query and the retrieved information.

- Augmentations: Techniques that improve the performance of the retriever and the language model, such as filtering out irrelevant retrieved information and adapting response generation to the context.
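To make this division of labor concrete, here is a minimal sketch of a retriever, a filtering augmentation, and the prompt that would be handed to the language model. It assumes a toy in-memory corpus and bag-of-words cosine similarity; real systems typically use a search index or dense embeddings. All names (`CORPUS`, `STOPWORDS`, `retrieve`, `filter_passages`, `build_prompt`) and the 0.2 threshold are hypothetical, and the language model call itself is left out.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus standing in for an external knowledge source.
CORPUS = [
    "The Eiffel Tower is located in Paris, France.",
    "Mount Everest is the highest mountain above sea level.",
    "Paris is the capital and largest city of France.",
]

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"a", "an", "and", "the", "is", "in", "of", "where", "what"}


def tokenize(text: str) -> list[str]:
    """Lowercase, split on non-alphanumerics, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Retriever: score every passage against the query and keep the top-k."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in corpus]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


def filter_passages(scored: list[tuple[float, str]], threshold: float = 0.2) -> list[str]:
    """Augmentation: discard retrieved passages whose score is below the threshold."""
    return [doc for score, doc in scored if score >= threshold]


def build_prompt(query: str, passages: list[str]) -> str:
    """What the language model would receive: retrieved context, then the query."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


query = "Where is the Eiffel Tower?"
print(build_prompt(query, filter_passages(retrieve(query, CORPUS))))
```

For this query the retriever's top-2 includes an unrelated passage with a score of 0, and the threshold filter removes it, so only the Eiffel Tower passage reaches the prompt.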

The interaction between these components falls into three main types:

- Sequential Single Interaction: Information is retrieved once and then used for generating the entire response.

- Sequential Multiple Interactions: Multiple rounds of retrieval and generation occur, refining the response progressively.

- Parallel Interaction: Retrieval and language modeling run independently and in parallel, and their outputs are combined to produce the final result.
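The three interaction patterns can be contrasted in a few lines of Python. This is a sketch under stated assumptions: `retrieve` and `generate` are injected callables, and the stubs `KB`, `toy_retrieve`, and `toy_generate` are hypothetical stand-ins for a real retriever and language model. For parallel interaction it shows one well-known instance of the pattern, kNN-LM-style interpolation of two independently computed next-token distributions, p(w) = λ·p_ret(w) + (1 - λ)·p_lm(w); the default λ = 0.25 is an arbitrary choice here.

```python
import re
from typing import Callable

Retriever = Callable[[str], list[str]]
Generator = Callable[[str, list[str]], str]

# Hypothetical keyword-indexed knowledge base standing in for an external source.
KB = {
    "eiffel": "The Eiffel Tower is in Paris.",
    "paris": "Paris is the capital of France.",
}


def toy_retrieve(query: str) -> list[str]:
    """Toy retriever: return every passage whose keyword appears in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return [passage for key, passage in KB.items() if key in words]


def toy_generate(query: str, context: list[str]) -> str:
    """Toy stand-in for a language model: simply concatenates the context."""
    return " ".join(context)


def sequential_single(query: str, retrieve: Retriever, generate: Generator) -> str:
    """Retrieve once, then generate the entire response from that context."""
    return generate(query, retrieve(query))


def sequential_multiple(query: str, retrieve: Retriever, generate: Generator, rounds: int = 3) -> str:
    """Alternate retrieval and generation; each draft is folded into the next retrieval query."""
    context: list[str] = []
    draft = ""
    for _ in range(rounds):
        for passage in retrieve(f"{query} {draft}"):
            if passage not in context:  # keep the accumulated context free of duplicates
                context.append(passage)
        draft = generate(query, context)
    return draft


def parallel_interpolate(p_lm: dict[str, float], p_ret: dict[str, float], lam: float = 0.25) -> dict[str, float]:
    """Mix two independently computed next-token distributions:
    p(w) = lam * p_ret(w) + (1 - lam) * p_lm(w)."""
    vocab = set(p_lm) | set(p_ret)
    return {w: lam * p_ret.get(w, 0.0) + (1 - lam) * p_lm.get(w, 0.0) for w in vocab}


query = "Which country is the Eiffel Tower in?"
print(sequential_single(query, toy_retrieve, toy_generate))    # The Eiffel Tower is in Paris.
print(sequential_multiple(query, toy_retrieve, toy_generate))  # ... Paris is the capital of France.
print(parallel_interpolate({"Paris": 0.5, "London": 0.5}, {"Paris": 1.0}))
```

Single-shot retrieval never sees the passage about France, because "paris" only enters the retrieval query after the first draft; the multi-round loop picks it up, which is the progressive refinement described above. The price is one retrieval and one generation call per round.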

Applications Across Fields

The utility of RALMs spans various NLP tasks:

- Translation and dialogue systems benefit from real-time access to updated translation databases and conversationally relevant data.

- Knowledge-intensive applications like question answering and text summarization see improvements through access to broad and detailed information.

Evaluation Techniques

Correctly assessing the performance of RALMs is crucial. Evaluation focuses on aspects such as:

- Robustness: How well the model performs under different conditions and input variations.

- Accuracy: The factual correctness of the retrieved information and of the generated response.

- Relevance: How contextually appropriate the retrieved information and generated responses are.
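These aspects are usually measured with task-specific metrics. The sketch below shows common proxies, not metrics prescribed by the survey: exact match after SQuAD-style answer normalization for accuracy, precision@k over labeled passage IDs for retrieval relevance, and the accuracy drop under perturbed inputs (for example, with noisy passages injected) for robustness. The function names and toy data are hypothetical.

```python
import re
import string


def normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, strip punctuation and articles, collapse spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def em_accuracy(predictions: list[str], golds: list[str]) -> float:
    """Accuracy proxy: fraction of predictions that exactly match the gold answer."""
    pairs = list(zip(predictions, golds))
    if not pairs:
        return 0.0
    return sum(normalize(p) == normalize(g) for p, g in pairs) / len(pairs)


def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Relevance proxy: share of the (at most k) top retrieved passage IDs labeled relevant."""
    top = retrieved[:k]
    if not top:
        return 0.0
    return sum(doc in relevant for doc in top) / len(top)


def robustness_gap(clean_accuracy: float, perturbed_accuracy: float) -> float:
    """Robustness proxy: accuracy lost when inputs are perturbed (smaller is better)."""
    return clean_accuracy - perturbed_accuracy


print(em_accuracy(["The Paris.", "london"], ["paris", "Berlin"]))  # 0.5
print(precision_at_k(["d1", "d7", "d3"], {"d1", "d3"}, k=2))      # 0.5
```

Normalizing before comparison keeps exact match from penalizing harmless differences in casing, punctuation, or articles, which matters when the generator phrases a correct answer slightly differently from the reference.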

Current Challenges and Future Directions

Despite their advancements, RALMs face several challenges:

- Retrieval Quality: Ensuring high-quality, relevant information is retrieved consistently.

- Computational Efficiency: Managing the added computational cost of retrieval, especially when multiple retrieval rounds or real-time responses are required.

- Adaptability: Extending the applications of RALMs to more diverse fields and tasks beyond typical NLP applications.

Looking forward, improving the interplay between retrievers and LLMs, refining augmentation techniques, and exploring new applications in areas like automated content moderation or personalized learning could further enhance the effectiveness and scope of RALMs.

Conclusion

RALMs represent a dynamic and evolving area of AI research, with significant potential to extend the abilities of language models by integrating external knowledge. As these systems mature, they may bring us closer to AI that understands and interacts with human language with greater depth and relevance. Continued innovation in this area is likely to yield more sophisticated AI tools that change how we interact with technology.