CLIP Surgery for Enhanced Explainability and Open-Vocabulary Performance

Li et al. propose a method for improving the explainability of Contrastive Language-Image Pre-training (CLIP) models, targeting two problems: opposite visualization and noisy activations. The method, termed "CLIP Surgery," modifies both the model's inference architecture and its feature processing, and it improves results on several open-vocabulary tasks.

Key Findings and Methodology

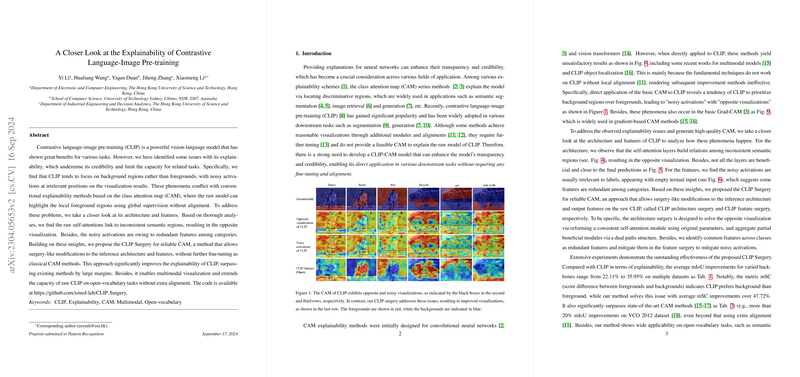

The authors identify two primary problems with CLIP's explainability. First, the model often favors background regions over the foreground, the opposite of what a human would highlight. Second, noisy activations obscure the relevant regions in the visualization. The analysis traces each problem to its own cause: parameters in the self-attention modules lead the model to focus on semantically opposite regions, and redundant features produce the noisy activations.

To address these issues, CLIP Surgery is introduced with two main components:

- Architecture Surgery: A "dual paths" design that reformulates self-attention within the model using v-v self-attention layers, in which the attention weights are computed from the value features alone. This corrects the inconsistent attention maps and enables more accurate visualization.

- Feature Surgery: This component aims to eliminate redundant features to reduce noise in activation maps. By calculating and removing redundant feature contributions, the method significantly enhances the clarity and relevance of visual explanations.
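
The two components can be illustrated with a small NumPy sketch. This is a simplified reading of the description above, not the authors' implementation. The function names `vv_self_attention` and `feature_surgery` are hypothetical, the attention is single-head with no learned projections, and the sketch assumes that the redundant feature can be estimated as the mean of the image-text feature products across all text classes.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def vv_self_attention(v):
    """Single-head v-v self-attention (illustrative).

    v: (num_tokens, dim) value features.
    The attention weights come from value-value similarity instead of
    the usual query-key similarity.
    """
    scale = v.shape[-1] ** -0.5
    attn = softmax(v @ v.T * scale, axis=-1)  # (num_tokens, num_tokens)
    return attn @ v                           # (num_tokens, dim)


def feature_surgery(image_feats, text_feats):
    """Similarity map with a shared (redundant) component removed.

    image_feats: (num_tokens, dim); text_feats: (num_classes, dim).
    Assumption: redundancy is the mean feature product across classes.
    Returns a (num_tokens, num_classes) similarity map.
    """
    # Element-wise product for every token/class pair: (tokens, classes, dim)
    prod = image_feats[:, None, :] * text_feats[None, :, :]
    # Component common to all classes, treated here as redundant
    redundant = prod.mean(axis=1, keepdims=True)
    # Remove it, then sum over channels to obtain similarities
    return (prod - redundant).sum(axis=-1)
```

Under this assumption, any component shared by every text feature cancels out of the similarity map, so only the class-specific part of each text embedding contributes to the visualization.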

Experimental Results

The experiments demonstrate the efficacy of CLIP Surgery across various settings:

- Explainability: The mIoU (mean Intersection over Union) and mSC metrics improve significantly across multiple datasets, indicating that the model's visualizations align more closely with human perception.

- Open-Vocabulary Tasks: The approach shows strong results in open-vocabulary semantic segmentation and multi-label recognition. For instance, CLIP Surgery improves mAP by 4.41% for multi-label recognition on NUS-Wide and surpasses state-of-the-art methods on Cityscapes segmentation by 8.74% in mIoU.

- Interactive Segmentation: When integrated with models like the Segment Anything Model (SAM), CLIP Surgery provides high-quality visual prompts without manual input, demonstrating a practical application in generating segmentation points directly from text.
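
As a rough illustration of how text-driven point prompts could be derived, the sketch below converts a similarity map for one text query into a handful of (x, y) coordinates. Everything here is an assumption made for illustration: the helper name `similarity_to_points`, the min-max normalization, the 0.8 threshold, and the cap of five points are not taken from the paper, and the sketch does not call SAM itself.

```python
import numpy as np


def similarity_to_points(sim_map, threshold=0.8, max_points=5):
    """Pick the strongest locations of a similarity map as point prompts.

    sim_map: (height, width) similarity scores for a single text query.
    Returns a list of (x, y) pixel coordinates, strongest first.
    The threshold and max_points values are illustrative defaults.
    """
    sim = np.asarray(sim_map, dtype=float)
    lo, hi = sim.min(), sim.max()
    norm = (sim - lo) / (hi - lo + 1e-8)       # min-max normalize to [0, 1]
    order = np.argsort(norm, axis=None)[::-1]  # flat indices, highest first
    points = []
    for flat_index in order[:max_points]:
        row, col = np.unravel_index(flat_index, norm.shape)
        if norm[row, col] < threshold:
            break                              # remaining scores are lower
        points.append((int(col), int(row)))    # (x, y) order
    return points
```

The returned coordinates could then be passed to a promptable segmentation model as positive points, replacing manually placed clicks.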

Implications and Future Directions

The paper underscores the potential of enhancing multimodal models like CLIP for better transparency and performance in diverse applications. The authors speculate on broader implications for AI, suggesting that such enhancements in model explainability could improve trust and usage scope in critical fields such as medical diagnostics and autonomous driving.

Looking forward, further developments might include adapting CLIP Surgery to other vision-language tasks and exploring integration with other model architectures. Additionally, future work could investigate the training process of CLIP more closely to address identified redundancies from the outset, potentially leading to models that are inherently more interpretable and efficient.

Overall, CLIP Surgery improves both model explainability and task performance, a step toward more robust and human-aligned AI systems.