Emergent Autonomous Scientific Research Capabilities of LLMs

The paper investigates the autonomous scientific research capabilities of LLMs through the development of an Intelligent Agent system. The Agent, which combines multiple LLMs such as OpenAI's GPT-3.5 and GPT-4, autonomously designs, plans, and executes scientific experiments. It interacts with tools, searches the internet, runs experiments on robotic hardware, and combines information from different sources, illustrating how LLMs could advance automated scientific research.

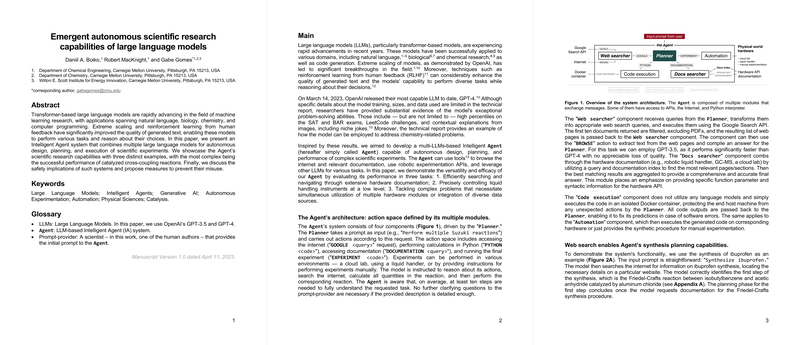

The Agent's architecture consists of four components: the Planner, Web searcher, Docs searcher, and Code execution modules, each contributing a distinct function needed to conduct scientific experiments. The Planner coordinates the entire process, from receiving a prompt to carrying out the required experiments, while the Web searcher and Docs searcher retrieve relevant information. The Code execution component improves operational safety by running code in an isolated environment, which limits the risk posed by unexpected actions from the Planner.
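The division of labor among these components can be illustrated with a minimal Python sketch. It is not the paper's implementation: the action labels (`WEB`, `DOCS`, `PYTHON`), the function names, and the canned search results are hypothetical, and the Planner's steps are scripted by hand rather than generated by an LLM. Running code in a child interpreter process with a timeout is also only a weak stand-in for the isolated environment the paper describes.

```python
import subprocess
import sys
from typing import Callable, Dict, List, Tuple


def web_search(query: str) -> str:
    """Hypothetical stand-in for the Web searcher; returns a canned string."""
    return f"[web results for: {query}]"


def docs_search(query: str) -> str:
    """Hypothetical stand-in for the Docs searcher; returns a canned string."""
    return f"[documentation excerpt for: {query}]"


def execute_code(source: str, timeout_s: float = 10.0) -> str:
    """Run code in a separate interpreter process and return its output.

    Assumption of this sketch: a child process with a timeout approximates
    isolation. A real deployment would need a proper sandbox.
    """
    try:
        result = subprocess.run(
            [sys.executable, "-I", "-c", source],  # -I: isolated mode
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return "error: execution timed out"
    if result.returncode != 0:
        return f"error: {result.stderr.strip()}"
    return result.stdout.strip()


class Planner:
    """Routes each planned step to the module that handles it."""

    def __init__(self) -> None:
        self.modules: Dict[str, Callable[[str], str]] = {
            "WEB": web_search,
            "DOCS": docs_search,
            "PYTHON": execute_code,
        }

    def run(self, steps: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
        """Execute (action, argument) steps and collect the observations."""
        history: List[Tuple[str, str]] = []
        for action, argument in steps:
            handler = self.modules.get(action)
            if handler is None:
                observation = f"error: unknown action {action!r}"
            else:
                observation = handler(argument)
            history.append((action, observation))
        return history


if __name__ == "__main__":
    # Scripted steps stand in for what an LLM-based Planner would emit.
    scripted_steps = [
        ("WEB", "Suzuki coupling reaction conditions"),
        ("DOCS", "liquid handler transfer command"),
        ("PYTHON", "print(2 + 3)"),
    ]
    for action, observation in Planner().run(scripted_steps):
        print(f"{action}: {observation}")
```

Keeping the modules behind a single dispatch table means the Planner never runs generated code in its own process; anything it wants executed passes through `execute_code`, which is where a stronger isolation mechanism would be substituted.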

One notable application of the Intelligent Agent was planning and executing chemical syntheses, including the synthesis of compounds such as ibuprofen, aspirin, and aspartame. Its proficiency in synthesis planning was also shown by its ability to identify and plan Suzuki cross-coupling reactions, an important class of carbon-carbon bond-forming reactions. This ability to plan syntheses autonomously marks a step forward for automation in chemical research and could make research operations considerably more efficient.

The system also highlights a tension between the efficiency and practicality of LLM-driven experimentation and its safety implications. The Intelligent Agent can conduct experiments and computations autonomously, which raises concerns about potential misuse, particularly the synthesis of controlled substances or chemical warfare agents. Its current ability to refuse certain requests, shown by its refusal to carry out harmful syntheses, points to the need for continued attention to ethics and safety in experimental AI applications.

Integrating LLMs into autonomous scientific research opens opportunities to improve experimental efficacy and precision across scientific domains. Nonetheless, as the paper concludes, the AI community needs to work together on comprehensive guardrails that govern LLM capabilities responsibly, particularly with respect to dual-use concerns.

In conclusion, this paper marks a significant step in autonomous scientific experimentation with AI: it demonstrates concrete applications and capabilities while acknowledging the need for stringent safety and ethical frameworks. LLM-based Intelligent Agents reflect the growing role of AI in science, with implications for future research methods and automated experimentation tools.