Overview of "Baize: An Open-Source Chat Model with Parameter-Efficient Tuning on Self-Chat Data"

Introduction

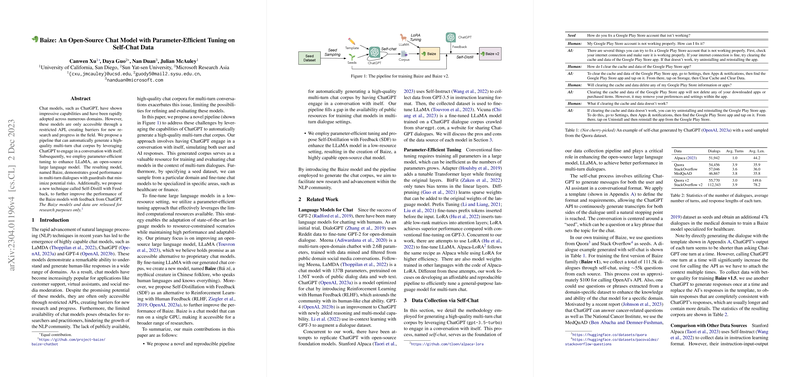

The paper presents Baize, an open-source chat model intended to make chat-model research more accessible to the NLP community. Because the strongest existing chat models are available only through restricted APIs, research on them is bottlenecked. Baize addresses this by having ChatGPT converse with itself to generate a multi-turn chat corpus, then using parameter-efficient tuning to adapt the LLaMA model on that corpus, yielding a capable open alternative to proprietary chat systems.

Methodology

The methodology has two stages: data collection and model training.

- Data Collection through Self-Chat:

  - The paper proposes a pipeline in which ChatGPT converses with itself to generate multi-turn chat data. A template prompts it to play both the user and the AI assistant, and each conversation is seeded with a question drawn from sources such as Quora and Stack Overflow.

  - The pipeline supports specialization: sampling domain-specific seeds yields a domain-specific corpus, as demonstrated by a healthcare-focused Baize model.

- Parameter-Efficient Tuning:

  - Baize fine-tunes LLaMA with Low-Rank Adaptation (LoRA). LoRA keeps the pretrained weights frozen and trains only small low-rank update matrices, which cuts the compute and memory needed and makes training viable on limited hardware.

  - The paper introduces "Self-Distill with Feedback" (SDF), a refinement method that uses ChatGPT's feedback on Baize's own responses to further improve the model at low computational cost, as an alternative to Reinforcement Learning with Human Feedback (RLHF).
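The self-chat step can be illustrated with a minimal sketch. The template wording, the `[Human]`/`[AI]` tags, and the names `build_prompt`, `parse_transcript`, and `self_chat` are assumptions made for this example, not the paper's exact prompt or code; `generate` stands in for any call to a chat model.

```python
import re

# Hypothetical template: one model is asked to write both sides of a
# conversation about a seed question. The wording is illustrative only.
TEMPLATE = (
    "The following is a conversation between a human and an AI assistant "
    "about the topic below. Human turns start with [Human] and AI turns "
    "start with [AI]. Write the complete transcript.\n"
    "Topic: {seed}\n"
    "[Human] "
)

# Matches one tagged turn, up to the next tag or the end of the text.
TURN = re.compile(r"\[(Human|AI)\]\s*(.*?)(?=\[(?:Human|AI)\]|\Z)", re.S)


def build_prompt(seed: str) -> str:
    """Fill the template with a seed question (e.g. from a Q&A site)."""
    return TEMPLATE.format(seed=seed.strip())


def parse_transcript(text: str) -> list[tuple[str, str]]:
    """Split a tagged transcript into (role, message) turns, dropping empty ones."""
    turns = [(m.group(1), m.group(2).strip()) for m in TURN.finditer(text)]
    return [(role, msg) for role, msg in turns if msg]


def self_chat(seed: str, generate) -> list[tuple[str, str]]:
    """Generate one multi-turn dialogue from a seed.

    `generate` is any callable mapping a prompt string to a completion string.
    """
    completion = generate(build_prompt(seed))
    # The prompt ends with an open "[Human] " tag, so restore it before parsing.
    return parse_transcript("[Human] " + completion)
```

Swapping the seed source (for example, medical questions instead of general Q&A) is all it takes to produce a domain-specific corpus under this scheme.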
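To make the LoRA idea concrete, here is a small dependency-free sketch of a linear layer with a low-rank update, plus a parameter count. It follows the standard LoRA formulation (effective weight `W + (alpha / r) * B @ A`, with `B` initialized to zero); the class name `LoRALinear`, the helper names, and the dimensions used are illustrative assumptions, not Baize's actual training code or hyperparameters.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Linear layer with a frozen weight W and a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weight, shape d_out x d_in
        # A gets a small random init, B starts at zero, so the update is zero at first.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scaling = alpha / rank

    def effective_weight(self):
        """Return W + scaling * (B @ A)."""
        delta = matmul(self.B, self.A)
        return [
            [w + self.scaling * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(self.weight, delta)
        ]

    def forward(self, x):
        """Apply the adapted layer to a vector x of length d_in."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]

    def trainable_params(self):
        """Count only the entries of A and B; W is never updated."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_counts(d_out, d_in, rank):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_out * d_in, rank * (d_in + d_out)


# Illustrative sizes: a 4096 x 4096 matrix with rank 8.
full, lora = lora_param_counts(4096, 4096, 8)  # (16777216, 65536)
```

With these illustrative sizes the low-rank update trains about 0.4% as many parameters as updating the full matrix, which is the source of the hardware savings described above.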

Experimental Results

Baize is compared with existing open chat models such as Alpaca and Vicuna and performs competitively. In particular, Baize v2 performs close to Vicuna-13B, which supports its use as a resource-efficient alternative.

- Response quality is scored by GPT-4, and performance on standard tasks is measured with the LM Evaluation Harness.

- Variants trained on specialized datasets show that Baize can be adapted to domains such as coding and healthcare.

Implications and Future Directions

The release of Baize and its dataset under research-friendly licenses fosters the development of open-source chat applications. The parameter-efficient model training and public availability encourage wider participation and innovation in NLP research.

Future work could improve the diversity and quality of the self-chat data to further strengthen Baize's capabilities. The paper also suggests that SDF is not tied to ChatGPT and could be applied with human feedback, which may lead to further refinements of LLMs.

Conclusion

This paper helps democratize chat model research through an accessible, efficient, and adaptable approach. By combining self-chat data generation with parameter-efficient tuning, Baize offers a valuable resource for further research and for applications across diverse domains. The same methods can support continued progress in NLP, in both research and practical deployment.