HuggingGPT: Integrating LLMs with AI Models

The research paper "HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face" proposes using an LLM such as ChatGPT to orchestrate the AI models hosted on Hugging Face, so that complex tasks spanning several domains and modalities can be solved by combining existing models. The central idea is to treat the LLM as a controller that manages expert models through a language-based interface. The authors frame this as a step toward artificial general intelligence.

Methodology

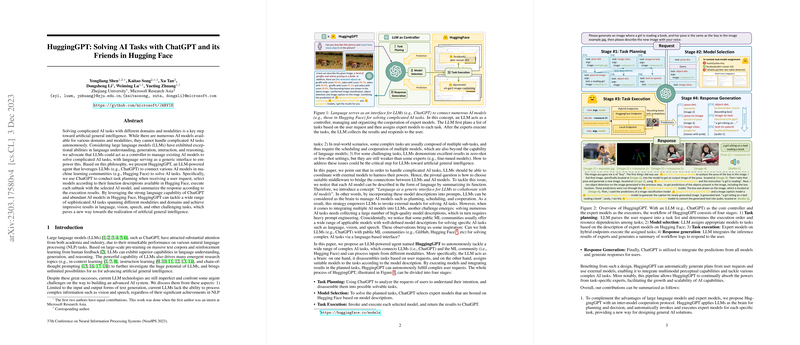

The authors propose HuggingGPT, an LLM-powered agent that handles an AI task by parsing the user request into subtasks, selecting an expert model for each subtask, running those models, and summarizing their outputs in a response to the user. The system is organized into four stages:

- Task Planning: HuggingGPT uses the LLM to decompose the user request into a sequence of tasks and to determine their dependencies and execution order. The prompt combines specification-based instruction (a fixed template that each parsed task must follow) with demonstration-based parsing (worked examples placed in the prompt) to guide the LLM.

- Model Selection: For each planned task, HuggingGPT first filters the models hosted on Hugging Face by task type, ranks the remaining candidates by download count, and keeps the top-K. The descriptions of these candidates are then placed in the prompt, and the LLM picks the most suitable model through an in-context task-model assignment mechanism.

- Task Execution: Each selected model is invoked with the appropriate arguments. The system handles resource dependencies dynamically, ensuring subtasks are executed in the correct order and with the necessary inputs from previous tasks.

- Response Generation: Finally, the LLM integrates findings from all tasks, forming a comprehensive response that addresses the initial user request.
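
The middle two stages can be illustrated with a minimal Python sketch. It is not the authors' implementation: the plan, the model catalogue, the `<resource>-N` placeholder convention, and every function name are assumptions made for illustration, and `run_model` is a stand-in that returns a string instead of calling a real model. The sketch only shows the filter-then-rank selection step and dependency-ordered execution, where a task's output is passed to the tasks that depend on it.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical output of the task-planning stage: each task has an id,
# a task type, the ids of the tasks it depends on, and its arguments.
PLAN = [
    {"id": 0, "task": "image-to-text", "dep": [], "args": {"image": "photo.jpg"}},
    {"id": 1, "task": "text-to-speech", "dep": [0], "args": {"text": "<resource>-0"}},
]

# Hypothetical model catalogue; names and download counts are made up.
CATALOGUE = [
    {"name": "captioner-small", "task": "image-to-text", "downloads": 1200},
    {"name": "captioner-large", "task": "image-to-text", "downloads": 9800},
    {"name": "tts-basic", "task": "text-to-speech", "downloads": 4300},
]


def select_models(task_type, catalogue, top_k=1):
    """Filter by task type, rank by downloads, keep the top-k candidates."""
    candidates = [m for m in catalogue if m["task"] == task_type]
    ranked = sorted(candidates, key=lambda m: m["downloads"], reverse=True)
    return ranked[:top_k]


def resolve_args(args, results):
    """Replace '<resource>-N' placeholders with the output of task N."""
    resolved = {}
    for key, value in args.items():
        if isinstance(value, str) and value.startswith("<resource>-"):
            resolved[key] = results[int(value.split("-", 1)[1])]
        else:
            resolved[key] = value
    return resolved


def run_model(model_name, args):
    """Stand-in for real inference: returns a string describing the call."""
    rendered = ", ".join(f"{k}={v}" for k, v in sorted(args.items()))
    return f"{model_name}({rendered})"


def execute_plan(plan, catalogue):
    """Run tasks in an order that respects their declared dependencies."""
    by_id = {t["id"]: t for t in plan}
    graph = {t["id"]: set(t["dep"]) for t in plan}  # node -> predecessors
    results = {}
    for task_id in TopologicalSorter(graph).static_order():
        task = by_id[task_id]
        model = select_models(task["task"], catalogue)[0]
        results[task_id] = run_model(model["name"], resolve_args(task["args"], results))
    return results


if __name__ == "__main__":
    for task_id, output in execute_plan(PLAN, CATALOGUE).items():
        print(task_id, output)
```

In this sketch the highest-ranked candidate is used directly. In the system described above, the top-K candidates and their descriptions would instead be handed to the LLM, which makes the final choice.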

Experimental Evaluation

The paper reports both qualitative and quantitative evaluations of HuggingGPT. Qualitative case studies cover language, vision, and speech tasks, including multi-turn dialogues and requests whose subtasks depend on each other across modalities. On the quantitative side, standard metrics such as precision, recall, and F1 are used to measure the quality of task planning. A human evaluation complements these automatic metrics and assesses whether the system's multi-modal responses are coherent and accurate.
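
To make the planning metrics concrete, the following sketch computes precision, recall, and F1 by comparing the set of task types an LLM predicted against a reference set. This set-based comparison is an assumption chosen for simplicity; the paper's exact evaluation protocol may differ, and the function name and example labels are hypothetical.

```python
def planning_scores(predicted, reference):
    """Set-based precision, recall, and F1 over predicted task types."""
    pred, ref = set(predicted), set(reference)
    true_positives = len(pred & ref)
    precision = true_positives / len(pred) if pred else 0.0
    recall = true_positives / len(ref) if ref else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # Illustrative labels only: the planner proposed one task too many.
    predicted = ["image-to-text", "text-to-speech", "object-detection"]
    reference = ["image-to-text", "text-to-speech"]
    p, r, f1 = planning_scores(predicted, reference)
    print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Here two of the three predicted tasks are correct and both reference tasks are found, so precision is 2/3, recall is 1, and F1 is 0.8.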

Implications and Future Research

HuggingGPT signals a shift toward pairing LLMs with specialized models, with the LLM acting as the central controller of a larger AI system. This approach promises better interoperability between AI components and extends the reach of LLMs beyond text. Future research may focus on improving task planning, expanding the range of supported tasks, and reducing the computational overhead that comes from calling the LLM several times per request. Better ways of collecting and managing the model descriptions published by AI communities could also improve model selection and integration.

In conclusion, HuggingGPT offers a compelling framework for leveraging the combined strengths of LLMs and domain-specific expert models, paving the way for more advanced and scalable AI solutions. This work lays a foundation that could inspire future developments in the pursuit of artificial general intelligence, providing a strategic pathway for integrating diverse AI capabilities.