Understanding MM-ReAct: A Framework for Multimodal Reasoning with ChatGPT

The paper "MM-ReAct: Prompting ChatGPT for Multimodal Reasoning and Action" introduces a system paradigm that integrates ChatGPT with a pool of vision experts to achieve multimodal reasoning and action. It targets advanced visual understanding tasks that may lie beyond the reach of existing vision and vision-language models.

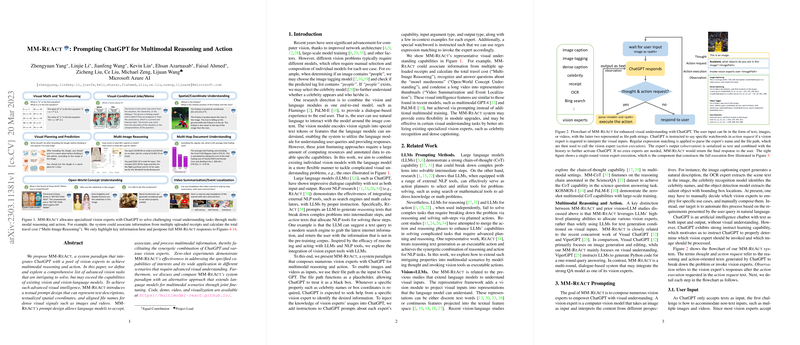

Framework and Methodology

MM-ReAct couples ChatGPT with a pool of vision models, called vision experts, to support image and video analysis. Because ChatGPT accepts only text, the paper describes a textual prompt design that represents visual signals in three forms: text descriptions, textualized spatial coordinates, and aligned file names that stand in for dense signals such as images and videos. This extends the interaction capabilities of LLMs into the visual domain while keeping the system modular, since experts can be added or swapped without retraining the language model.
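To make the prompt design more concrete, here is a minimal sketch of how an expert's output might be flattened into text that a language model can read. It is an illustration under stated assumptions, not the paper's actual prompt format: the `Detection` class, the `format_observation` function, the field layout, and the sample file name `photo.png` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """One object reported by a hypothetical detection expert."""
    label: str
    box: Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def format_observation(file_name: str, caption: str,
                       detections: List[Detection]) -> str:
    """Serialize visual signals as plain text.

    The three ingredients mirror the ones named above: a file name that
    stands in for the image, a text description, and textualized
    coordinates. The exact wording of each line is an assumption.
    """
    lines = [f"File: {file_name}", f"Caption: {caption}", "Objects:"]
    for det in detections:
        x1, y1, x2, y2 = det.box
        lines.append(f"- {det.label} at (x1={x1}, y1={y1}, x2={x2}, y2={y2})")
    return "\n".join(lines)


observation = format_observation(
    "photo.png",
    "a dog sitting next to a bicycle",
    [Detection("dog", (34, 120, 210, 330)),
     Detection("bicycle", (190, 80, 480, 340))],
)
print(observation)
```

Referring to the image by file name keeps the prompt short and lets later turns point back to the same image without resending any pixel data.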

A notable aspect of MM-ReAct is its "action request" mechanism. When a query requires visual interpretation, ChatGPT's output includes a request that names the vision expert to invoke and the visual content to analyze. The expert's result is returned as structured text, which ChatGPT processes to either request another expert or compose the final response to the user.
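The action request loop can be sketched as follows. This is a simplified illustration rather than the paper's implementation: the `ACTION: name(file)` syntax, the `EXPERTS` registry, the stub experts, and the `scripted_llm` function that stands in for ChatGPT are all assumptions made so the example runs without any external service.

```python
import re
from typing import Callable, Dict, List

# Hypothetical action syntax: "ACTION: <expert_name>(<file_name>)"
ACTION_PATTERN = re.compile(r"^ACTION:\s*(\w+)\((.+?)\)\s*$", re.MULTILINE)


def caption_expert(file_name: str) -> str:
    """Stub expert; a real one would run a captioning model."""
    return f"Caption for {file_name}: a dog sitting next to a bicycle"


def ocr_expert(file_name: str) -> str:
    """Stub expert; a real one would run an OCR model."""
    return f"Text found in {file_name}: (none)"


EXPERTS: Dict[str, Callable[[str], str]] = {
    "caption": caption_expert,
    "ocr": ocr_expert,
}


def scripted_llm(history: List[str]) -> str:
    """Stand-in for the language model: asks for a caption once, then answers."""
    if not any(turn.startswith("OBSERVATION:") for turn in history):
        return "ACTION: caption(photo.png)"
    return "FINAL: The image shows a dog next to a bicycle."


def run(query: str, llm: Callable[[List[str]], str], max_steps: int = 5) -> str:
    """Alternate between model replies and expert calls until a final answer."""
    history = [f"USER: {query}"]
    for _ in range(max_steps):
        reply = llm(history)
        history.append(reply)
        match = ACTION_PATTERN.search(reply)
        if match is None:
            return reply  # no action requested, so treat the reply as final
        name, file_name = match.group(1), match.group(2)
        expert = EXPERTS.get(name)
        if expert is None:
            observation = f"unknown expert '{name}'"
        else:
            observation = expert(file_name)
        # Feed the expert's text output back to the model as an observation.
        history.append(f"OBSERVATION: {observation}")
    return "FINAL: step limit reached"


answer = run("What is in photo.png?", scripted_llm)
print(answer)
```

The step limit is a design choice of this sketch: it keeps a model that never stops requesting actions from looping indefinitely.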

Experimental Results

The paper demonstrates MM-ReAct's capabilities through zero-shot experiments across various tasks, such as document understanding, video summarization, and open-world concept recognition. In these examples the system associates information across multiple images, performs spatial reasoning, and interprets complex visual scenarios without any additional multimodal training. The results suggest that MM-ReAct can avoid the substantial computational resources and annotated data that joint fine-tuning methods often demand.

Comparative Analysis

The paper compares MM-ReAct with existing systems such as PaLM-E and Visual ChatGPT. PaLM-E blends modalities through joint training, whereas MM-ReAct reaches comparable functionality through prompting and the task-specific integration of specialized models. Visual ChatGPT likewise composes ChatGPT with vision models through prompting rather than training. The contrast with jointly trained models underlines MM-ReAct's flexibility and resource efficiency, positioning it as a practical alternative among multimodal AI systems.

Implications and Speculative Future Directions

MM-ReAct's framework carries substantial implications for both practical applications and theoretical advancements in AI. Practically, it offers a scalable method to extend the utility of LLMs into visual domains, potentially benefiting fields like content analysis, augmented reality, and human-computer interaction. Theoretically, this approach could lead to further explorations into the modular composition of AI systems, prompting new designs in flexible AI frameworks.

Looking forward, the paper suggests integrating further modalities, such as audio, which would expand the system's capabilities incrementally. Because the architecture is open and modular, new or improved experts can be added over time without retraining, an adaptability that future AI-driven solutions are likely to need.

In conclusion, "MM-ReAct" presents an approach to multimodal reasoning that bridges the capabilities of LLMs with specialized vision tools through prompting alone. The paper offers a useful foundation for further exploration of modular multimodal AI systems.