Analysis of Detector Performance and Total Variation in AI Text Generation

The paper examines the detection of AI-generated text, focusing on a framework for analyzing detector performance and on how the total variation (TV) distance constrains the area under the receiver operating characteristic curve (AUROC). Detecting AI-generated text is a nuanced problem: a detector must reliably distinguish human-written content from machine-generated content. The paper builds on the premise that total variation, a statistical measure of the distance between two probability distributions, sets an upper limit on what any detector can achieve.

Theoretical Contributions

The authors derive an upper bound on the AUROC of any detector $D$ in terms of the total variation between the machine-generated text distribution ($\mathcal{M}$) and the human text distribution ($\mathcal{H}$). They prove that the AUROC is bounded above by:

$$\mathrm{AUROC}(D) \le \frac{1}{2} + \mathrm{TV}(\mathcal{M}, \mathcal{H}) - \frac{\mathrm{TV}(\mathcal{M}, \mathcal{H})^2}{2}$$

This result is the theoretical cornerstone of the paper. It underscores an inherent limitation of text detectors: as the overlap between the two distributions grows, that is, as the TV distance shrinks, the best achievable AUROC falls toward 1/2, the value attained by a random classifier.
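To get a feel for how quickly the ceiling drops, the following minimal Python sketch evaluates the bound for a few TV values. It assumes the bound has the form 1/2 + TV - TV^2/2; the function name `auroc_upper_bound` is illustrative and does not come from the paper.

```python
def auroc_upper_bound(tv: float) -> float:
    """Upper bound on AUROC for a given total variation distance.

    Assumes the bound has the form 1/2 + TV - TV^2 / 2.
    """
    if not 0.0 <= tv <= 1.0:
        raise ValueError("total variation must lie in [0, 1]")
    return 0.5 + tv - tv ** 2 / 2


if __name__ == "__main__":
    # Illustrative TV values only; these are not results from the paper.
    for tv in (0.0, 0.1, 0.5, 1.0):
        print(f"TV = {tv:.1f} -> AUROC <= {auroc_upper_bound(tv):.3f}")
```

At TV = 0 the bound equals 0.5 (no detector beats chance), and at TV = 1 it equals 1.0 (perfect detection is not ruled out).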

Experimental Analysis with GPT-3 Models

To validate their theoretical findings empirically, the authors ran experiments with GPT-3 models of several sizes: Ada, Babbage, and Curie. The models' outputs were compared against the WebText and ArXiv datasets to estimate the total variation and, in turn, to analyze detection performance across varying text lengths. The results indicate that the most capable model, Curie, had lower estimated total variation than less powerful models such as Ada, suggesting that its outputs were closer to human text.

On a more specialized dataset, ArXiv abstracts, the total variation again decreased for more capable models, supporting the idea that detecting AI-generated text becomes harder as LLMs improve.
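The link between total variation and detection performance can be illustrated on a toy problem. The sketch below is not the authors' estimation procedure, which this summary does not describe. It assumes two small, fully known discrete distributions over three hypothetical "texts", computes their TV distance exactly, and checks that a likelihood-ratio detector stays under the bound 1/2 + TV - TV^2/2. All names and probabilities are made up for illustration.

```python
from itertools import product


def total_variation(m: dict, h: dict) -> float:
    """TV distance between two discrete distributions given as dicts."""
    keys = set(m) | set(h)
    return 0.5 * sum(abs(m.get(k, 0.0) - h.get(k, 0.0)) for k in keys)


def exact_auroc(score, m: dict, h: dict) -> float:
    """Probability that a machine sample outscores a human sample.

    Ties count as one half, the usual AUROC convention.
    """
    auc = 0.0
    for (xm, pm), (xh, ph) in product(m.items(), h.items()):
        if score(xm) > score(xh):
            auc += pm * ph
        elif score(xm) == score(xh):
            auc += 0.5 * pm * ph
    return auc


# Hypothetical distributions over three outcomes (not data from the paper).
machine = {"a": 0.5, "b": 0.3, "c": 0.2}
human = {"a": 0.2, "b": 0.3, "c": 0.5}

tv = total_variation(machine, human)
# Detector: score each outcome by its likelihood ratio machine / human.
auc = exact_auroc(lambda x: machine[x] / human[x], machine, human)
bound = 0.5 + tv - tv ** 2 / 2

print(f"TV = {tv:.3f}, AUROC = {auc:.3f}, bound = {bound:.3f}")
```

Moving the two toy distributions closer together lowers both the TV distance and the detector's AUROC, which mirrors the trend reported for the more capable models.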

Implications for AI Text Detection

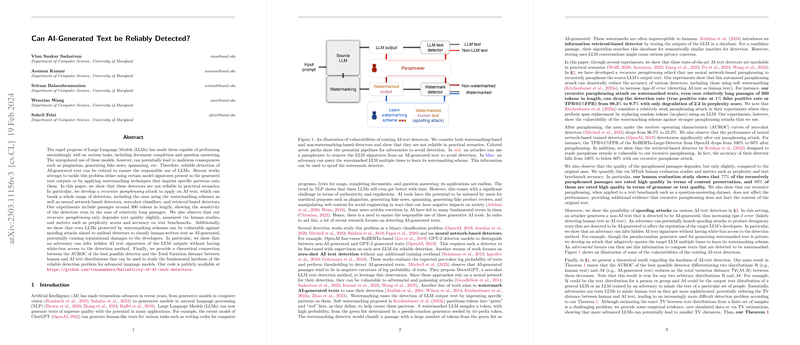

The implications of this research extend to the design of robust AI-text detectors, which must operate within the mathematical limits the paper establishes. The bound on AUROC implies a fundamental difficulty in distinguishing the outputs of sophisticated LLMs, motivating continued work on detection methods such as neural network-based detectors, zero-shot approaches, and watermarking. The growing similarity between human and AI text also underlines the need for new detection strategies, since current methods may falter as LLMs advance.

Future Considerations

The paper also anticipates future advances both in the capability of generative models and in adversarial techniques for evading detectors. Improved paraphrasing models and prompts chosen to elicit low-entropy outputs could pose significant threats to detector accuracy. Future research could therefore focus on adaptive detection methods that anticipate and counteract these evasion techniques.

In conclusion, this paper provides a mathematical and empirical framework for understanding the limitations of AI-generated text detectors in the presence of high-performing LLMs. Its contributions highlight the critical intersection of statistical metrics and practical AI deployment, offering valuable insights for advancing detection methodologies in response to ever-evolving generative technologies.