Introduction

The proliferation of large language models (LLMs) such as GPT-3.5 Turbo has transformed many industries by automating content creation. However, it also makes it harder to tell human-written text from machine-generated text, and the integrity of digital communication depends on our ability to make that distinction.

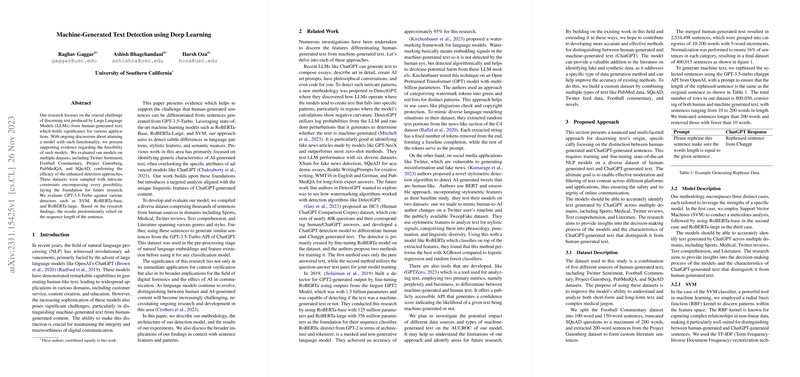

Related Work

Previous work on machine-generated text detection has focused on identifying general characteristics of AI-generated content. Some researchers have proposed watermarking techniques that embed detectable signals in LLM output. Others have applied stylometric detection, analyzing linguistic features to distinguish AI-produced tweets from human-written ones. Detection tools such as GPTZero use metrics like perplexity and burstiness to flag machine-generated text.
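Perplexity is the exponential of a text's average negative log-likelihood under a language model. The sketch below computes it from per-token log-probabilities, which are assumed to come from some scoring model not shown here, and uses the spread of per-sentence perplexities as a burstiness proxy. GPTZero's exact formulas are not described in this summary, so the burstiness definition is an assumption for illustration.

```python
import math
from statistics import pstdev


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood per token), natural log."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def burstiness(sentence_logprobs: list[list[float]]) -> float:
    """Assumed burstiness proxy (not GPTZero's published formula):
    population standard deviation of the per-sentence perplexities."""
    ppls = [perplexity(lp) for lp in sentence_logprobs]
    return pstdev(ppls)


if __name__ == "__main__":
    # Two sentences whose tokens get probability 0.5 and 0.25 respectively.
    sents = [[math.log(0.5)] * 3, [math.log(0.25)] * 3]
    print(perplexity(sents[0]))  # ≈ 2.0
    print(burstiness(sents))     # ≈ 1.0 (perplexities 2 and 4)
```

Human writing tends to mix predictable and surprising sentences, so higher burstiness is commonly read as weak evidence of human authorship; any decision threshold would still need calibration on labeled data.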

Proposed Approach

The paper proposes an approach to discriminating between human-written and LLM-generated sentences. The researchers build a dataset of sentences drawn from a broad range of domains and train three classifiers on it: a support vector machine (SVM) baseline and the transformer models RoBERTa-Base and RoBERTa-Large. The goal is to identify subtle language patterns characteristic of AI-generated content.
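One way to represent such a dataset is as sentence records tagged with a source domain and a human/LLM label. The sketch below uses a hypothetical `Example` record and `stratified_split` helper (neither comes from the paper) to split the data so that every domain and label combination appears in both the training and test sets in about the same proportion.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    text: str
    domain: str  # hypothetical field, e.g. "news" or "reviews"
    label: int   # 1 = LLM-generated, 0 = human-written


def stratified_split(examples, test_fraction=0.2, seed=0):
    """Shuffle each (domain, label) group independently and move roughly
    test_fraction of it to the test set, so the test set mirrors the mix."""
    groups = defaultdict(list)
    for ex in examples:
        groups[(ex.domain, ex.label)].append(ex)
    rng = random.Random(seed)
    train, test = [], []
    for key in sorted(groups):  # sorted keys keep the split reproducible
        items = groups[key][:]
        rng.shuffle(items)
        n_test = round(len(items) * test_fraction)
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

Stratifying by domain as well as label keeps a classifier from being evaluated on a domain it never saw during training, which would confound domain shift with detection ability.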

Model Description

The SVM uses a radial basis function (RBF) kernel over TF-IDF feature representations of the text. It provides a solid baseline but is outperformed by the RoBERTa-based architectures on more complex inputs. RoBERTa-Base and RoBERTa-Large, each extended with additional layers on top of the pretrained encoder, draw on their contextual representations of language to outperform the SVM, especially on longer sentences.
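The two ingredients of the SVM baseline can be sketched in plain Python: TF-IDF vectors and the RBF kernel K(x, y) = exp(-γ‖x − y‖²) that the SVM evaluates between them. This is a minimal sketch assuming whitespace tokenization; the smoothed idf and L2 row normalization follow scikit-learn's `TfidfVectorizer` defaults, and `gamma=1.0` is an arbitrary illustrative value, not a setting from the paper.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """TF-IDF with smoothed idf, idf(t) = ln((1 + n) / (1 + df(t))) + 1,
    and L2-normalised rows. Tokenisation is plain lowercase whitespace split."""
    tokenised = [doc.lower().split() for doc in docs]
    n = len(tokenised)
    df = Counter(term for toks in tokenised for term in set(toks))
    vocab = sorted(df)
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1 for t in vocab}
    vectors = []
    for toks in tokenised:
        tf = Counter(toks)
        row = [tf[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(v * v for v in row)) or 1.0  # avoid dividing by 0
        vectors.append([v / norm for v in row])
    return vocab, vectors


def rbf_kernel(x, y, gamma=1.0):
    """RBF kernel: exp(-gamma * squared Euclidean distance)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)
```

In practice the vectors would be passed to an SVM solver such as scikit-learn's `SVC(kernel="rbf")`, which evaluates this kernel internally. Writing it out shows why the baseline struggles as sentences get longer: similarity depends only on weighted word overlap, not on word order or context.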

Experimental Setup

To analyze model performance in fine detail, the paper partitions the dataset into sentence-length ranges. For each model and range, it reports the area under the receiver operating characteristic curve (AUC-ROC), showing how well each model copes with text of varying length and complexity.
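Per-range evaluation amounts to grouping sentences by length and computing AUC-ROC within each group. The sketch below assumes each classifier outputs a score where higher means "more likely LLM-generated"; the `BUCKETS` boundaries are hypothetical, since the exact ranges are not listed here. AUC is computed as the probability that a randomly chosen LLM-generated sentence outscores a randomly chosen human one (ties count half), which equals the area under the ROC curve.

```python
from collections import defaultdict


def roc_auc(labels, scores):
    """AUC-ROC as the normalised Mann-Whitney U statistic."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs both classes")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical length ranges in words (inclusive); None means no upper bound.
BUCKETS = [(1, 10), (11, 20), (21, 40), (41, None)]


def bucket_of(sentence):
    n = len(sentence.split())
    for lo, hi in BUCKETS:
        if n >= lo and (hi is None or n <= hi):
            return (lo, hi)
    return None  # empty sentence


def auc_by_length(sentences, labels, scores):
    """Return {bucket: AUC}, skipping buckets that lack one of the classes."""
    grouped = defaultdict(lambda: ([], []))
    for sent, y, s in zip(sentences, labels, scores):
        b = bucket_of(sent)
        if b is not None:
            grouped[b][0].append(y)
            grouped[b][1].append(s)
    return {b: roc_auc(ys, ss) for b, (ys, ss) in grouped.items()
            if 0 < sum(ys) < len(ys)}
```

The pairwise loop is quadratic in group size, which is fine for illustration; on large test sets a library routine such as scikit-learn's `roc_auc_score` is the usual choice. Ranges containing only one class are skipped because AUC is undefined there.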

Results and Discussion

The experiments show that the RoBERTa models are particularly effective, with RoBERTa-Large performing best on complex and longer sentences. The SVM performs well but does not match the RoBERTa models. These findings support the effectiveness of the proposed models for determining whether a text was written by a human or generated by an LLM.

Conclusion and Future Work

The paper demonstrates that human-written text can be distinguished from ChatGPT-generated text. Future work could use a broader dataset and more LLMs to strengthen detection. Other methods, such as zero-shot or one-shot learning, might also yield more resource-efficient classifiers.

In summary, this investigation into machine-generated text detection advances our understanding of how deep learning can help preserve the authenticity of digital communication as LLMs become increasingly sophisticated.