Muse: An Efficient Text-To-Image Transformer Model for High-Fidelity Image Generation

Introduction

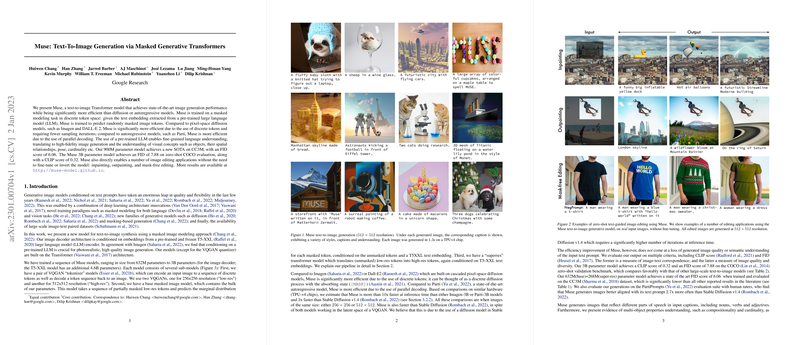

Recent progress in text-to-image synthesis has come from pairing deep generative architectures with new training paradigms. Muse, introduced by researchers at Google, applies masked generative transformers to text-to-image generation. It uses a pre-trained large language model (LLM), specifically T5-XXL, to extract text embeddings, which helps it generate photorealistic images that reflect fine-grained semantics in the prompt. The architecture is Transformer-based, and the Muse family spans models from 632M to 3B parameters, showing that the approach scales toward high-resolution image generation.

Key Contributions and Architectural Overview

Muse represents images as sequences of discrete tokens, produced by VQGAN tokenizers, and trains a Transformer to predict masked image tokens. Because many tokens can be predicted in parallel, this design improves inference efficiency relative to other contemporary models while keeping image quality competitive. The model consists of three primary components:

- VQGAN Tokenizers: These play a pivotal role in encoding and decoding images to and from sequences of discrete tokens, enabling the model to manipulate images in a tokenized form that captures semantic and stylistic nuances.

- Base Masked Image Model: It predicts the distribution of masked image tokens based on unmasked tokens and text embeddings from T5-XXL, laying the groundwork for generating initial low-resolution image predictions.

- Super-Resolution Transformer Model: This model upsamples the tokenized image representations to higher resolutions, enriching the generated images with finer details.
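The tokenization step in the first component can be illustrated with a toy vector-quantization lookup. This is a minimal sketch, not Muse's tokenizer: the codebook, the latent vectors, and the function names `quantize` and `dequantize` are hypothetical, and a real VQGAN learns its encoder, decoder, and codebook jointly.

```python
def quantize(vectors, codebook):
    """Map each latent vector to the index of its nearest codebook entry."""
    tokens = []
    for v in vectors:
        # Squared Euclidean distance from this latent to every codebook entry.
        dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook]
        tokens.append(dists.index(min(dists)))
    return tokens


def dequantize(tokens, codebook):
    """Replace each token id with its codebook vector (the decoder's input)."""
    return [codebook[t] for t in tokens]


# Hypothetical 4-entry codebook of 2-dimensional vectors.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Pretend an encoder produced a 2x2 grid of latents, flattened row by row.
latents = [[0.9, 0.1], [0.1, 0.2], [0.8, 0.95], [0.2, 0.7]]

tokens = quantize(latents, codebook)
reconstructed = dequantize(tokens, codebook)
```

The image is then handled entirely as the integer sequence `tokens`, which is what the masked Transformer predicts.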

Muse combines several techniques: variable masking rates during training, classifier-free guidance to balance diversity and fidelity, and iterative parallel decoding at inference time, which notably reduces the number of decoding steps required.
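The interplay of these techniques can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not the paper's implementation: `toy_model` is a hypothetical stand-in that returns random logits, the mask id and guidance scale are arbitrary, a cosine schedule is assumed for the masking ratio, and predictions are chosen greedily, whereas a real system would sample.

```python
import math
import random

MASK = -1  # hypothetical id for the special mask token


def cosine_mask_ratio(progress):
    """Fraction of tokens left masked at a given progress in [0, 1]."""
    return math.cos(0.5 * math.pi * progress)


def guided_logits(cond, uncond, scale):
    """Classifier-free guidance: move conditional logits away from unconditional ones."""
    return [(1 + scale) * c - scale * u for c, u in zip(cond, uncond)]


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_model(tokens, vocab_size, rng, conditional):
    """Stand-in for the Transformer: random logits per position (inputs are ignored)."""
    return [[rng.gauss(0, 1) for _ in range(vocab_size)] for _ in tokens]


def parallel_decode(num_tokens, vocab_size, steps, scale=3.0, seed=0):
    rng = random.Random(seed)
    tokens = [MASK] * num_tokens
    for step in range(steps):
        cond = toy_model(tokens, vocab_size, rng, conditional=True)
        uncond = toy_model(tokens, vocab_size, rng, conditional=False)
        # Predict every still-masked position in parallel.
        candidates = {}
        for i, tok in enumerate(tokens):
            if tok != MASK:
                continue
            probs = softmax(guided_logits(cond[i], uncond[i], scale))
            best = max(range(vocab_size), key=probs.__getitem__)
            candidates[i] = (probs[best], best)
        # The schedule says how many tokens stay masked after this step.
        keep_masked = math.floor(cosine_mask_ratio((step + 1) / steps) * num_tokens)
        num_to_fix = max(len(candidates) - keep_masked, 0)
        # Commit the most confident predictions; the rest stay masked.
        ranked = sorted(candidates, key=lambda i: candidates[i][0], reverse=True)
        for i in ranked[:num_to_fix]:
            tokens[i] = candidates[i][1]
    return tokens


decoded = parallel_decode(num_tokens=16, vocab_size=8, steps=4)
```

With 16 tokens and 4 steps, the whole grid is filled in 4 forward passes rather than 16, which is the source of the speedup over token-by-token autoregressive decoding.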

Evaluation and Results

Muse achieved state-of-the-art performance on the CC3M dataset, where its 900M-parameter model reached an FID score of 6.06 (lower is better). It is also reported to be faster at inference than models such as Imagen and DALL-E 2 while producing images of comparable quality. On the COCO dataset, evaluated in a zero-shot setting, Muse likewise attains competitive FID and CLIP scores.
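For readers unfamiliar with the metric, the standard definition of the Fréchet Inception Distance is given below. The symbols are notation chosen here rather than taken from the source: $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ denote the mean and covariance of Inception-network features for real and generated images, respectively.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

The score is zero when the two feature distributions have identical means and covariances, so lower values indicate generated images that are statistically closer to real ones.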

Practical Implications and Future Developments

Beyond image generation, Muse supports image editing applications such as inpainting, outpainting, and mask-free editing. These follow directly from its masked generative mechanism and require no additional tuning, which broadens the model's practical utility in creative and design contexts.
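One way to see why inpainting needs no extra training: an edit region in pixel space can be mapped to the tokens that cover it, and those tokens can be masked and re-predicted by the same model. The sketch below shows only the masking step, under stated assumptions: the grid size, downsampling factor, mask id, and the helper `mask_tokens_in_box` are all hypothetical.

```python
MASK = -1  # hypothetical id for the special mask token


def mask_tokens_in_box(tokens, grid_size, box, downsample):
    """Mask every token whose image patch overlaps a pixel-space box.

    tokens: flat row-major list of grid_size * grid_size token ids.
    box: (left, top, right, bottom) in pixels, right and bottom exclusive.
    downsample: number of pixels covered by one token along each axis.
    """
    left, top, right, bottom = box
    col0, row0 = left // downsample, top // downsample
    col1 = min((right - 1) // downsample, grid_size - 1)
    row1 = min((bottom - 1) // downsample, grid_size - 1)
    out = list(tokens)  # leave the caller's list untouched
    for row in range(row0, row1 + 1):
        for col in range(col0, col1 + 1):
            out[row * grid_size + col] = MASK
    return out


# Toy 4x4 token grid for a 64x64 image, 16 pixels per token.
tokens = list(range(16))
masked = mask_tokens_in_box(tokens, grid_size=4, box=(16, 16, 48, 40), downsample=16)
masked_positions = [i for i, t in enumerate(masked) if t == MASK]
```

The masked grid, together with a text prompt, would then be passed to the usual decoding loop, which fills in only the masked positions while conditioning on the untouched tokens.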

Looking ahead, Muse's results open avenues for further work on the efficiency of text-to-image models. Its token-based representation and masked modeling strategy offer a reference point for future research in the field. The model's zero-shot image editing abilities also suggest potential for more interactive, user-driven applications.

Conclusion

In summary, Muse advances text-to-image synthesis through its use of a pre-trained LLM for text understanding, its efficient and scalable Transformer-based architecture, and its strong performance in image generation and editing tasks. As the field evolves, the principles demonstrated by Muse are likely to inform future efforts to bridge textual descriptions and visual representations.