ProgPrompt: Generating Situated Robot Task Plans Using LLMs

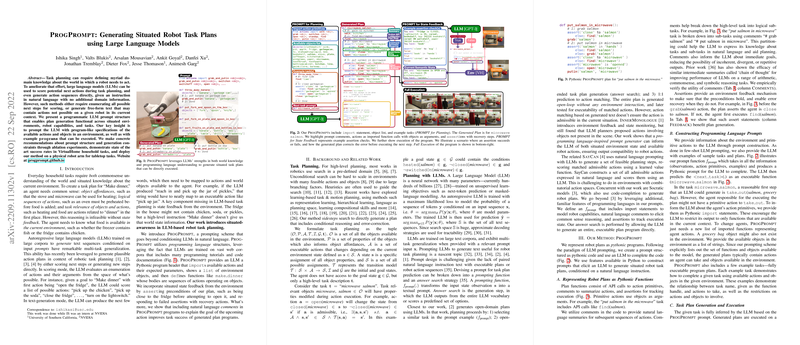

In the paper "ProgPrompt: Generating Situated Robot Task Plans Using LLMs," the authors introduce a prompting method for robot task planning with large language models (LLMs). Instead of describing the task only in free-form text, ProgPrompt structures the prompt as a program. This improves the model's ability to generate action sequences that a robot can actually execute in its current (situated) environment.

Overview of the Method

ProgPrompt presents the LLM with a Python-style program to guide the generation of robot task plans. The prompt combines import statements that list the robot's available actions, a list of the objects present in the environment, and a few example tasks written as Python functions. The new task is then given as a function header for the model to complete. This lets the system draw on both the model's understanding of natural language and its ability to read and write code, bridging the gap between abstract task instructions and concrete, executable plans.
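The prompt layout described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' code: the `build_progprompt` helper, the `actions` module name, and the specific action and object names are hypothetical placeholders chosen to mirror the structure (imports, object list, example programs, then an open function header for the new task).

```python
from typing import List


def build_progprompt(actions: List[str], objects: List[str],
                     examples: List[str], task: str) -> str:
    """Assemble a ProgPrompt-style prompt (hypothetical helper, for illustration)."""
    # 1. Import statement listing the actions the robot can perform.
    lines = [f"from actions import {', '.join(actions)}", ""]
    # 2. The objects available in the current scene.
    lines += [f"objects = {objects!r}", ""]
    # 3. Example tasks written as Python functions.
    for example in examples:
        lines += [example.rstrip(), ""]
    # 4. The new task as an open function header for the LLM to complete.
    lines.append(f"def {task.lower().replace(' ', '_')}():")
    return "\n".join(lines)


# A hypothetical example program in the spirit of the paper's prompts.
EXAMPLE = """def throw_away_lime():
    # 1: find lime
    walk('kitchen')
    find('lime')
    grab('lime')
    # 2: put lime in trash
    find('garbagecan')
    putin('lime', 'garbagecan')"""

prompt = build_progprompt(
    actions=["walk", "find", "grab", "putin", "open", "switchon"],
    objects=["kitchen", "lime", "garbagecan", "microwave", "salmon"],
    examples=[EXAMPLE],
    task="microwave salmon",
)
print(prompt)
```

The returned string ends at an unfinished function header, so an LLM asked to continue the text is nudged to write the body of `microwave_salmon` as a sequence of calls to the imported actions on the listed objects.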

Experimental Evaluation

The research evaluates ProgPrompt in both simulated and physical environments. In VirtualHome, a simulator for household tasks, ProgPrompt generates plans that are more often executable and appropriate to the current scene than those of prior prompting methods. The paper reports success rate (SR), executability (Exec), and goal conditions recall (GCR), and ProgPrompt outperforms the baselines it is compared against on these metrics.
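As a rough illustration of how the three metrics relate, the sketch below computes them from per-episode records. The `EpisodeResult` fields and the tuple encoding of goal conditions are assumptions made for this example; the definitions follow our reading of the paper (Exec as the fraction of plan actions that execute, GCR as the fraction of task-relevant goal conditions met in the final state, SR as the fraction of episodes meeting all of them).

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

Condition = Tuple[str, ...]  # hypothetical encoding, e.g. ("salmon", "inside", "microwave")


@dataclass
class EpisodeResult:
    """Hypothetical record of one plan execution."""
    num_actions: int                 # actions in the generated plan
    num_executable: int              # actions the environment could execute
    goal_conditions: Set[Condition]  # task-relevant goal conditions (ground truth)
    final_state: Set[Condition]      # conditions that hold after execution


def executability(ep: EpisodeResult) -> float:
    # Fraction of plan actions that were executable.
    return ep.num_executable / ep.num_actions if ep.num_actions else 0.0


def goal_condition_recall(ep: EpisodeResult) -> float:
    # One minus the fraction of goal conditions missing from the final state.
    missing = ep.goal_conditions - ep.final_state
    return 1.0 - len(missing) / len(ep.goal_conditions)


def success(ep: EpisodeResult) -> bool:
    # Success only if every goal condition holds at the end.
    return ep.goal_conditions <= ep.final_state


def summarize(episodes: List[EpisodeResult]) -> Dict[str, float]:
    n = len(episodes)
    return {
        "SR": sum(success(e) for e in episodes) / n,
        "Exec": sum(executability(e) for e in episodes) / n,
        "GCR": sum(goal_condition_recall(e) for e in episodes) / n,
    }


episodes = [
    EpisodeResult(5, 5,
                  {("salmon", "inside", "microwave"), ("microwave", "on")},
                  {("salmon", "inside", "microwave"), ("microwave", "on")}),
    EpisodeResult(4, 3,
                  {("lime", "inside", "garbagecan"), ("garbagecan", "closed")},
                  {("lime", "inside", "garbagecan")}),
]
print(summarize(episodes))  # {'SR': 0.5, 'Exec': 0.875, 'GCR': 0.75}
```

Because SR requires every goal condition, a plan can score reasonably on Exec and GCR while still failing the task, as the second (made-up) episode does here.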

On a physical Franka Emika Panda robot arm, ProgPrompt successfully grounds LLM-generated plans in real-world tasks. Notably, it maintains high success rates even when distractor objects are present in the scene, demonstrating robustness to environmental variability.

Key Insights and Contributions

- Prompt Structure: Code-like prompts guide the LLM to decompose a task and sequence its actions, turning a high-level task description into a detailed, executable plan. Comments mark the high-level sub-steps of a task, and assertions check preconditions (for example, that the robot is close to an object before grabbing it), triggering recovery actions when a check fails.

- Enhancement of LLM Capabilities: Because LLMs understand both natural language and programming syntax, framing task planning as code completion lets ProgPrompt draw on both abilities to produce more coherent and robust plans.

- Generalization Across Environments: ProgPrompt can be adapted to new environments and tasks without significant re-engineering of the prompting mechanism, as the evaluations in different virtual environments and on a real-world robot show.
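To make the comment and assertion pattern concrete, the sketch below renders a task program in runnable Python. ProgPrompt's own prompts use a pseudo-Python `assert(...)` followed by an `else:` recovery step; here a hypothetical `check` helper plays that role, and `ToyRobot` is a minimal stand-in for a simulator or robot API, not an interface from the paper.

```python
class ToyRobot:
    """Hypothetical stand-in for a robot/simulator API (not from the paper)."""

    def __init__(self):
        self.close_to = None  # object or place the robot is currently next to
        self.holding = None   # object currently in the gripper
        self.trace = []       # executed actions, in order

    def walk(self, place):
        self.trace.append(f"walk({place})")
        self.close_to = place

    def find(self, obj):
        self.trace.append(f"find({obj})")
        self.close_to = obj

    def grab(self, obj):
        # Precondition: the robot must be next to the object.
        if self.close_to != obj:
            raise RuntimeError(f"grab({obj}) not executable: not close to {obj}")
        self.trace.append(f"grab({obj})")
        self.holding = obj

    def putin(self, obj, container):
        # Preconditions: holding the object and next to the container.
        if self.holding != obj or self.close_to != container:
            raise RuntimeError(f"putin({obj}, {container}) not executable")
        self.trace.append(f"putin({obj}, {container})")
        self.holding = None


def check(condition, recovery):
    """Hypothetical rendering of the assert/else idiom:
    if the precondition fails, run the recovery action instead of aborting."""
    if not condition():
        recovery()


def throw_away_lime(robot):
    # 1: find lime
    robot.walk("kitchen")
    check(lambda: robot.close_to == "lime", lambda: robot.find("lime"))
    robot.grab("lime")
    # 2: put lime in trash
    check(lambda: robot.close_to == "garbagecan", lambda: robot.find("garbagecan"))
    robot.putin("lime", "garbagecan")


robot = ToyRobot()
throw_away_lime(robot)
print(robot.trace)
# ['walk(kitchen)', 'find(lime)', 'grab(lime)', 'find(garbagecan)', 'putin(lime, garbagecan)']
```

Without the `check` calls, `grab("lime")` would raise because the toy robot is only at the kitchen; the assertion-triggered `find` is what keeps this plan executable.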

Implications and Future Directions

The implications of ProgPrompt are both practical and theoretical. Practically, the method offers a scalable approach to robot task planning that can be adapted to different environments and robots, largely by changing the actions and objects listed in the prompt. Theoretically, it points toward tighter integration of LLMs with robotic systems, for example by using richer programming constructs to express more complex task requirements.

Future research could incorporate real-valued measurements, more complex control flow, and other programming features to improve the precision and reliability of generated plans. Comparing additional LLMs within this framework, beyond the Codex and GPT-3 models evaluated in the paper, may also yield further performance gains.

In conclusion, ProgPrompt shows that framing robot task planning as program completion is a simple and effective way to obtain situated, executable plans from LLMs, both in simulation and on a physical robot.