Integrating LLMs with Answer Set Programming for Enhanced Robotic Task Planning

Introduction

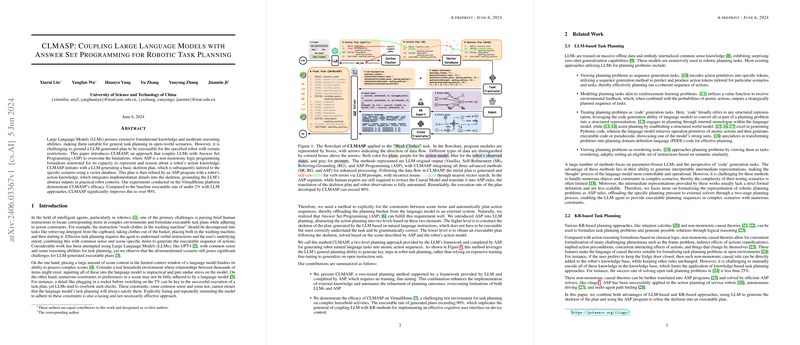

The paper "CLMASP: Coupling LLMs with Answer Set Programming for Robotic Task Planning" addresses robotic task planning in complex environments, where traditional planning techniques often struggle with the number and variety of constraints. It proposes CLMASP, an integrated framework that uses large language models (LLMs) to draft task plans and Answer Set Programming (ASP) to refine them into executable plans for robots operating in settings such as households.

Methodological Overview

The approach starts from a natural language task description, from which an LLM such as GPT-3.5 or GPT-4 produces an initial plan. This preliminary "skeleton plan" captures the high-level steps needed to complete the task but lacks the detail required for execution under the environment's specific constraints and state. ASP then refines the skeleton plan into an executable one.
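To make the gap between a skeleton plan and an executable plan concrete, here is a minimal sketch in Python. The action names, the `(verb, object)` format, and the precondition rules are hypothetical and chosen only for illustration; they are not taken from the paper or from any simulator.

```python
from typing import List, Set, Tuple

Action = Tuple[str, str]  # (verb, object); hypothetical format for illustration


def first_failure(plan: List[Action], initially_closed: Set[str]) -> int:
    """Return the index of the first action whose precondition fails, or -1."""
    closed = set(initially_closed)  # containers that are currently closed
    near = None                     # object the robot is standing next to
    holding: Set[str] = set()       # objects currently held
    for i, (verb, obj) in enumerate(plan):
        if verb == "walk":
            near = obj
        elif verb == "open":
            if near != obj:
                return i            # must be next to the container
            closed.discard(obj)
        elif verb == "grab":
            if near != obj:
                return i            # must be next to the object
            holding.add(obj)
        elif verb == "putin":
            # obj is the container: need something in hand, be next to it,
            # and the container must be open.
            if not holding or near != obj or obj in closed:
                return i
            holding.clear()
        else:
            return i                # unknown action
    return -1


# A skeleton plan names only the high-level steps.
skeleton = [("grab", "milk"), ("putin", "fridge")]
# A refined plan adds the steps the preconditions demand.
refined = [
    ("walk", "milk"), ("grab", "milk"),
    ("walk", "fridge"), ("open", "fridge"), ("putin", "fridge"),
]

print(first_failure(skeleton, {"fridge"}))  # 0: cannot grab before walking
print(first_failure(refined, {"fridge"}))   # -1: every precondition holds
```

In this toy setting the skeleton plan fails at its first step, while the refined plan runs through. Filling in such missing steps is the role the refinement stage plays.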

Key Steps in CLMASP:

- Skeleton Plan Generation:

  - Chain-of-Thought (CoT) prompting guides the LLM in generating an initial task sequence.

  - Iterative syntactic self-refinement (SR) ensures that the generated plan is grammatically correct and format-compliant.

  - Semantic Referring-Grounding (RG) uses a vector database to ensure that the objects named in the plan refer to actual entities in the environment.

- ASP-Based Plan Refinement:

  - The skeleton plan, the environment state, and the robot action model are encoded as an ASP program.

  - ASP resolves the constraints and fills in the steps required for execution, taking into account dependencies and task-specific prerequisites.

  - The Clingo solver runs the ASP program to produce the final, executable plan.
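The steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the paper's implementation: the `verb(object)` step format, the toy embedding table standing in for a vector database, and the `object/1` and `skeleton/2` predicate names are all hypothetical. The action-model rules and the call to Clingo are omitted.

```python
import math
import re
from typing import Dict, List, Tuple

# Hypothetical step format "verb(object)", e.g. "grab(milk)".
STEP_RE = re.compile(r"^([a-z_]+)\(([a-z_]+)\)$")

# Toy embeddings standing in for a vector database (values are made up).
EMBED: Dict[str, List[float]] = {
    "milk": [1.0, 0.0, 0.0],
    "fridge": [0.0, 1.0, 0.0],
    "refrigerator": [0.0, 1.0, 0.1],
    "sofa": [0.0, 0.0, 1.0],
}


def format_errors(lines: List[str]) -> List[str]:
    """List format violations; in an SR loop these would go back to the LLM."""
    return [
        f"line {i}: cannot parse {s!r}"
        for i, s in enumerate(lines, 1)
        if STEP_RE.match(s.strip()) is None
    ]


def cosine(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def ground(name: str, scene: List[str]) -> str:
    """Map a mentioned object name to the most similar object in the scene."""
    return max(scene, key=lambda obj: cosine(EMBED[name], EMBED[obj]))


def to_asp_facts(steps: List[Tuple[str, str]], scene: List[str]) -> str:
    """Emit scene objects and ordered skeleton steps as ASP facts."""
    facts = [f"object({o})." for o in scene]
    facts += [
        f"skeleton({i},{verb}({obj}))."
        for i, (verb, obj) in enumerate(steps, 1)
    ]
    return "\n".join(facts)


def skeleton_to_asp(lines: List[str], scene: List[str]) -> str:
    errors = format_errors(lines)
    if errors:
        raise ValueError("; ".join(errors))
    steps = []
    for s in lines:
        verb, obj = STEP_RE.match(s.strip()).groups()
        steps.append((verb, ground(obj, scene)))  # replace name with scene entity
    return to_asp_facts(steps, scene)


scene = ["milk", "fridge", "sofa"]
print(skeleton_to_asp(["grab(milk)", "putin(refrigerator)"], scene))
```

Here the LLM's "refrigerator" is mapped to the scene object "fridge" before the facts are emitted. A full pipeline would combine these facts with rules describing action preconditions and effects and pass the resulting program to an ASP solver.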

Experimental Evaluation

The VirtualHome platform served as the experimental testbed, comprising diverse household environments and a wide array of tasks. The evaluation metrics included Executability (Exec) and Goal Achievement Rate (GAR).
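As a rough illustration of how two such metrics could be computed, the sketch below assumes that Exec is the fraction of plans that run to completion in the simulator and that GAR is the fraction of tasks whose goal conditions hold after execution. These definitions are assumptions made for the example; the paper's exact definitions may differ. The `Episode` record and the sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Episode:
    executed: bool      # assumed: plan ran to completion in the simulator
    goal_reached: bool  # assumed: goal conditions held in the final state


def exec_rate(episodes: List[Episode]) -> float:
    """Fraction of episodes whose plan was executable."""
    return sum(e.executed for e in episodes) / len(episodes)


def goal_achievement_rate(episodes: List[Episode]) -> float:
    """Fraction of episodes that executed and reached the goal."""
    return sum(e.executed and e.goal_reached for e in episodes) / len(episodes)


# Made-up sample data, not results from the paper.
episodes = [
    Episode(True, True),
    Episode(True, False),
    Episode(False, False),
    Episode(True, True),
]
print(exec_rate(episodes))              # 0.75
print(goal_achievement_rate(episodes))  # 0.5
```

Under these assumed definitions GAR can never exceed Exec, since a plan that fails to execute cannot count as reaching its goal.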

Results:

- Executability: Baseline LLM methods produced executable plans in under 2% of cases. Adding ASP in the CLMASP framework raised executability to over 90%.

- Goal Achievement Rate: Both GPT-3.5 and GPT-4 showed significant improvements in GAR when supplemented with the ASP module, with GPT-4 reaching around 71% in the most advanced configuration.

Implications and Future Work

These results indicate that a hybrid approach combining LLMs and ASP substantially improves task planning. The implications of this work are twofold:

- Theoretical: It demonstrates the viability of combining non-monotonic logic programming with modern LLMs to handle complex task planning scenarios. ASP compensates for the difficulty LLMs have in respecting context and constraints.

- Practical: The CLMASP approach showcases a robust method for deploying more reliable and context-aware robotic assistants in real-world applications, particularly in dynamic and constraint-rich environments.

Future developments should focus on:

- Scalability: Enhancing the framework to support even larger and more complex environments.

- Open-Source LLMs: Exploring the integration with open-source LLMs like Llama to mitigate computational and economic constraints posed by proprietary models.

- Automated ASP Code Generation: Streamlining the generation of ASP programs through ontology-driven methods and inductive logic techniques to further reduce the dependency on expert knowledge.

Conclusion

The "CLMASP: Coupling LLMs with Answer Set Programming for Robotic Task Planning" paper offers a practical approach to robotic task planning in complex settings. By combining the generative strengths of LLMs with the precise, constraint-oriented reasoning of ASP, it substantially improves the executability and goal achievement of the generated plans. The research opens avenues for future work, particularly in improving scalability, adopting open-source models, and automating the generation of ASP programs.