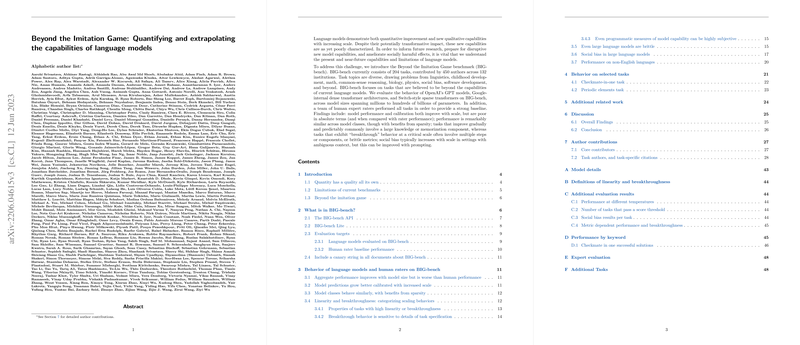

Beyond the Imitation Game: A Comprehensive Benchmark for LLMs with BIG-bench

Introduction

Large language models (LLMs, also abbreviated LMs here) are improving rapidly, and their capabilities keep outpacing the benchmarks meant to measure them. The Beyond the Imitation Game benchmark (BIG-bench) was introduced to address critical gaps in existing LLM benchmarks. It comprises 204 diverse tasks spanning domains such as linguistics, mathematics, and commonsense reasoning, along with tasks like code debugging and chess move prediction. It aims to characterize model behavior both qualitatively and quantitatively, offering insight into the capabilities and limitations of modern LLMs across a broad range of model sizes.

Evaluation Methodology

The paper reports evaluations of models of varying sizes, including models from Google and OpenAI that range from millions to hundreds of billions of parameters. These include both dense and sparse transformer architectures. The benchmark also incorporates a human expert baseline that puts model performance in context. Beyond raw task performance, BIG-bench also examines the models' calibration, bias, and robustness to how a task is presented.
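To make "calibration" concrete, the sketch below computes expected calibration error (ECE), one common way to compare a model's stated confidence with its actual accuracy. Whether this matches the exact calibration metric used in the paper is an assumption; the function name, the bin count, and the sample data are illustrative only.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    num_bins: int = 10,
) -> float:
    """Weighted average gap between confidence and accuracy over equal-width bins."""
    total = len(confidences)
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 falls into the last bin.
        index = min(int(conf * num_bins), num_bins - 1)
        bins[index].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_confidence = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(avg_confidence - accuracy)
    return ece


# Hypothetical predictions: the model claims 90% confidence but is right 3 times out of 4.
sample_confidences = [0.9, 0.9, 0.9, 0.9]
sample_correct = [True, True, True, False]
print(expected_calibration_error(sample_confidences, sample_correct))
```

A well-calibrated model yields a value near zero; larger values mean the stated confidence is a poor guide to how often the model is right.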

Key Findings and Implications

Performance Trends and Task Breakthroughs

One of the primary observations from the benchmark is that performance improves considerably with model scale. Even so, all models, regardless of size, fell well short of expert human performance. The analysis also uncovers instances of "breakthrough" behavior, where model performance on specific tasks improves dramatically beyond a certain model scale. This phenomenon indicates nonlinear scaling behavior in LMs, especially on tasks that involve multi-step reasoning or that use narrow success metrics.
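One way to see why multi-step tasks scored with a narrow metric can show abrupt jumps is a toy model: if a task needs several steps to all be correct and the metric is all-or-nothing, then a smooth improvement in per-step accuracy produces a sharp rise in the final score. The sketch below is an illustration under that assumption (independent steps, hypothetical accuracy values), not the paper's own analysis.

```python
def exact_match_rate(per_step_accuracy: float, num_steps: int) -> float:
    """Probability that every step is correct, assuming the steps are independent."""
    return per_step_accuracy ** num_steps


# Hypothetical per-step accuracies for progressively larger models.
per_step_accuracies = [0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
num_steps = 10  # hypothetical number of reasoning steps in the task

for accuracy in per_step_accuracies:
    score = exact_match_rate(accuracy, num_steps)
    print(f"per-step accuracy {accuracy:.2f} -> exact-match rate {score:.3f}")
```

In this toy setting the exact-match rate stays near zero for most of the range and climbs steeply only at the end, even though per-step accuracy improves steadily throughout.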

Sensitivity to Task Framing

The benchmark exposes the models' brittleness: performance fluctuates depending on how a task is framed. Such findings prompt a reevaluation of model robustness and suggest a need for models that generalize across different framings of essentially the same task.
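A simple way to quantify this kind of brittleness is to score the same underlying task under several framings and report the gap between the best and worst. The sketch below assumes per-example correctness is already available for each framing; the framing names and outcomes are hypothetical, and the spread measure is an illustrative choice rather than a metric taken from the paper.

```python
from statistics import mean
from typing import Dict, List


def accuracy_by_framing(results: Dict[str, List[bool]]) -> Dict[str, float]:
    """Map each framing name to the fraction of examples answered correctly."""
    return {
        name: mean(1.0 if ok else 0.0 for ok in outcomes)
        for name, outcomes in results.items()
    }


def framing_spread(accuracies: Dict[str, float]) -> float:
    """Gap between the best and worst framing; 0.0 means no sensitivity."""
    return max(accuracies.values()) - min(accuracies.values())


# Hypothetical outcomes for one task presented in two different ways.
results = {
    "multiple_choice": [True, True, True, False],
    "free_form": [True, False, False, False],
}
accuracies = accuracy_by_framing(results)
print(accuracies, framing_spread(accuracies))
```

A large spread on tasks that differ only in presentation signals that the score reflects the framing as much as the underlying capability.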

Social Bias

A disconcerting finding is that social bias tends to increase as models scale, especially in tasks set in broad or ambiguous contexts. This underscores the need for continued emphasis on ethical AI development practices that focus on fairness and bias mitigation.

Language and Domain Coverage

BIG-bench shows a pronounced performance disparity across languages, with models underperforming in particular on tasks involving low-resource languages. This gap underlines the importance of inclusive data representation when training models intended for global use.

Future Directions

The insights from BIG-bench provide a roadmap for future research in LMs, emphasizing model calibration, bias mitigation, and the development of more robust models. The emergence of breakthrough behaviors and the sensitivity to task framing underscore the need for continued exploration of model architectures and training procedures. The performance gap in tasks involving low-resource languages and specific domains points to the need for a more inclusive approach to data collection and model training.

Conclusion

BIG-bench marks a significant advancement in the pursuit of understanding LLMs' capabilities and limitations. By encompassing a wide range of tasks and evaluating models of varying scales, it delivers comprehensive insights into the current state of LMs. The findings highlight the complexities of model scaling, sensitivity to task framing, and the societal implications of model biases. As LMs continue to evolve, benchmarks like BIG-bench will be pivotal in guiding the development of more capable, equitable, and robust AI systems.