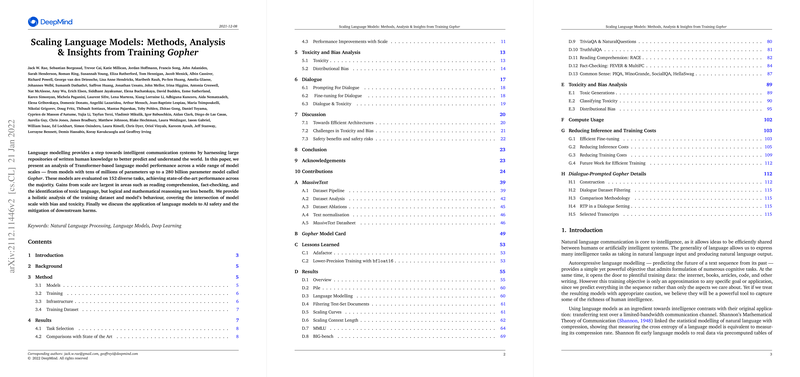

This paper (Rae et al., 2021) presents Gopher, a 280 billion parameter Transformer LLM, alongside a family of smaller models starting at 44 million parameters, to analyze the effects of scaling LLMs. The models are trained using an autoregressive objective on a large, diverse dataset called MassiveText, totaling 10.5 TB of text after filtering. MassiveText is composed of curated web pages (MassiveWeb), books, news articles, GitHub code, C4 [raffel2020exploring], and Wikipedia. A key finding is that data quality and filtering (including repetition removal and deduplication) significantly impact downstream performance.

The Gopher models employ a standard Transformer architecture with a few modifications: RMSNorm [zhang2019root] instead of LayerNorm [ba2016layer] and relative positional encodings [dai2019transformer]. The models are trained for 300 billion tokens with a 2048-token context window using the Adam optimizer [kingma2014adam]. Training infrastructure leverages JAX [jax2018github] and Haiku [haiku2020github], utilizing TPUv3 chips [tpuacm] with a combination of data and model parallelism within TPU pods and pipelining across pods to handle the immense memory requirements (2.5 TiB for Gopher's parameters and optimizer state). The paper notes that bfloat16 precision was used for Gopher's parameters and activations, but subsequent analysis showed that maintaining a float32 copy of parameters in the optimizer state for updates improved stability and performance.
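
Two details from the paragraph above can be made concrete with a minimal sketch. The first function shows the general form of RMSNorm (rescale by the root mean square, then apply a learned gain). The second checks the quoted 2.5 TiB figure under an assumed accounting of 2 bytes per bfloat16 parameter plus two 4-byte Adam moment buffers per parameter. The function names, the `eps` value, and the byte accounting are assumptions for illustration, not the paper's code.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """RMSNorm sketch: divide x by its root mean square, then scale by a gain.

    Unlike LayerNorm, no mean is subtracted and no bias is added.
    `eps` is an assumed small constant for numerical safety.
    """
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [g * v * inv_rms for g, v in zip(gain, x)]


def training_state_tib(n_params, param_bytes=2, adam_moment_bytes=4):
    """Rough memory for parameters plus two Adam moment buffers, in TiB.

    Assumed accounting: bfloat16 parameters (2 bytes) and two float32
    Adam moments (4 bytes each) per parameter.
    """
    total_bytes = n_params * (param_bytes + 2 * adam_moment_bytes)
    return total_bytes / 2**40


normed = rms_norm([3.0, 4.0], gain=[1.0, 1.0])
footprint = training_state_tib(280e9)
print(normed)
print(f"{footprint:.2f} TiB")
```

Under these assumptions the estimate lands close to the 2.5 TiB quoted above. Keeping an extra float32 copy of the parameters in the optimizer state, as the paper's later analysis recommends, would add a further 4 bytes per parameter.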

The models were evaluated on a diverse set of 152 tasks covering language modeling, reading comprehension, fact-checking, question answering, common sense, mathematics, logical reasoning, general knowledge, and academic subjects from benchmarks like MMLU [hendrycks2020measuring] and BIG-bench [bigbench].

Key findings from the evaluation results include:

- Overall Performance: Gopher achieves state-of-the-art performance compared to prior LLMs (including GPT-3 [gpt3], Jurassic-1 [jurassic], and Megatron-Turing NLG [Megatron-Turing]) on 100 out of 124 comparable tasks (81%).

- Impact of Scale: Scaling model size from 44 million to 280 billion parameters consistently improves performance across most tasks. Gains are particularly large in knowledge-intensive domains like reading comprehension (RACE-h, RACE-m, reaching near-human rater performance), fact-checking (FEVER), academic subjects (MMLU, where Gopher significantly outperforms GPT-3), and general knowledge.

- Limitations of Scale: The benefits of scaling are less pronounced for tasks requiring mathematical or logical reasoning (e.g., Abstract Algebra, High School Mathematics, Temporal Sequences from BIG-bench). This suggests that increasing model size alone may not be sufficient for breakthroughs in these areas.

- Language Modeling: On the Pile benchmark [pile], Gopher outperforms prior SOTA on 11 out of 19 datasets, showing improvements particularly on books and articles, which correlates with MassiveText's data composition. However, it underperforms on some datasets like Ubuntu IRC and DM Mathematics.

- Context Length: Using relative positional encodings and clamping the maximum relative distance allows Gopher (trained on 2048 tokens) to benefit from evaluation with longer contexts, particularly for articles and code.
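
The clamping idea in the context-length finding can be sketched as follows. For a causal model, each query position attends to earlier key positions, and the relative distance between them is capped so the model never sees a distance larger than the cap. The function name and the `max_distance` parameter are hypothetical; the source does not give the exact clamp value or implementation.

```python
def clamped_relative_distances(seq_len, max_distance):
    """For each query position i, list the clamped distance to each key j <= i.

    Distances beyond `max_distance` are mapped to `max_distance`, so a model
    evaluated on a longer sequence only sees distances it could have seen
    during training (assuming `max_distance` is set accordingly).
    """
    return [
        [min(i - j, max_distance) for j in range(i + 1)]
        for i in range(seq_len)
    ]


rows = clamped_relative_distances(seq_len=6, max_distance=3)
for row in rows:
    print(row)
```

In this toy case the last query sits 5 positions from the first key, but the encoding treats that key as if it were 3 positions away.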

The paper also provides a detailed analysis of model toxicity and bias, noting the importance of evaluating these aspects alongside performance gains:

- Toxicity Generation: When prompted with toxic inputs from the RealToxicityPrompts [gehman2020realtoxicityprompts] dataset, larger models tend to generate more toxic continuations, mirroring the input toxicity more closely than smaller models. However, unconditional generation toxicity remains low across scales, similar to the training data levels.

- Toxicity Classification: Model ability to classify toxicity in a few-shot setting (on CivilComments [nuanced_metrics]) increases with scale. However, even the largest model's performance is well below supervised SOTA classifiers. An analysis reveals unintended subgroup biases, with the model showing disparate performance and bias across different identity groups.

- Distributional Bias: Evaluations for gender-occupation stereotypes (using word probabilities and Winogender [rudinger201eter]) and sentiment bias towards different social groups (race, religion, country, occupation) show that increasing model size does not consistently reduce these biases. Templates and choice of demographic terms significantly impact measured bias.

- Dialect Perplexity: Perplexity is higher on African American-aligned Twitter data compared to White-aligned data, and this gap does not close with model scale, indicating disparate performance on different dialects.

- Evaluation Limitations: The authors highlight limitations of current toxicity and bias metrics, including classifier biases, brittleness of template-based evaluations, challenges in defining context-dependent harms, and the need for robust, application-specific metrics.
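
The dialect perplexity comparison above rests on a simple quantity: perplexity is the exponential of the average negative log-probability per token. A minimal sketch, using toy log-probabilities that are purely illustrative and not taken from the paper:

```python
import math


def perplexity(token_logprobs):
    """Perplexity from per-token natural-log probabilities."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def perplexity_gap(logprobs_a, logprobs_b):
    """Difference in perplexity between two evaluation sets (a minus b)."""
    return perplexity(logprobs_a) - perplexity(logprobs_b)


# Toy inputs (assumed, for illustration only): the model assigns
# probability 0.25 to every token in set A and 0.5 to every token in set B.
set_a = [math.log(0.25)] * 4
set_b = [math.log(0.5)] * 4
gap = perplexity_gap(set_a, set_b)
print(perplexity(set_a), perplexity(set_b), gap)
```

A positive gap means the model is more uncertain on set A. The finding reported above is that such a gap between dialect-aligned datasets persists as models grow.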

In the dialogue section, the paper explores interacting with Gopher:

- Dialogue Prompting: By using a curated prompt describing a helpful AI assistant, the standard language model can emulate conversational behavior without fine-tuning. While this shows promise for factual Q&A and creative tasks, it is not robust and can still generate incorrect or harmful content, especially under adversarial prompting [perez2021redteaming]. Unlike general text generation, toxicity in Dialogue-Prompted Gopher responses tends to decrease or remain stable with scale, possibly due to the prompt's instructions for politeness.

- Dialogue Fine-tuning: Fine-tuning Gopher on a dialogue-specific subset of MassiveText did not yield a statistically significant preference in human evaluations compared to the Dialogue-Prompted version.
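
Dialogue prompting, as described above, amounts to placing a descriptive preamble and the conversation so far in front of the model and letting it continue the text. The sketch below shows one way such a prompt could be assembled. The preamble text, speaker names, and function name are placeholders invented for illustration; they are not the prompt used in the paper.

```python
def build_dialogue_prompt(preamble, history, user_message,
                          user_name="User", bot_name="Assistant"):
    """Assemble a plain-text prompt that ends where the model should reply.

    `history` is a list of (speaker, text) pairs from earlier turns.
    """
    lines = [preamble.strip(), ""]
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append(f"{user_name}: {user_message}")
    # Leave the assistant's turn open so the language model completes it.
    lines.append(f"{bot_name}:")
    return "\n".join(lines)


prompt = build_dialogue_prompt(
    preamble="The following is a conversation with a helpful, polite assistant.",
    history=[("User", "Hello."), ("Assistant", "Hello! How can I help?")],
    user_message="What is the capital of France?",
)
print(prompt)
```

Because the behavior comes only from conditioning text, nothing prevents a later turn from steering the model away from the preamble, which is consistent with the lack of robustness noted above.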

The discussion section covers future directions and challenges:

- Efficient Architectures: Training costs are dominated by linear layers. Future work should focus on more efficient architectures like Mixture-of-Experts [fedus2021switch] or retrieval-based models [borgeaud2021retrieval] to push scale further.

- Mitigation: Many harms are best addressed downstream through fine-tuning, monitoring, and sociotechnical methods, as safety requirements are often application-specific. However, robust downstream mitigation depends on further research.

- Safety Benefits: LLMs capable of nuanced communication are seen as potentially powerful tools for AI safety research, enabling humans to better supervise and align advanced systems through techniques like iterated amplification [christiano2018amplification] and recursive reward modeling [leike2018scalable].

The paper also details efforts to reduce training and inference costs:

- Efficient Fine-tuning: Fine-tuning the entire model generally yields the best performance for a given compute budget, although bias-only tuning or fine-tuning limited layers can be useful under memory constraints. Adafactor [shazeer2018Adafactor] was used for fine-tuning Gopher to reduce memory footprint.

- Inference Cost Reduction (Distillation, Pruning): Distillation of larger models to smaller ones and magnitude pruning were explored but showed limited success in achieving significant compression (beyond 20-30% for pruning) without a substantial performance drop for the general language modeling objective.

- Training Cost Reduction (Dynamic Sparsity, Reverse Distillation, Warm Starting): Dynamic sparse training did not provide significant FLOPs reduction. Reverse distillation (distilling a small teacher to a large student) offered only limited gains early in training. Warm starting (initializing a larger model with weights from a smaller one) was more successful, particularly depth-wise expansion by replicating layers, achieving comparable performance to training from scratch with up to 40% less compute in tested cases.
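
Two of the techniques in this list are simple enough to sketch on toy data. Magnitude pruning zeroes the weights with the smallest absolute values, and depth-wise warm starting builds a deeper model by replicating the layers of a smaller one. The function names are hypothetical, and duplicating each layer consecutively is an assumed ordering, since the text above does not specify how replicated layers are arranged.

```python
import copy


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def expand_depth(layers, factor):
    """Warm-start a deeper stack by repeating each layer `factor` times.

    Each copy is independent, so the replicas can diverge during training.
    Consecutive duplication (1, 1, 2, 2, ...) is an assumption here.
    """
    return [copy.deepcopy(layer) for layer in layers for _ in range(factor)]


pruned = magnitude_prune([0.5, -0.1, 2.0, 0.05], sparsity=0.5)
small_model = [{"w": 1.0}, {"w": 2.0}]
large_model = expand_depth(small_model, factor=2)
print(pruned)
print(large_model)
```

The pruning sketch makes the reported limit easier to picture: past a modest sparsity level, the weights being removed are no longer negligible in magnitude.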

In conclusion, the work demonstrates that scaling Transformer LLMs and using high-quality, diverse data like MassiveText leads to significant performance gains across a wide range of tasks, particularly knowledge-intensive ones. However, limitations in reasoning tasks persist, and challenges related to toxicity, bias, and efficient scaling require further research and dedicated mitigation strategies.