Overview of the Generative Image-to-Text Transformer (iVLM)

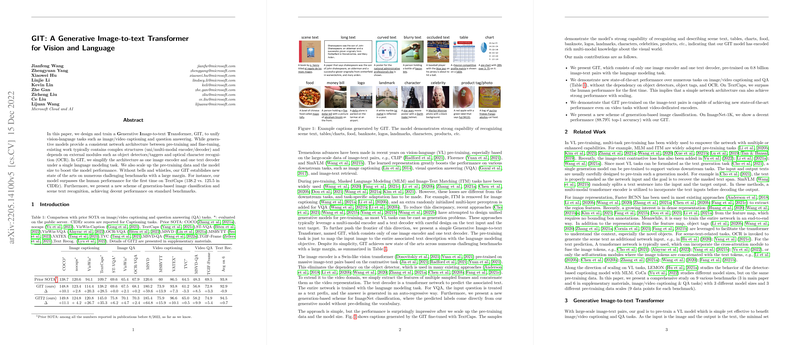

This paper introduces the Generative Image-to-Text Transformer (iVLM), a unified architecture designed to handle a variety of vision-language tasks, such as image/video captioning and question answering (QA). iVLM simplifies conventional approaches by using a single image encoder and a single text decoder, avoiding complex structures and dependencies on external modules such as object detectors and OCR. The model is trained with a single language modeling task.
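To make the single-encoder, single-decoder design more concrete, here is a minimal sketch of how image tokens and caption tokens could share one decoder's self-attention. It assumes the image encoder's output tokens are placed before the text tokens and that a seq2seq-style mask is used: image tokens attend to one another, and each text token attends to all image tokens plus the text tokens up to its own position. The function name `build_attention_mask` is hypothetical, and this mask layout is an assumption, not a detail stated in the overview.

```python
def build_attention_mask(num_image_tokens: int, num_text_tokens: int) -> list[list[bool]]:
    """Return mask[i][j] == True when query position i may attend to key position j.

    Hypothetical layout: positions [0, num_image_tokens) hold image tokens and
    the remaining positions hold caption tokens, all fed to one decoder.
    """
    total = num_image_tokens + num_text_tokens
    mask = [[False] * total for _ in range(total)]
    for i in range(total):
        for j in range(total):
            if j < num_image_tokens:
                # Every position (image or text) may look at the full image prefix.
                mask[i][j] = True
            elif i >= num_image_tokens and j <= i:
                # Text queries see text keys only up to their own position (causal).
                mask[i][j] = True
            # Otherwise the entry stays False: image queries never look at text tokens.
    return mask


if __name__ == "__main__":
    # 2 image tokens followed by 3 text tokens; "1" = may attend, "." = blocked.
    for row in build_attention_mask(2, 3):
        print("".join("1" if allowed else "." for allowed in row))
    # 11...
    # 11...
    # 111..
    # 1111.
    # 11111
```

Under this assumed mask, the caption is generated left to right while always conditioning on the whole image, which is what lets a plain language modeling loss drive training.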

Performance and Methodology

The model achieves state-of-the-art results on several benchmarks. For example, iVLM surpasses human performance on TextCaps, reaching a CIDEr score of 138.2 versus the human score of 125.5, a margin of 12.7 points. This is notable given the model's relative simplicity, and the same architecture handles a diverse range of image and video tasks effectively.

Strong results were also reported on other datasets: a CIDEr score of 148.8 on COCO and 114.4 on VizWiz. These results highlight the model's ability to generalize across different contexts. Furthermore, iVLM can be extended to video captioning by encoding multiple sampled frames.
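The video extension can be sketched as follows, under stated assumptions: frames are sampled uniformly (the midpoint of each of `k` equal segments), each sampled frame is passed through the image encoder independently, a per-frame temporal embedding is added to that frame's tokens, and all tokens are concatenated for the decoder. The sampling rule, the temporal embeddings, and the names `sample_frame_indices`, `encode_video`, and `encode_image` are hypothetical illustrations rather than details given above.

```python
def sample_frame_indices(num_frames: int, k: int) -> list[int]:
    """Pick k frame indices spread evenly over a clip (the midpoint of k equal segments)."""
    if num_frames <= 0 or k <= 0:
        raise ValueError("num_frames and k must be positive")
    segment = num_frames / k
    return [min(num_frames - 1, int(segment * (s + 0.5))) for s in range(k)]


def encode_video(frames, k, encode_image, temporal_embeddings):
    """Encode k sampled frames independently and concatenate their tokens.

    encode_image: hypothetical stand-in for the image encoder; maps one frame
        to a list of token vectors (lists of floats).
    temporal_embeddings: one vector per sampled frame (an assumption), added to
        that frame's tokens so the decoder can tell which frame a token came from.
    """
    if len(temporal_embeddings) < k:
        raise ValueError("need one temporal embedding per sampled frame")
    tokens = []
    for t, idx in enumerate(sample_frame_indices(len(frames), k)):
        for token in encode_image(frames[idx]):
            tokens.append([x + e for x, e in zip(token, temporal_embeddings[t])])
    return tokens


if __name__ == "__main__":
    frames = [float(i) for i in range(100)]  # toy "frames": each is just its index

    def toy_encoder(frame):
        # Hypothetical encoder: two 3-dimensional tokens per frame.
        return [[frame, 0.0, 1.0], [frame, 1.0, 0.0]]

    temporal = [[0.0, 0.0, float(t)] for t in range(4)]
    video_tokens = encode_video(frames, 4, toy_encoder, temporal)
    print(sample_frame_indices(100, 4))  # [12, 37, 62, 87]
    print(len(video_tokens))             # 8 (4 frames x 2 tokens)
```

Because each frame reuses the image encoder unchanged, the decoder simply sees a longer prefix of visual tokens; no video-specific module is needed in this sketch.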

Data and Architecture

iVLM is pre-trained on a large-scale dataset of 0.8 billion image-text pairs, which strengthens its ability to understand images and generate relevant descriptions. The image encoder is derived from a Swin-like vision transformer pre-trained with a contrastive objective, which eliminates the need for a separate object detection module.
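As an illustration of what "pre-trained with a contrastive objective" means, here is a sketch of a CLIP-style symmetric InfoNCE loss over a batch of image and text embeddings. This is a representative contrastive loss swapped in for illustration; the exact objective used for iVLM's image encoder may differ, and the function names and the default `temperature=0.07` are assumptions.

```python
import math


def _normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _log_softmax(row):
    m = max(row)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE over a batch: (image_embs[i], text_embs[i]) is the
    positive pair, and every other image-text pairing in the batch is a negative."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, scaled by 1/temperature.
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image -> text: each row's probability mass should sit on the diagonal.
    loss_i2t = -sum(_log_softmax(logits[i])[i] for i in range(n)) / n
    # Text -> image: the same, column-wise.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = -sum(_log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    print(contrastive_loss(images, [[1.0, 0.0], [0.0, 1.0]]))  # near 0: pairs match
    print(contrastive_loss(images, [[0.0, 1.0], [1.0, 0.0]]))  # large: pairs swapped
```

Training with a loss of this kind pushes the encoder to produce image features that already align with text semantics, which is one intuition for why a separate object detector becomes unnecessary.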

Pre-training uses a language modeling (LM) loss, which offers efficiency advantages over the typical Masked Language Modeling (MLM) approach. Additionally, because iVLM is generative, it can predict image labels directly as text, demonstrating a novel generation-based approach to image classification.
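One commonly given reason for the LM efficiency advantage is that LM turns every caption token into a prediction target, whereas MLM only predicts the masked subset. The sketch below computes a teacher-forced LM loss from per-step predicted distributions and counts supervised positions under each objective. The function names are hypothetical, predicted distributions are represented as plain dicts for simplicity, and the 15% mask ratio is an assumed BERT-style default, not a figure from the paper.

```python
import math


def lm_loss(step_probs, target_ids):
    """Mean negative log-likelihood of a caption under teacher forcing.

    step_probs[t]: the decoder's predicted distribution for position t, as a
        dict {token_id: probability}, conditioned on the image tokens and the
        ground-truth tokens before t.
    target_ids[t]: the ground-truth token at position t.
    """
    if len(step_probs) != len(target_ids):
        raise ValueError("one predicted distribution per target token is required")
    nll = [-math.log(step_probs[t][target_ids[t]]) for t in range(len(target_ids))]
    return sum(nll) / len(nll)


def supervised_positions(num_tokens, objective, mask_ratio=0.15):
    """Number of tokens in one caption that receive a training signal."""
    if objective == "lm":
        return num_tokens  # every token is a next-token prediction target
    if objective == "mlm":
        # Only the masked subset is predicted (mask_ratio is an assumed default).
        return max(1, round(num_tokens * mask_ratio))
    raise ValueError(f"unknown objective: {objective}")


if __name__ == "__main__":
    probs = [{5: 0.5, 6: 0.5}, {7: 0.25, 8: 0.75}]
    print(lm_loss(probs, [5, 8]))          # (ln 2 + ln 4/3) / 2
    print(supervised_positions(20, "lm"))  # 20
    print(supervised_positions(20, "mlm")) # 3
```

Under these assumptions, a 20-token caption yields 20 supervised predictions per pass with LM versus about 3 with MLM.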

Analysis of Model and Data Scaling

The analysis shows that both increasing model size and scaling up pre-training data significantly improve task performance, especially on scene-text-related QA tasks. It also reveals that a strong image encoder, pre-trained with contrastive methods, has a crucial impact on overall vision-language (VL) performance.

Implications and Future Directions

This research underscores the efficacy of generative models in unified vision-language tasks, emphasizing the importance of scalable data and model architectures. The results suggest that a simplified model structure can achieve competitive and even superior performance on complex tasks with appropriate scaling.

The paper opens avenues for further exploration of generative models, particularly extending iVLM beyond its current scope to incorporate text-only data and thereby strengthen its text decoding capabilities. Future work may also explore in-context learning and control over generated outputs, which remains challenging in the current framework.

Conclusion

iVLM sets a new standard in vision-language modeling by replacing complex task-specific architectures with a simple yet highly effective generative model. Its strong performance across a wide range of benchmarks illustrates the potential of scaling both data and model architecture to advance AI capabilities. As AI research progresses, the methodologies and insights from this work will likely inform future developments in generative models for vision and language tasks.