SVTR: Scene Text Recognition with a Single Visual Model

The paper "SVTR: Scene Text Recognition with a Single Visual Model" presents a scene text recognition approach that departs from the traditional hybrid architecture, in which a visual model extracts features and a sequence model transcribes them into text. The authors propose SVTR, a method that relies solely on a visual model and needs no sequence modeling component. It works within a patch-wise image tokenization framework: the text image is split into small patches that are processed as tokens, which keeps recognition both efficient and accurate.
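To make patch-wise tokenization concrete, here is a minimal NumPy sketch. It assumes non-overlapping 4 × 4 patches and a 32 × 100 RGB input. SVTR's actual patch embedding is convolutional, so the hypothetical `patchify` helper below is only a simplified stand-in; it reproduces what we understand to be the paper's H/4 × W/4 grid of components, not its learned embedding.

```python
import numpy as np

def patchify(image: np.ndarray, patch_h: int = 4, patch_w: int = 4) -> np.ndarray:
    """Split an (H, W, C) image into a grid of flattened, non-overlapping patches.

    Returns shape (H // patch_h, W // patch_w, patch_h * patch_w * C).
    Hypothetical helper: a simplified stand-in for SVTR's convolutional embedding.
    """
    h, w, c = image.shape
    if h % patch_h or w % patch_w:
        raise ValueError("image size must be divisible by the patch size")
    gh, gw = h // patch_h, w // patch_w
    # (gh, patch_h, gw, patch_w, C) -> (gh, gw, patch_h, patch_w, C)
    patches = image.reshape(gh, patch_h, gw, patch_w, c).transpose(0, 2, 1, 3, 4)
    # Flatten each patch into one token vector.
    return patches.reshape(gh, gw, patch_h * patch_w * c)

# A 32 x 100 RGB text crop becomes an 8 x 25 grid of "character components".
img = np.random.rand(32, 100, 3).astype(np.float32)
tokens = patchify(img)
print(tokens.shape)  # (8, 25, 48)
```

Each of the 8 × 25 grid cells is one token; in SVTR such tokens would then be projected to an embedding dimension and passed through the mixing blocks.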

Methodology

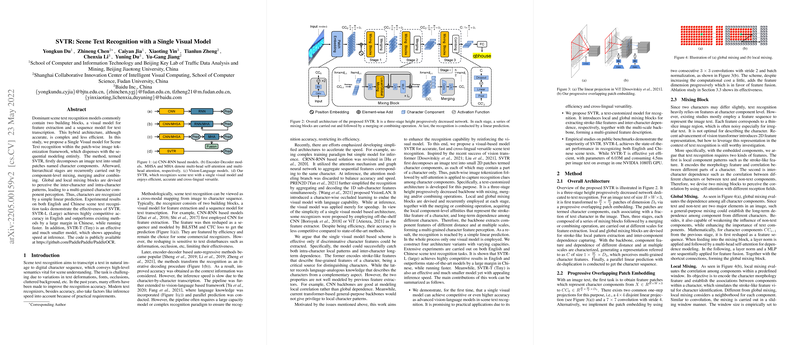

SVTR decomposes a text image into small patches called character components. It then processes them through a series of hierarchical stages that apply component-level mixing, merging, and combining. The architecture introduces global and local mixing blocks to capture inter-character and intra-character patterns, respectively. Together these give SVTR a multi-grained perception of character components, so characters can be recognized with a simple linear prediction.
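The difference between global and local mixing can be sketched as self-attention with and without a neighborhood mask. This is an illustrative sketch under stated assumptions: the hypothetical `mixing` function omits the learned query/key/value projections, multiple heads, and feed-forward layers a real block would have, and the 7 × 11 window is our assumption (we believe it matches the paper's default).

```python
from typing import Optional

import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def local_mask(h: int, w: int, win_h: int, win_w: int) -> np.ndarray:
    """(h*w, h*w) boolean mask: True where token j lies in the win_h x win_w
    window centered on token i (tokens indexed row-major over the h x w grid)."""
    rows, cols = np.divmod(np.arange(h * w), w)
    dr = np.abs(rows[:, None] - rows[None, :])
    dc = np.abs(cols[:, None] - cols[None, :])
    return (dr <= win_h // 2) & (dc <= win_w // 2)

def mixing(tokens: np.ndarray, mask: Optional[np.ndarray] = None) -> np.ndarray:
    """Single-head self-attention over (N, D) tokens, using the tokens directly
    as queries, keys and values (no learned projections, for brevity)."""
    d = tokens.shape[-1]
    scores = tokens @ tokens.T / np.sqrt(d)
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)  # block attention outside the window
    return softmax(scores) @ tokens

h, w, d = 8, 25, 16                       # 8 x 25 grid, width 16 (assumed)
rng = np.random.default_rng(0)
x = rng.standard_normal((h * w, d))
mask = local_mask(h, w, 7, 11)

global_out = mixing(x)        # global mixing: every component attends to all 200
local_out = mixing(x, mask)   # local mixing: each component sees only its window

x_far = x.copy()
x_far[-1] += 10.0             # perturb only the bottom-right component
print(np.allclose(mixing(x_far, mask)[0], local_out[0]))  # True: outside token 0's window
print(np.allclose(mixing(x_far)[0], global_out[0]))       # global mixing does see it
```

Perturbing a distant component changes the global output for the top-left token but leaves its local output untouched, which is the separation between inter-character and intra-character context that the two block types are meant to provide.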

The SVTR model is organized into three stages of progressively decreasing height. Each stage is a series of mixing blocks followed by a merging or combining operation. The mixing blocks use self-attention: local mixing captures stroke-like patterns within a character, while global mixing captures dependencies between characters. The result is a set of comprehensive, discriminative character features, which are essential for accurate text recognition.
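The three-stage layout can be traced by tensor shapes alone. The sketch below is a hypothetical NumPy stand-in, assuming merging follows stages 1 and 2 and combining follows stage 3. `merge` halves the height by averaging adjacent rows and applies a random linear map in place of a learned strided convolution; `combine` averages the height down to 1. The channel widths, the 37-class alphabet, and the random weights are illustrative assumptions, not the paper's configuration. The greedy CTC-style decoding at the end (collapse repeats, drop the blank) is our addition, reflecting our understanding that SVTR pairs its linear head with CTC.

```python
import numpy as np

rng = np.random.default_rng(0)

def merge(x: np.ndarray, out_dim: int) -> np.ndarray:
    """Hypothetical merging: halve the height (stride 2 vertically, 1 horizontally),
    then change the channel width with a random linear map (stand-in for a conv)."""
    h, w, d = x.shape
    x = x[: h - h % 2].reshape(h // 2, 2, w, d).mean(axis=1)
    return x @ (rng.standard_normal((d, out_dim)) / np.sqrt(d))

def combine(x: np.ndarray, out_dim: int) -> np.ndarray:
    """Hypothetical combining: pool the height down to 1, then project channels."""
    d = x.shape[-1]
    pooled = x.mean(axis=0)  # (W, D): one vector per horizontal position
    return pooled @ (rng.standard_normal((d, out_dim)) / np.sqrt(d))

def ctc_greedy_decode(indices, blank: int = 0) -> list:
    """Collapse consecutive repeats, then drop blanks (greedy CTC decoding)."""
    out, prev = [], None
    for i in indices:
        i = int(i)
        if i != prev and i != blank:
            out.append(i)
        prev = i
    return out

# Mixing blocks would run inside every stage; they are omitted to focus on shapes.
x = rng.standard_normal((8, 25, 64))   # stage 1: H/4 x W/4 components, width 64 (assumed)
x = merge(x, 128)                      # after stage 1 -> (4, 25, 128)
x = merge(x, 256)                      # after stage 2 -> (2, 25, 256)
feats = combine(x, 192)                # after stage 3 -> (25, 192)

num_classes = 37                       # assumed: blank + 26 letters + 10 digits
logits = feats @ rng.standard_normal((192, num_classes))  # linear prediction per position
pred = logits.argmax(axis=-1)          # (25,) class index per horizontal position
print(x.shape, feats.shape, pred.shape)
print(ctc_greedy_decode([0, 3, 3, 0, 3, 5, 5, 0]))  # [3, 3, 5]
```

Because the height is collapsed to 1 before prediction, each of the 25 horizontal positions gets its own class scores, which is what allows a plain linear layer to stand in for a separate sequence model.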

Numerical Results and Performance

The experimental results show that SVTR performs competitively on both English and Chinese scene text recognition. The SVTR-Large (SVTR-L) model achieves highly competitive accuracy on English benchmarks and outperforms existing methods by a significant margin on Chinese ones, while running faster than many contemporary models. SVTR-Tiny (SVTR-T), a much smaller variant, remains effective and offers fast inference at modest computational cost.

Implications and Future Work

The main implication of SVTR's approach is a simpler architecture for scene text recognition. Removing the sequence model reduces complexity and improves inference speed, which makes the approach well suited to practical applications. Because it does not rely on language-aware components, SVTR also carries over readily to different languages, something that is often difficult for models built around such components.

From a theoretical standpoint, the work suggests that a single, well-designed visual model can match or exceed models that incorporate language-based components. This could steer future research in computer vision toward simpler and more efficient architectures.

Conclusion

In summary, the paper presents SVTR as an effective scene text recognition approach built on a single visual model. Its multi-grained feature extraction delivers strong performance in both speed and accuracy. SVTR is an appealing option for applications that need fast, reliable text recognition across languages, and it leaves room for further optimization and application-specific adaptation.