Exploring Unified Video-Language Pre-training with SimVLT Transformer

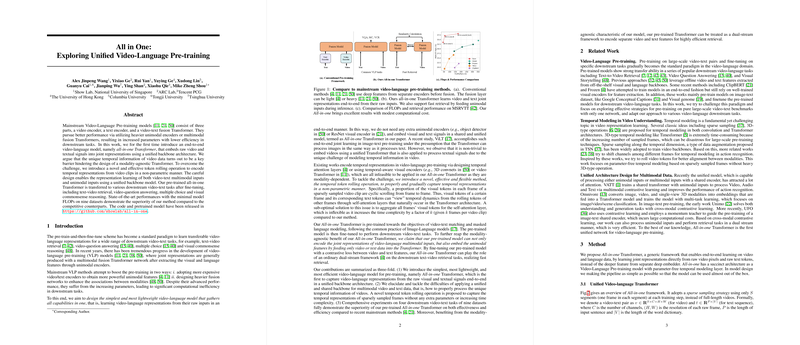

The paper introduces a unified framework for video-language pre-training, focusing on an end-to-end model named SimVLT Transformer. This research addresses the limitations of mainstream Video-Language Pre-training (VLP) models, which typically consist of separate video encoders, text encoders, and multimodal fusion Transformers. These traditional models tend to rely on heavier unimodal encoders or complex fusion mechanisms that increase computational demand and reduce efficiency when transferring to downstream tasks.

Model Architecture and Key Innovations

The SimVLT Transformer represents a paradigm shift towards a single multimodal encoder that processes both video and text inputs. The model's design is predicated on the hypothesis that a unified transformer architecture can effectively learn joint representations from raw multimodal inputs. The primary technical challenge addressed is the encoding of temporal information inherent in video data. Unlike images, videos require handling temporal dynamics that are not trivially accommodated in a modality-agnostic transformer.
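As a rough illustration of what feeding both modalities to a single encoder can look like, the sketch below flattens per-frame visual tokens and appends them to the text tokens to form one input sequence. It is a minimal sketch under stated assumptions, not the paper's implementation: the function name `build_joint_sequence`, the text-first ordering, and the modality ids (0 for text, 1 for video) are all hypothetical choices made for this example.

```python
from typing import List, Tuple

Embedding = List[float]      # one token embedding
Frame = List[Embedding]      # visual tokens of one frame


def build_joint_sequence(
    text_tokens: List[Embedding], frames: List[Frame]
) -> Tuple[List[Embedding], List[int]]:
    """Concatenate text and video tokens into one sequence.

    Assumption (not from the paper): text tokens come first, and a parallel
    list of modality ids (0 = text, 1 = video) marks where each token came from.
    """
    # Flatten frames in temporal order: frame 0 tokens, then frame 1 tokens, ...
    video_tokens = [token for frame in frames for token in frame]
    sequence = list(text_tokens) + video_tokens
    modality_ids = [0] * len(text_tokens) + [1] * len(video_tokens)
    return sequence, modality_ids


# Two text tokens and two frames of three visual tokens each.
text = [[0.1, 0.2], [0.3, 0.4]]
video = [[[1.0, 1.0]] * 3, [[2.0, 2.0]] * 3]
joint, ids = build_joint_sequence(text, video)
```

A single Transformer encoder would then attend over `joint` as one sequence, with no separate video encoder, text encoder, or fusion module.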

To overcome this, the authors propose a novel temporal token rolling mechanism, a non-parametric method that encodes temporal dynamics by rolling a proportion of visual tokens across video frames. This approach allows for effective temporal modeling without a prohibitive increase in parameter count or computational complexity, avoiding the drawbacks of past methods such as temporal attention layers or temporal-aware encoders.
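The rolling idea described above can be sketched in a few lines. This is a minimal sketch under stated assumptions rather than the authors' code: it assumes that the first `ratio` fraction of token positions in each frame is the part that gets rolled, that tokens move forward by `shift` frames, and that the roll wraps around circularly at the clip boundary. The name `temporal_token_roll` and both parameters are hypothetical.

```python
from typing import List

Token = List[float]      # one visual token embedding
Frame = List[Token]      # tokens of one frame
Clip = List[Frame]       # frames of one clip, in temporal order


def temporal_token_roll(clip: Clip, ratio: float = 0.25, shift: int = 1) -> Clip:
    """Roll a fraction of token positions along the time axis.

    Assumptions (not from the paper): the first int(num_tokens * ratio)
    positions are rolled, the roll is circular, and every frame has the
    same number of tokens. No learnable parameters are involved.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    num_frames = len(clip)
    if num_frames == 0:
        return []
    num_tokens = len(clip[0])
    num_rolled = int(num_tokens * ratio)

    rolled: Clip = []
    for t in range(num_frames):
        src = (t - shift) % num_frames  # frame that donates the rolled tokens
        frame = [
            clip[src][n] if n < num_rolled else clip[t][n]
            for n in range(num_tokens)
        ]
        rolled.append(frame)
    return rolled


# Three frames of four one-dimensional tokens; token value = 10 * frame + position.
clip = [[[float(10 * t + n)] for n in range(4)] for t in range(3)]
mixed = temporal_token_roll(clip, ratio=0.5)
```

After the call, each frame holds half of its own tokens and half taken from the previous frame, so a per-frame self-attention layer sees information from a neighboring time step without any added weights.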

Empirical Evaluation

SimVLT Transformer is evaluated across several video-language tasks, including text-video retrieval, video question answering (VideoQA), and visual commonsense reasoning, demonstrating state-of-the-art performance with minimal FLOPs on nine datasets. SimVLT achieves these results while using significantly fewer parameters than existing VLP models.

The experimental results highlight the efficacy of the temporal token rolling operation, which captures temporal dependencies while keeping computational cost low. The reduction in model complexity does not compromise accuracy: SimVLT maintains competitive or superior performance across diverse benchmarks.

Theoretical and Practical Implications

This research has significant implications for the future of VLP models. From a theoretical standpoint, SimVLT challenges the prevailing notion that more complex model architectures are necessary for high performance in multimodal tasks. Instead, the results underscore the potential of more efficient models that leverage innovative data processing techniques to maintain effectiveness.

Practically, SimVLT’s architecture is appealing for applications where computational resources are constrained or real-time processing is required. Large-scale video retrieval systems, interactive video games, and live video processing applications could all benefit from this lightweight approach.

Future Directions

The paper opens avenues for further refinement and exploration in video-language model design. Future research could extend the exploration of token-level interactions and potentially incorporate adaptive token rolling strategies, where the degree and pattern of rolling are learned from data.

Further efforts to understand and optimize the interaction between temporal encoding and other elements of the Transformer architecture may yield additional performance improvements. Additionally, exploring the role of unified pre-training architectures in other multimodal domains (e.g., audio-visual tasks) could extend the applicability of these insights beyond video-language models.

In summary, the presented work is a robust step towards more efficient and cohesive model architectures in VLP, with the potential to inspire a new generation of models that balance complexity with performance through innovative, resource-conscious techniques. The release of the SimVLT codebase further facilitates such developments, offering the research community a valuable tool for future explorations in unified video-language representation learning.