VLM: Task-agnostic Video-Language Model Pre-training for Video Understanding

The paper "VLM: Task-agnostic Video-Language Model Pre-training for Video Understanding" presents an approach to pre-training a video-language model in a task-agnostic manner. The method aims to improve multimodal video understanding by handling video and text data within a single, simplified framework.

Key Contributions

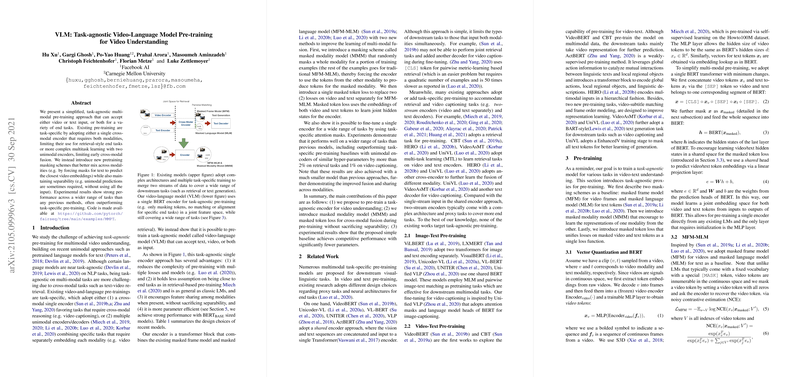

- Task-Agnostic Approach: Unlike existing models that often rely on task-specific pre-training strategies, VLM uses a task-agnostic setup. The authors introduce a unified approach in which the model accepts video, text, or both as input without committing to a specific task during pre-training.

- Innovative Masking Schemes: The paper introduces new token masking schemes that encourage cross-modal interaction and fusion. The two central pieces are the masked modality model (MMM), which masks an entire modality for some training examples, and a single masked token loss shared by video and text tokens. Together they promote the learning of a shared latent representation for the two modalities.

- Model Design and Efficiency: VLM employs a single BERT-based encoder, which reduces model complexity compared with conventional approaches that use multiple, often separate, encoders or decoders for different tasks. This design choice yields both parameter efficiency and flexibility across downstream applications.
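The masked modality idea in the list above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `sample_masks`, the probabilities `p_modality` and `p_token`, and the boolean-list representation are all assumptions chosen for illustration. The sketch only shows the control flow: for some examples one whole modality is hidden so the model must predict it from the other, and for the rest tokens are masked independently.

```python
import random

def sample_masks(num_video, num_text, rng, p_modality=0.5, p_token=0.15):
    """Return (video_mask, text_mask) as lists of booleans; True means masked.

    p_modality and p_token are illustrative defaults, not values taken
    from the paper.
    """
    if rng.random() < p_modality:
        # Masked-modality case: hide one whole modality, keep the other intact.
        hide_video = rng.random() < 0.5
        video_mask = [hide_video] * num_video
        text_mask = [not hide_video] * num_text
    else:
        # Ordinary case: mask tokens independently in both modalities.
        video_mask = [rng.random() < p_token for _ in range(num_video)]
        text_mask = [rng.random() < p_token for _ in range(num_text)]
    return video_mask, text_mask

rng = random.Random(0)
video_mask, text_mask = sample_masks(num_video=8, num_text=6, rng=rng)
print(video_mask, text_mask)
```

Because both masks feed the same encoder and the same prediction loss, a sketch like this needs no modality-specific branches beyond the choice of which positions to hide.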

Experimental Analysis

The paper's experimental results illustrate the effectiveness of the VLM approach across a range of tasks, often surpassing the performance of task-specific pre-training methods. Key findings include:

- Text-Video Retrieval: VLM achieves strong retrieval results, indicating that it learns robust joint video-text embeddings.

- Action Segmentation and Localization: The model performs well on action segmentation and localization, showing that it captures fine-grained video dynamics without task-specific alignment objectives.

- Video Captioning: Although it has no pre-trained decoder, the model performs competitively at generating descriptive video captions.
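To make the retrieval setting concrete, the following is a minimal sketch of how joint embeddings are typically scored. It assumes video and text have already been encoded into vectors of the same dimension; the helper names (`cosine`, `rank_videos`, `recall_at_k`) and the toy vectors are hypothetical and do not come from the paper or its code release.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def rank_videos(text_vec, video_vecs):
    """Return video indices sorted from most to least similar to the text."""
    scores = [cosine(text_vec, v) for v in video_vecs]
    return sorted(range(len(video_vecs)), key=lambda i: scores[i], reverse=True)

def recall_at_k(text_vecs, video_vecs, k):
    """Fraction of queries whose matching video (same index) is in the top k."""
    hits = sum(i in rank_videos(t, video_vecs)[:k] for i, t in enumerate(text_vecs))
    return hits / len(text_vecs)

# Toy embeddings, invented for illustration only.
videos = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
texts = [[0.9, 0.1], [0.1, 0.9], [0.2, 1.0]]
print(recall_at_k(texts, videos, k=1))
print(recall_at_k(texts, videos, k=2))
```

Recall at k of this kind is a common way to report text-video retrieval quality: a query counts as a hit when its paired video appears among the k highest-scoring candidates.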

Implications and Future Directions

VLM represents a meaningful step for multimodal learning, particularly in video understanding. Practically, the task-agnostic pre-training framework points toward more general systems that adapt to new tasks through fine-tuning alone, without task-specific pre-training or additional task-specific encoders. Theoretically, it highlights the potential for further work on integrating diverse modalities within a single architecture.

Looking forward, this research may inspire extensions of multimodal pre-training to other input formats and domains. Future work might apply newer architectures or larger datasets to refine cross-modal interaction, moving the field toward more comprehensive and versatile understanding systems.

The paper's open-source release, with the code available on GitHub, ensures reproducibility and encourages broader community engagement in enhancing and leveraging the proposed methodologies. This openness supports the continued advancement and refinement of video-language understanding technology.

In conclusion, the VLM framework presents a streamlined, effective alternative to complex, task-specific systems, promoting a holistic approach to video-language model pre-training that holds promise for future AI developments.