Learning vs Retrieval: The Role of In-Context Examples in Regression with LLMs

The paper "Learning vs Retrieval: The Role of In-Context Examples in Regression with LLMs" by Nafar, Venable, and Kordjamshidi provides an empirical evaluation of in-context learning (ICL) in LLMs, focusing on regression tasks. The authors ask how much LLMs learn from in-context examples and how much they instead retrieve from internal knowledge. The work challenges existing perspectives on this question and extends the empirical understanding of ICL mechanisms.

Summary of Contributions

- Demonstration of LLMs' Regression Capabilities: The paper demonstrates that LLMs, including GPT-3.5, GPT-4, and LLaMA 3 70B, can perform effectively on real-world regression tasks. This finding extends previous research that primarily focused on synthetic or less complex datasets, indicating LLMs' practical utility in numerical prediction tasks.

- Proposed ICL Mechanism Hypothesis: The authors propose a nuanced hypothesis suggesting that ICL mechanisms in LLMs lie on a spectrum between pure meta-learning and knowledge retrieval. They argue that the models adjust their behavior based on several factors, including the number and nature of in-context examples and the richness of the information provided.

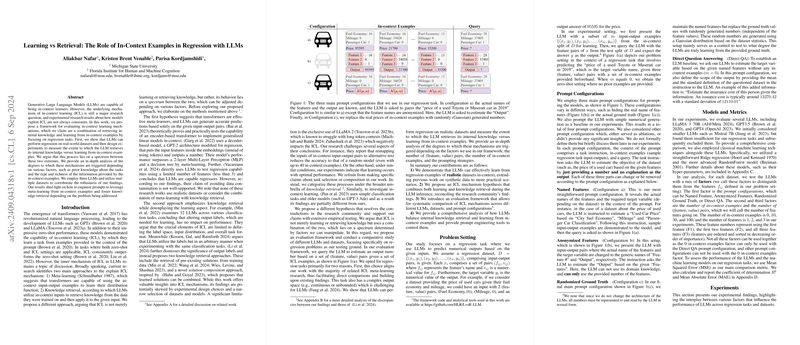

- Evaluation Framework: An evaluation framework is presented to systematically compare ICL mechanisms across different scenarios. This framework employs various configurations of prompts and in-context examples to analyze the extent to which LLMs rely on internal knowledge versus learning from provided examples.

- Empirical Insights into Prompt Engineering: The paper provides insights into prompt engineering for optimizing LLM performance. The authors show that the number of in-context examples and the number of features play crucial roles in determining the balance between learning and retrieval. In particular, fewer in-context examples combined with named features yield the best performance in many scenarios.

Key Findings and Implications

Learning and Knowledge Retrieval Spectrum

The primary assertion of this paper is that LLMs exhibit a spectrum of behaviors between learning from in-context examples and retrieving internal knowledge. This behavior is mediated by the number of in-context examples and the features included in the prompts. The findings contradict studies such as Min et al. (2022) and Li et al. (2024), which downplay the role of learning from in-context examples.

Performance Dependency on Prompt Configurations

Different prompt configurations were analyzed:

- Named Features: Where feature names are explicit, thereby facilitating internal knowledge retrieval.

- Anonymized Features: Where feature names are generic, focusing purely on learning from numerical data.

- Randomized Ground Truth: Where the output labels of the in-context examples are replaced with noise, testing whether the model actually learns from the labels it is shown.

- Direct QA: Using no in-context examples to assess baseline knowledge retrieval.
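The four configurations above can be illustrated with a small prompt builder. This is a minimal sketch, not the authors' code: the function names, the "name: value" prompt layout, the generic "Feature i" and "Output" labels, and the choice to draw random labels uniformly from the observed label range are all assumptions made for illustration.

```python
import random

CONFIGS = ("named", "anonymized", "randomized_gt", "direct_qa")

def format_example(features, target, target_name):
    """Render one example as 'name: value' pairs; a None target leaves the answer blank."""
    parts = [f"{name}: {value}" for name, value in features.items()]
    parts.append(f"{target_name}:" if target is None else f"{target_name}: {target}")
    return ", ".join(parts)

def anonymize(features):
    """Replace real feature names with generic ones (assumed naming scheme)."""
    return {f"Feature {i + 1}": v for i, v in enumerate(features.values())}

def build_prompt(config, examples, query, target_name="Target", seed=0):
    """Build a prompt for one configuration.

    examples: list of (features dict, numeric target) pairs
    query:    features dict whose target the model should predict
    """
    if config not in CONFIGS:
        raise ValueError(f"unknown config: {config}")
    rng = random.Random(seed)
    # Direct QA shows no in-context examples at all.
    shown = [] if config == "direct_qa" else list(examples)
    targets = [t for _, t in shown]
    anonymous = config == "anonymized"
    out_name = "Output" if anonymous else target_name
    lines = []
    for features, target in shown:
        if config == "randomized_gt":
            # Assumption: noise is drawn uniformly from the observed label range.
            target = round(rng.uniform(min(targets), max(targets)), 2)
        feats = anonymize(features) if anonymous else features
        lines.append(format_example(feats, target, out_name))
    q = anonymize(query) if anonymous else query
    lines.append(format_example(q, None, out_name))
    return "\n".join(lines)

# Hypothetical toy data, used only to show the four prompt shapes.
examples = [({"Mileage": 30000, "Year": 2018}, 15000),
            ({"Mileage": 60000, "Year": 2015}, 9000)]
query = {"Mileage": 45000, "Year": 2016}
for config in CONFIGS:
    print(f"--- {config} ---")
    print(build_prompt(config, examples, query, target_name="Price"))
```

Keeping the example set and query fixed while varying only the configuration makes the prompts directly comparable: any difference in the model's answer can then be attributed to the feature names or labels rather than to the data shown.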

The results consistently showed that allowing LLMs to utilize internal knowledge through named features and fewer in-context examples significantly enhanced performance. In contrast, anonymizing features required more examples to achieve similar performance levels. Randomized ground truth settings severely degraded performance, reinforcing the importance of coherent output labels in learning tasks.
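A comparison of this kind amounts to a sweep over configurations and numbers of in-context examples, scoring each cell by prediction error. The sketch below is an illustration under stated assumptions rather than the paper's evaluation code: `predict` is a hypothetical callable standing in for an LLM call, mean absolute error is an assumed metric, and the stub predictor at the end exists only so the example runs without a model.

```python
from statistics import mean

def mean_absolute_error(predictions, targets):
    """Average absolute difference between predictions and true values."""
    return mean(abs(p - t) for p, t in zip(predictions, targets))

def sweep(predict, train, test, configs, shot_counts):
    """Score every (configuration, number of in-context examples) pair.

    predict: hypothetical callable (config, examples, query_features) -> float
    train:   list of (features, target) pairs used as in-context examples
    test:    list of (features, target) pairs used as queries
    """
    results = {}
    for config in configs:
        for k in shot_counts:
            examples = train[:k]  # first k training pairs serve as in-context examples
            preds = [predict(config, examples, feats) for feats, _ in test]
            results[(config, k)] = mean_absolute_error(preds, [t for _, t in test])
    return results

def stub_predict(config, examples, query_features):
    """Stand-in for a model: predicts the mean of the shown labels, or 0.0 with none."""
    return mean(t for _, t in examples) if examples else 0.0

# Hypothetical toy data.
train = [({"x": 1}, 10.0), ({"x": 2}, 20.0)]
test = [({"x": 3}, 30.0)]
for cell, error in sweep(stub_predict, train, test, ["named"], [0, 1, 2]).items():
    print(cell, error)
```

Replacing `stub_predict` with a function that builds a prompt for the given configuration, queries a model, and parses the numeric answer would turn the sketch into a real experiment; the sweep logic itself would not need to change.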

Practical Applications and Prompt Engineering

The paper highlights the practical utility of strategically adjusting prompt configurations to achieve optimal performance, echoing previous research's emphasis on the importance of prompt design. By reducing in-context examples and enhancing feature information, practitioners can achieve more data-efficient deployments of LLMs. This ability to tune prompt configurations, without changing model weights, offers significant potential in domains where both data efficiency and robustness to noise are critical.

Data Contamination and Its Impact

An intriguing aspect explored by the authors is the potential for data contamination, even when feature names are anonymized. The paper raises concerns about the implicit exposure of LLMs to training data, suggesting that this contamination may influence performance beyond the intended retrieval mechanisms. This observation calls for more rigorous methods to disentangle pure learning from inadvertent retrieval of training data, an issue that future research must address.

Future Directions

The findings prompt several avenues for future research:

- Extending evaluations to a broader range of datasets and tasks, particularly those less represented in existing LLM training corpora.

- Integrating interpretability techniques to further elucidate the underlying mechanisms of ICL.

- Investigating whether these findings hold with more features and longer prompts, which current token limits constrain.

Conclusion

Nafar, Venable, and Kordjamshidi's paper advances the empirical understanding of ICL in LLMs, particularly in regression tasks. By proposing a nuanced spectrum between learning and retrieval, the authors clarify the roles of different prompt factors in enhancing LLM performance. Their work not only challenges existing assumptions but also offers practical guidance for optimizing LLM applications. This paper underscores the importance of strategically designing prompts and understanding the interplay between internal knowledge and in-context learning in the broader endeavor of leveraging LLMs for complex computational tasks.