LongT5: Efficient Text-To-Text Transformer for Long Sequences

The paper under review presents LongT5, an efficient text-to-text transformer designed to process long input sequences. It builds on the Text-To-Text Transfer Transformer (T5) architecture, which is well regarded for its scalability across a wide range of natural language processing tasks. The authors study the effect of scaling input length and model size at the same time, combining attention mechanisms from long-input transformers such as ETC with pre-training strategies from summarization models such as PEGASUS. The resulting model, LongT5, reports state-of-the-art performance on several summarization and question-answering datasets.

Core Contributions

LongT5 introduces several key innovations in transformer-based models:

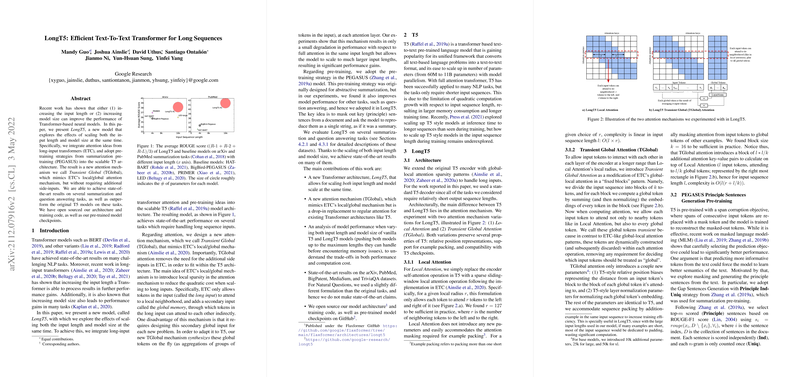

- Transient Global (TGlobal) Attention Mechanism: The paper introduces TGlobal, an attention mechanism inspired by ETC's local/global attention but adapted for the T5 architecture. TGlobal removes the need for the side-inputs that ETC requires, which lets input length scale efficiently. Instead of relying on separately supplied global inputs, it synthesizes global tokens on the fly by aggregating blocks of input tokens. Each input token then attends to its local neighborhood and to these transient global tokens, avoiding the quadratic cost of full self-attention.

- Pre-training with PEGASUS Principles: LongT5 adopts a pre-training strategy inspired by PEGASUS: key sentences are masked out of a document, and the model is trained to generate them from the remaining text. Because this objective resembles producing a summary, it strengthens the model's ability to extract and synthesize core information, which benefits tasks like summarization and question answering.

- Scalability and Performance: The paper provides evidence that LongT5 matches or outperforms existing models on tasks that require long-sequence processing. It reports strong results on datasets including arXiv, PubMed, BigPatent, and TriviaQA, which supports the model's effectiveness on long documents.

- Open Source Contribution: The authors release LongT5's architecture and pre-trained model checkpoints as open source, so the broader research community can reproduce and build upon these findings.
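
To make the TGlobal idea above more concrete, here is a minimal pure-Python sketch of the two ingredients: building one global token per block of inputs, and listing which keys a single query token can see. It is an illustration under stated assumptions, not the authors' implementation. The function names are hypothetical, the unit-length rescaling is a simple stand-in for whatever normalization the model applies, and the `radius` and `block_size` values are chosen only for illustration.

```python
from math import sqrt


def transient_global_tokens(embeddings, block_size):
    """Build one global token per block by summing the block's token vectors.

    The sum is rescaled to unit length, a simplified stand-in (assumption)
    for the normalization applied in a real model.
    """
    global_tokens = []
    for start in range(0, len(embeddings), block_size):
        block = embeddings[start:start + block_size]
        summed = [sum(dim) for dim in zip(*block)]
        norm = sqrt(sum(x * x for x in summed)) or 1.0
        global_tokens.append([x / norm for x in summed])
    return global_tokens


def attended_keys(position, seq_len, radius, num_globals):
    """Keys visible to one query: a local window plus every global token."""
    lo = max(0, position - radius)
    hi = min(seq_len, position + radius + 1)
    return list(range(lo, hi)), list(range(num_globals))


def attention_entries(seq_len, radius, block_size):
    """Count query-key pairs for the sparse pattern and for full attention."""
    num_globals = -(-seq_len // block_size)  # ceiling division
    sparse = 0
    for pos in range(seq_len):
        local, global_ids = attended_keys(pos, seq_len, radius, num_globals)
        sparse += len(local) + len(global_ids)
    return sparse, seq_len * seq_len


if __name__ == "__main__":
    toy_embeddings = [[float(i), 1.0] for i in range(8)]
    print(len(transient_global_tokens(toy_embeddings, block_size=4)))
    print(attention_entries(seq_len=4096, radius=127, block_size=16))
```

In this sketch the local term grows linearly with sequence length for a fixed radius, while the global term grows with the number of blocks, so the total number of query-key pairs stays well below the `seq_len * seq_len` pairs of full attention. A real model would additionally apply learned projections, position biases, and batched tensor operations, all omitted here.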
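
The PEGASUS-style pre-training described in the list above can be sketched in a similar spirit. The example below builds one training pair by masking the sentences that overlap most with the rest of the document and using them as the target. This is a simplified sketch under assumptions: the sentence splitter is naive, the scoring is a plain unigram-overlap F1 rather than a full ROUGE implementation, and `MASK`, `mask_ratio`, and all function names are hypothetical choices for illustration.

```python
import re
from collections import Counter

MASK = "<mask>"  # hypothetical sentinel; real vocabularies define their own


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def unigram_f1(candidate, reference):
    """ROUGE-1-style F1 from clipped unigram overlap of two token lists."""
    if not candidate or not reference:
        return 0.0
    overlap = sum((Counter(candidate) & Counter(reference)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(candidate)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)


def make_example(document, mask_ratio=0.3):
    """Mask the sentences that best match the rest of the document.

    Returns (inputs, targets): the document with the chosen sentences
    replaced by MASK, and the chosen sentences joined in document order.
    """
    sentences = split_sentences(document)
    tokens = [re.findall(r"\w+", s.lower()) for s in sentences]
    scores = []
    for i, sent in enumerate(tokens):
        rest = [tok for j, other in enumerate(tokens) if j != i for tok in other]
        scores.append(unigram_f1(sent, rest))
    num_masked = max(1, round(len(sentences) * mask_ratio))
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen = set(ranked[:num_masked])
    inputs = " ".join(MASK if i in chosen else s for i, s in enumerate(sentences))
    targets = " ".join(sentences[i] for i in sorted(chosen))
    return inputs, targets


if __name__ == "__main__":
    doc = "Cats chase mice. Dogs chase cats and mice. Dogs bark. Rain fell."
    print(make_example(doc, mask_ratio=0.25))
```

The design point this illustrates is that the target is made of sentences central to the document, so generating it resembles writing a short summary of the input.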

Implications and Speculations on Future Developments

The demonstrated improvements in handling long sequences have several implications. By showing that TGlobal attention and PEGASUS-style pre-training are effective within the T5 framework, this work opens avenues for further research into efficient processing of long contexts. Future developments may refine these mechanisms, for example by incorporating additional sparse attention techniques or low-rank approximations to further improve scalability and efficiency.

Moreover, given the significant role of transformers in ongoing advancements in areas such as machine translation, summarization, and comprehension, models like LongT5 will likely undergo iterative enhancements, improving automated understanding and processing of extensive textual data. As natural language tasks grow in complexity and data volume, the need for more efficient architectures remains crucial, and the adaptability of LongT5 could inspire similar architectural experiments across different domains in artificial intelligence.

This work also signals a potential shift toward increasingly general-purpose transformer architectures that can perform well across a variety of tasks without extensive task-specific alterations. By continuing to refine models like LongT5, the research community moves closer to robust, general language understanding and generation.

In summary, LongT5 marks an important step toward more broadly applicable and scalable transformer models. The paper's contributions to attention mechanism design and pre-training methodology represent significant progress in handling long-form inputs and set the stage for future work on scalable, efficient natural language processing.