An Analysis of "From Dense to Sparse: Contrastive Pruning for Better Pre-trained Language Model Compression"

The paper presents Contrastive Pruning (CAP), a framework for compressing Pre-trained Language Models (PLMs) such as BERT. Despite their success across NLP tasks, PLMs have very large parameter counts, which makes them expensive to run and to store. Pruning alleviates this burden by removing less important parameters. However, traditional pruning approaches tend to focus narrowly on task-specific knowledge and risk discarding the broader, task-agnostic knowledge acquired during pre-training. That loss can lead to catastrophic forgetting and weaker generalization.

Methodology

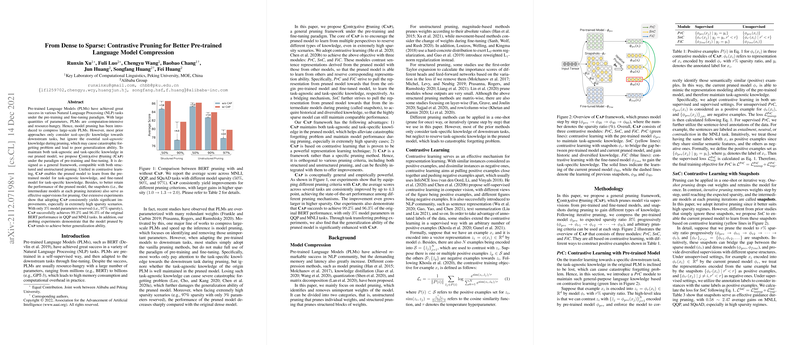

The paper introduces Contrastive Pruning, which leverages contrastive learning to help the sparsified model retain both task-agnostic and task-specific knowledge. The framework is designed to integrate with both structured and unstructured pruning methods, which makes it broadly applicable.

Key components include:

- PrC (Contrastive Learning with Pre-trained Model): This module emphasizes preserving task-agnostic knowledge by contrasting representations from the original pre-trained model with those from the sparsified model. This helps in maintaining the model's fundamental language understanding capabilities.

- SnC (Contrastive Learning with Snapshots): During the iterative pruning process, intermediate models, or snapshots, are used to bridge the representation gap between the densely pre-trained model and the sparsified model. This integration of historical models assists in maintaining performance consistency, especially under high sparsity.

- FiC (Contrastive Learning with Fine-tuned Model): It enables the pruned model to learn task-specific features by aligning its representations with those from a model fine-tuned on specific downstream tasks.
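
The three modules share one pattern: the pruned model's representation of an example is pulled toward a teacher's representation of the same example (pre-trained model, snapshot, or fine-tuned model) and pushed away from representations of other examples. The following is a minimal sketch of that pattern using a generic InfoNCE-style loss over cosine similarities. The choice of InfoNCE, the function names `info_nce` and `combined_contrastive_loss`, the temperature value, and the toy vectors are all assumptions made for illustration; the paper's exact objectives may differ.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss: low when anchor is close to positive, far from negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


def combined_contrastive_loss(pruned, teachers, negatives, temperature=0.1):
    """Sum one contrastive term per teacher representation of the same example.

    `teachers` maps a hypothetical label (e.g. "pretrained", "snapshot",
    "finetuned") to that teacher's representation of the example.
    """
    return sum(info_nce(pruned, t, negatives, temperature) for t in teachers.values())


# Toy usage: a 2-d representation from the pruned model and three teachers.
pruned_repr = [1.0, 0.0]
teacher_reprs = {
    "pretrained": [0.9, 0.1],   # plays the role of the PrC positive
    "snapshot": [1.0, 0.05],    # plays the role of the SnC positive
    "finetuned": [0.8, 0.2],    # plays the role of the FiC positive
}
other_examples = [[0.0, 1.0], [-1.0, 0.0]]  # representations of different inputs
loss = combined_contrastive_loss(pruned_repr, teacher_reprs, other_examples)
```

In a real training loop this term would presumably be added to the task loss and minimized with respect to the pruned model's parameters only, with the teachers kept frozen.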

Experimental Results

The efficacy of Contrastive Pruning is illustrated through extensive experiments on NLP tasks such as MNLI, QQP, and SQuAD. For instance, at extreme sparsity (97%), it maintains 99.2% and 96.3% of the dense BERT model's performance on QQP and MNLI, respectively. This demonstrates that the framework can substantially reduce model size while retaining most of the task performance.

Moreover, Contrastive Pruning consistently improved various pruning techniques, especially as model sparsity increased. This robustness across pruning strategies suggests that it is an effective enhancement to existing methods.
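
To make the sparsity levels concrete, the sketch below prunes a toy weight vector iteratively and keeps a copy of the weights at each sparsity level, which is the role snapshots play in the SnC module. It uses plain unstructured magnitude pruning, chosen here only for simplicity and not necessarily one of the techniques evaluated in the paper. The function names, the sparsity schedule, and the toy weights are hypothetical, and the fine-tuning that would normally happen between pruning steps is omitted.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy with the smallest-magnitude fraction `sparsity` set to zero."""
    n_prune = round(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def iterative_prune(weights, schedule):
    """Prune step by step through increasing sparsity levels.

    Returns the final weights and a list of (sparsity, weights) snapshots.
    A real pipeline would fine-tune the model between steps; that is omitted.
    """
    snapshots = []
    current = list(weights)
    for sparsity in schedule:
        current = magnitude_prune(current, sparsity)
        snapshots.append((sparsity, list(current)))
    return current, snapshots


# Toy usage: 100 nonzero weights with distinct magnitudes, pruned to 97% sparsity.
toy_weights = [(-1) ** i * (i + 1) / 100 for i in range(100)]
final_weights, snapshots = iterative_prune(toy_weights, [0.5, 0.8, 0.97])
remaining = sum(1 for w in final_weights if w != 0.0)  # 3 of 100 weights survive
```

At 97% sparsity only 3 of every 100 weights remain, which illustrates why retaining most of the dense model's accuracy at that level is a demanding target.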

Implications and Future Considerations

The proposed framework has both practical and theoretical implications:

- Practical Implications: The ability to compress PLMs without significantly sacrificing performance has direct benefits in deploying models in resource-constrained environments, such as mobile devices and embedded systems.

- Theoretical Implications: By integrating contrastive learning with pruning, this work offers insights into how knowledge is preserved within neural networks, highlighting the interplay between the different stages of a neural network's lifecycle (pre-training, fine-tuning, pruning).

Future research could explore the application of Contrastive Pruning to even larger models beyond BERT, such as GPT-3, where sparsity management is critical. Additionally, extending this approach to multilingual PLMs could reveal important nuances in language-specific pruning dynamics.

In conclusion, the paper introduces an innovative take on model pruning using a contrastive learning framework that addresses both task-agnostic and task-specific knowledge retention, marking a significant contribution to the field of model compression.