Post-Training Quantization for Vision Transformers: FQ-ViT Insights

The paper introduces FQ-ViT, a post-training quantization method tailored to Vision Transformers (ViTs). It targets the cost of ViT inference, which is high because ViTs typically have large parameter counts relative to their CNN counterparts. It also aims at full quantization, including LayerNorm and the attention Softmax, which are the hardest parts to quantize. The authors propose two techniques: the Power-of-Two Factor (PTF), which enables quantization of LayerNorm inputs, and Log-Int-Softmax (LIS), which enables quantization of attention maps.

Key Contributions

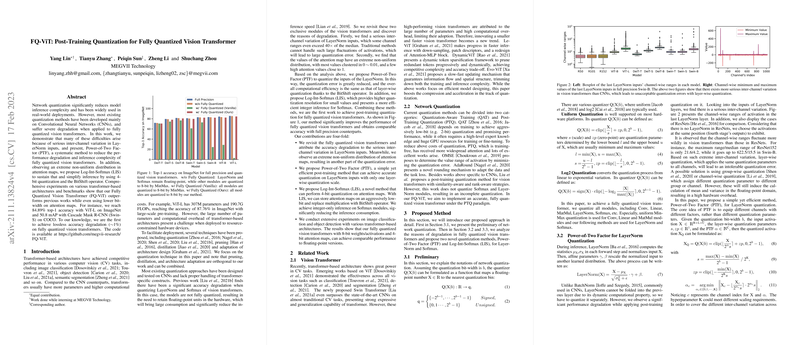

- Revisiting Quantization Obstacles: The research identifies two main obstacles to quantizing vision transformers: severe inter-channel variation in LayerNorm inputs, and an extremely non-uniform distribution of attention maps. Both inflate quantization error, which makes low-bit ViTs hard to deploy on resource-constrained devices.

- Power-of-Two Factor for LayerNorm: PTF addresses inter-channel variation. Every channel shares the layer-wise scaling factor but receives its own power-of-two factor. Channels with very different ranges are therefore quantized with different effective step sizes, which reduces quantization error. Because the factors are powers of two, they can be applied with BitShift operations at inference, so the computation keeps the efficiency of layer-wise quantization.

- Log-Int-Softmax for Efficient Attention Map Quantization: LIS handles Softmax quantization. It quantizes attention maps with a log2 quantizer, which suits their non-uniform distribution, and it computes the result with an integer-only logarithm. Attention maps can then be stored in 4 bits while accuracy stays competitive. Inference becomes integer-only, and multiplying the attention map by the values reduces to BitShift operations, which lowers inference cost.

- Comprehensive Performance Evaluation: The paper runs extensive experiments on ViT, DeiT, and Swin Transformer models, covering image classification on ImageNet and object detection on COCO. Fully quantized models lose only about 1% accuracy and outperform previous works. They also lower hardware cost through lower-bit-width storage and computation, including 4-bit attention maps.
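The PTF idea can be illustrated with a minimal pure-Python sketch. It is a simplification under stated assumptions and not the authors' implementation. It uses symmetric quantization with no zero point, and it picks each channel's exponent alpha_c by brute-force search over {0, ..., K} to minimize squared error. The helper names (`ptf_quantize`, `align`, `layer_norm`) and the toy data are hypothetical. The sketch shows two properties. First, per-channel power-of-two factors reduce quantization error compared with a single layer-wise scale. Second, after a left BitShift by alpha_c, every channel shares one scale s, and that scale cancels inside LayerNorm's normalization.

```python
import math
import random

# Hypothetical helpers for illustration; not the FQ-ViT reference code.
# Simplifications: symmetric quantization (no zero point), brute-force search for alpha_c.


def quantize(x, step, qmin, qmax):
    """Round x to the nearest multiple of step and clip to the signed integer range."""
    return max(qmin, min(qmax, round(x / step)))


def ptf_quantize(X, bits=8, K=3):
    """Quantize a [tokens][channels] activation with per-channel power-of-two factors.

    All channels share one base scale s; channel c uses step 2**alpha_c * s, where
    alpha_c in {0, ..., K} minimises that channel's squared reconstruction error.
    """
    qmax, qmin = 2 ** (bits - 1) - 1, -(2 ** (bits - 1))
    max_abs = max(abs(v) for row in X for v in row)
    if max_abs == 0:
        raise ValueError("all-zero activation")
    s = max_abs / qmax / 2 ** K  # alpha_c = K reproduces plain layer-wise quantization

    alphas = []
    for c in range(len(X[0])):
        column = [row[c] for row in X]

        def channel_error(a, column=column):
            step = 2 ** a * s
            return sum((v - quantize(v, step, qmin, qmax) * step) ** 2 for v in column)

        alphas.append(min(range(K + 1), key=channel_error))

    X_q = [[quantize(v, 2 ** a * s, qmin, qmax) for v, a in zip(row, alphas)] for row in X]
    return X_q, alphas, s


def align(X_q, alphas):
    """BitShift channel c left by alpha_c so every entry is an integer multiple of s."""
    return [[q << a for q, a in zip(row, alphas)] for row in X_q]


def layer_norm(row, eps=1e-5):
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + eps) for v in row]


def reconstruction_error(X, X_q, alphas, s):
    return sum((v - q * 2 ** a * s) ** 2
               for row, q_row in zip(X, X_q)
               for v, q, a in zip(row, q_row, alphas))


# Toy activation with strong inter-channel variation (made-up ranges).
random.seed(0)
ranges = [0.05, 0.2, 1.0, 4.0, 16.0]
X = [[random.uniform(-r, r) for r in ranges] for _ in range(32)]

BITS, K = 8, 3
X_q, alphas, s = ptf_quantize(X, bits=BITS, K=K)
X_hat = align(X_q, alphas)  # integers that all share the scale s

# Baseline: a single layer-wise step 2**K * s for every channel.
base_q = [[quantize(v, 2 ** K * s, -(2 ** (BITS - 1)), 2 ** (BITS - 1) - 1) for v in row]
          for row in X]
ptf_err = reconstruction_error(X, X_q, alphas, s)
layer_err = reconstruction_error(X, base_q, [K] * len(ranges), s)

# The shared scale s cancels inside LayerNorm (eps is rescaled by 1/s**2 so the
# integer-domain and dequantized computations are mathematically identical).
ln_int = [layer_norm(row, eps=1e-5 / s ** 2) for row in X_hat]
ln_deq = [layer_norm([s * v for v in row]) for row in X_hat]

print("alphas:", alphas)
print(f"squared error  PTF: {ptf_err:.4f}  layer-wise: {layer_err:.4f}")
```

Setting alpha_c = K for every channel recovers ordinary layer-wise quantization. In this sketch, the PTF error therefore never exceeds the layer-wise error. The gain comes from small-range channels such as the first one, which receive a smaller exponent and a finer step. The widest channel keeps alpha = K.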
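The LIS pipeline for a single attention row can be sketched in the same spirit. This is a hypothetical illustration and not the paper's code, and the function names and inputs are invented. The exponential is computed in floating point and rescaled to integers as a stand-in for an integer-only exp. The integer log2 rounding rule (compare r*r against 2^(2p+1)) is one exact integer-only rule chosen for this sketch, and the paper's own routine may differ. The sketch shows the structure: each attention probability is stored as a 4-bit code c meaning 2^(-c), and the product with an integer V then needs only shifts and additions.

```python
import math

# Hypothetical sketch of log2-quantized softmax plus shift-based attention x V.


def int_log2_round(r):
    """round(log2(r)) for an integer r >= 1, using integer operations only.

    p is the index of the most significant 1 bit; log2(r) rounds up to p + 1
    exactly when r >= sqrt(2) * 2**p, i.e. when r*r >= 2**(2p + 1).
    """
    p = r.bit_length() - 1
    return p + 1 if r * r >= 1 << (2 * p + 1) else p


def log_int_softmax(scores, bits=4, frac_bits=16):
    """Return log2 codes c_i (0 .. 2**bits - 1) with softmax(scores)[i] ~= 2**(-c_i)."""
    q_max = 2 ** bits - 1
    m = max(scores)
    # Stand-in for an integer-only exp: float exp rescaled to fixed-point integers.
    e = [round(math.exp(x - m) * 2 ** frac_bits) for x in scores]
    total = sum(e)
    codes = []
    for e_i in e:
        if e_i == 0:  # probability underflowed: use the smallest representable level
            codes.append(q_max)
            continue
        ratio = (total << frac_bits) // e_i  # fixed-point 1/p_i, always >= 2**frac_bits
        codes.append(min(q_max, int_log2_round(ratio) - frac_bits))
    return codes


def attend_shift(codes, V_q, bits=4):
    """sum_i 2**(-c_i) * V_q[i][j] using left shifts instead of multiplications.

    The result carries q_max fractional bits: divide by 2**q_max to dequantize.
    """
    q_max = 2 ** bits - 1
    return [sum(row[j] << (q_max - c) for row, c in zip(V_q, codes))
            for j in range(len(V_q[0]))]


# Made-up logits for one query and a made-up integer V (6 keys, 2 channels).
scores = [2.0, 0.5, -1.0, 3.1, 0.0, -4.0]
V_q = [[12, -7], [3, 5], [-20, 9], [8, 8], [0, -3], [15, 1]]

codes = log_int_softmax(scores)
approx = [a / 2 ** 15 for a in attend_shift(codes, V_q)]

# Float reference for comparison.
exps = [math.exp(x - max(scores)) for x in scores]
probs = [v / sum(exps) for v in exps]
exact = [sum(p * row[j] for p, row in zip(probs, V_q)) for j in range(2)]
print("codes:", codes)
print("shift-based:", approx, "float:", exact)
```

For these inputs the codes are [2, 4, 6, 1, 5, 11], which match round(-log2 p) clipped to 15. Adjacent 4-bit log2 levels differ by a factor of two. The toy output therefore differs noticeably from the float result. The roughly 1% accuracy figure reported for FQ-ViT comes from full-model evaluation, not from a single row like this one.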

Theoretical and Practical Implications

Conceptually, the work explains why normalization layers and attention maps resist quantization in transformer architectures and shows how to handle both. PTF and LIS keep model accuracy competitive while substantially lowering computational cost. This may encourage research into even more aggressive bit-width reduction.

Practically, because its quantization is near-lossless, FQ-ViT holds promise for industrial applications with strict resource constraints, such as real-time edge computing and mobile deployment. The integer-only computation enabled by PTF and LIS could also inform the design of future hardware accelerators built to exploit these efficiency gains.

Future Developments

This paper opens several avenues for future work. Fully quantized transformers could be combined with other optimization strategies, such as model pruning or neural architecture search (NAS), to shrink the model footprint further. Another direction is to test whether these techniques generalize to other Transformer architectures, such as those used in NLP tasks.

In conclusion, the paper provides an effective path to quantizing vision transformers, gaining efficiency at the cost of only about 1% accuracy. FQ-ViT makes deploying these models in computation-restricted environments increasingly feasible and broadens their applicability in computer vision.