Efficient Transformer for High-Resolution Image Restoration: A Formal Analysis of Restormer

The paper "Restormer: Efficient Transformer for High-Resolution Image Restoration" introduces a Transformer model designed for high-resolution image restoration. It proposes several architectural changes that make Transformers both effective and efficient for tasks such as image deraining, single-image motion deblurring, defocus deblurring, and image denoising.

Overview of Contributions

Core Innovations

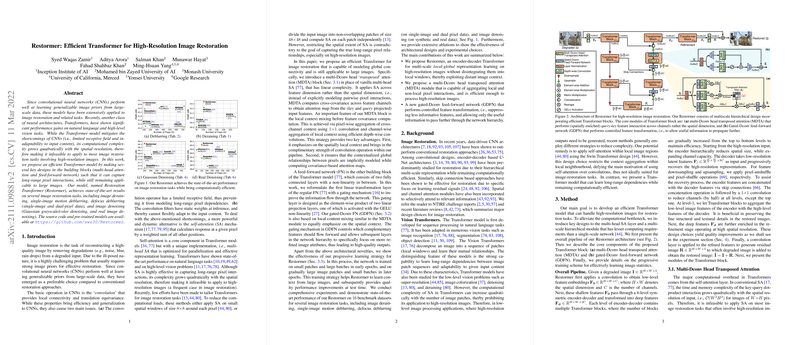

- Multi-Dconv Head Transposed Attention (MDTA): A central contribution of the paper is the MDTA module, an efficient self-attention mechanism. Standard self-attention has complexity quadratic in the number of pixels, which makes it infeasible for high-resolution images. MDTA instead computes attention across feature channels rather than spatial positions, so the attention map has size C × C for C channels and the cost grows linearly with spatial resolution.

- Gated-Dconv Feed-Forward Network (GDFN): The GDFN module adds a gating mechanism to the feed-forward network (FN) of the Transformer block. The gate is an element-wise product of two parallel projection paths, one of which passes through a GELU non-linearity, so that informative features propagate while less informative ones are suppressed. Depth-wise convolutions in both paths add local spatial context.

- Progressive Learning Strategy: To improve performance on full-resolution test images, the network is trained on progressively larger image patches as training advances. Small patches early on keep training cheap, while the larger patches used later expose the model to more global image statistics.
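The progressive learning strategy in the last item can be sketched as a lookup from training step to patch size. This is only an illustration: the function name `patch_size_at`, the milestone steps, and the patch and batch sizes are hypothetical values chosen for the example, not the schedule reported in the paper. The batch size shrinks as the patch grows so that the number of pixels per step stays roughly constant, which is an assumption about how such a schedule might be budgeted.

```python
from bisect import bisect_right

# Hypothetical schedule: (first step of the stage, patch size, batch size).
# None of these numbers are taken from the paper.
SCHEDULE = [
    (0, 128, 64),
    (1000, 192, 32),
    (2000, 256, 16),
    (3000, 384, 8),
]


def patch_size_at(step, schedule=SCHEDULE):
    """Return the (patch_size, batch_size) pair in effect at a training step."""
    if step < 0:
        raise ValueError("step must be non-negative")
    starts = [start for start, _, _ in schedule]
    # Index of the last stage whose starting step is <= step.
    idx = bisect_right(starts, step) - 1
    _, patch, batch = schedule[idx]
    return patch, batch
```

A training loop would call `patch_size_at(step)` before sampling each batch and crop training patches at the returned size.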

Methodological Implications

Efficiency and Performance

The proposed architectural modifications allow Restormer to excel in multiple aspects of image restoration while maintaining computational efficiency. Traditional CNN-based designs are proficient in modeling local image features but struggle with long-range dependencies due to their limited receptive fields. Conversely, conventional Transformers, while effective, are computationally prohibitive for high-resolution tasks. Restormer bridges this gap by offering a Transformer model that can handle high-resolution images without exorbitant computational costs.
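The cost argument can be made concrete by counting attention-map entries. The sketch below compares spatial self-attention, whose map has one entry per pair of pixels, with channel-wise attention, whose map has one entry per pair of channels within a head. The function names and the example sizes (a 256 × 256 feature map with 48 channels) are assumptions made for illustration.

```python
def spatial_attention_entries(height, width):
    """Entries in a pixel-by-pixel attention map: (H*W)^2."""
    n = height * width
    return n * n


def channel_attention_entries(channels, heads=1):
    """Entries in channel-by-channel attention maps, summed over heads."""
    per_head = channels // heads
    return heads * per_head * per_head


def channel_attention_macs(height, width, channels, heads=1):
    """Multiply-accumulates to form the channel attention maps.

    Each of the (C/heads)^2 entries per head is a dot product over H*W
    positions, so the total grows linearly with the number of pixels.
    """
    per_head = channels // heads
    return heads * per_head * per_head * height * width


# Example with assumed sizes: 256 x 256 pixels, 48 channels, one head.
print(spatial_attention_entries(256, 256))  # 4294967296
print(channel_attention_entries(48))        # 2304
```

Doubling the image height quadruples the spatial map but only doubles the work needed to build the channel map, whose size does not change at all.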

Numerical Results

The results reported in the paper are strong. For image deraining, Restormer improves on the state of the art by an average of 1.05 dB across the evaluated datasets. In single-image motion deblurring, it achieves PSNR gains of 0.47 dB over MIMO-UNet+ and 0.26 dB over MPRNet. For defocus deblurring, in both the single-image and dual-pixel settings, it surpasses the recent methods IFAN and Uformer by a notable margin. In Gaussian and real image denoising, Restormer sets new benchmarks, including PSNR above 40 dB on the SIDD dataset, reported as a first for any model in this domain.
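Because PSNR is logarithmic, it helps to translate the decibel gains above into changes in mean squared error. The sketch below uses the standard definition PSNR = 10 log10(MAX² / MSE); the helper names and the choice of a peak value of 1.0 (images scaled to [0, 1]) are assumptions for the example.

```python
import math


def psnr(mse, max_value=1.0):
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    if mse <= 0:
        raise ValueError("mse must be positive")
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Ratio MSE_new / MSE_old implied by a PSNR improvement of gain_db."""
    return 10.0 ** (-gain_db / 10.0)
```

Under this relation, a 1.05 dB gain corresponds to an MSE ratio of about 0.785, that is, roughly a fifth less squared error, if the dataset average is treated as a single-image figure (a simplification). A PSNR of 40 dB on images scaled to [0, 1] corresponds to an MSE of 1e-4.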

Theoretical Implications

Scalability of Transformer Models

MDTA shows that the scaling problem of Transformers in imaging stems from where attention is computed rather than from attention itself. By moving the attention computation from the spatial dimensions to the channel dimension, Restormer provides a blueprint for future models that aim to keep the strengths of Transformers while avoiding their quadratic cost in image size.
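A minimal NumPy sketch of attention over channels follows. It is not the authors' implementation: it takes Q, K and V as given (C, H, W) arrays and omits the 1 × 1 and depth-wise convolutions that would produce them, and the L2 normalisation of queries and keys and the fixed `temperature` argument are assumptions of this sketch.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def transposed_attention(q, k, v, heads=1, temperature=1.0):
    """Channel-wise attention on (C, H, W) arrays.

    Returns the attended features (C, H, W) and the attention maps
    (heads, C/heads, C/heads), whose size is independent of H and W.
    """
    c, h, w = q.shape
    d = c // heads
    # Flatten space and split channels into heads: (heads, d, H*W).
    q, k, v = [t.reshape(heads, d, h * w) for t in (q, k, v)]
    # Assumption of this sketch: L2-normalise each channel over space.
    q = q / (np.linalg.norm(q, axis=-1, keepdims=True) + 1e-12)
    k = k / (np.linalg.norm(k, axis=-1, keepdims=True) + 1e-12)
    # Channel-by-channel similarity, then softmax over key channels.
    attn = softmax(q @ k.transpose(0, 2, 1) * temperature, axis=-1)
    out = attn @ v  # (heads, d, H*W)
    return out.reshape(c, h, w), attn
```

Each output channel is a weighted mix of value channels, with weights shared across all pixels, which is why the attention map stays small no matter how large the image is.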

Enhancements in Feature Transformation

The GDFN's gating mechanism represents a significant step forward in dynamic feature processing within neural networks. Traditional FNs apply transformations uniformly across features, but the gating approach in GDFN introduces a capability for the network to prioritize and enhance relevant features dynamically, yielding superior image restoration results.
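The gating idea can be sketched in NumPy as two parallel paths, each a 1 × 1 convolution followed by a 3 × 3 depth-wise convolution, whose element-wise product is projected back and added to the input. This is a simplified illustration under stated assumptions: layer normalisation is omitted, GELU uses the tanh approximation, and all function and parameter names are hypothetical.

```python
import numpy as np


def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def pointwise_conv(x, weight):
    """1x1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def depthwise_conv3x3(x, kernel):
    """3x3 depth-wise convolution with zero padding; kernel is (C, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for i in range(3):
        for j in range(3):
            # Each channel uses only its own 3x3 filter.
            out += kernel[:, i, j, None, None] * padded[:, i:i + h, j:j + w]
    return out


def gated_dconv_ffn(x, w_in1, k1, w_in2, k2, w_out):
    """Gated feed-forward block with a residual connection (no layer norm)."""
    gate = gelu(depthwise_conv3x3(pointwise_conv(x, w_in1), k1))
    value = depthwise_conv3x3(pointwise_conv(x, w_in2), k2)
    # Element-wise gating: where the gate is near zero, the value is suppressed.
    return x + pointwise_conv(gate * value, w_out)
```

When the gate path outputs zeros, the block reduces to the identity through the residual connection, which is the sense in which the gate controls how much transformed signal passes through.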

Practical Implications

The robust performance across diverse image restoration tasks implies that Restormer can be employed in a variety of real-world applications, from professional photography and video editing to medical imaging and remote sensing. The balance of efficiency and performance also suggests that Restormer could be integrated into mobile and web-based applications where computational resources are limited.

Speculations on Future Developments

Given the promising results, future developments could explore more elaborate gating mechanisms or hybrid models that combine the strengths of both convolution and self-attention more intricately. Another avenue could be extending the progressive learning approach, potentially incorporating adaptive learning strategies that dynamically adjust patch sizes and learning rates based on intermediate performance metrics.

Conclusion

Restormer presents a notable advancement in the application of Transformers for high-resolution image restoration tasks. By introducing MDTA and GDFN, as well as employing progressive learning, the model achieves state-of-the-art results across multiple domains. This research opens up new possibilities for efficient, high-performance Transformers in image processing, suggesting several exciting future research directions.