Transparency of Deep Neural Networks for Medical Image Analysis

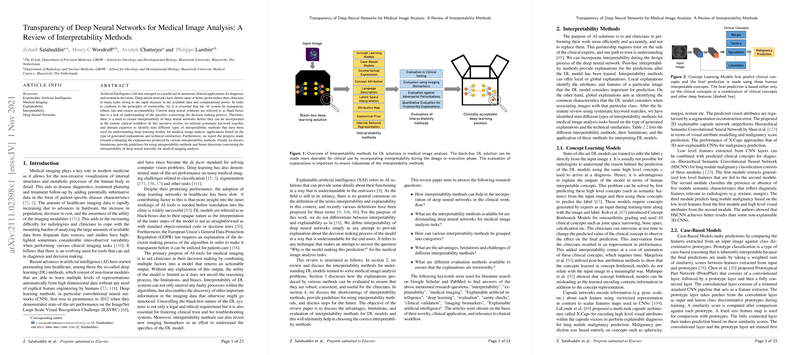

The paper "Transparency of Deep Neural Networks for Medical Image Analysis: A Review of Interpretability Methods" by Salahuddin et al. reviews interpretability methods for deep learning (DL) models applied to medical image analysis. As deep neural networks show promising results in medical imaging, transparency in these models becomes increasingly important. The paper groups existing interpretability techniques into nine categories, each with its own advantages and limitations, and offers insights for further research and application.

Interpretability Methods

The paper identifies and analyzes nine interpretability techniques, grouped based on explanation type and technical similarities:

- Concept Learning Models: These models base the diagnosis on human-interpretable concepts, which incurs additional annotation cost. They can improve performance when clinicians intervene on the learned concepts.

- Case-Based Models: Relying on class-discriminative prototypes, these models are inherently interpretable but are complex to train and not robust to noise.

- Counterfactual Explanation: By perturbing an image until the model produces the opposite classification, this method reveals the changes that most affect the decision, although generating realistic perturbations remains a challenge.

- Concept Attribution: This approach evaluates the influence of semantic features on predictions, yet faces challenges in reproducibility and concept annotation.

- Language Description: Offering textual justifications for model predictions, this method's utility often depends on the availability of structured reports.

- Latent Space Interpretation: Focused on visualizing compressed data representations, these methods encounter difficulties in retaining high-dimensional details.

- Attribution Map: This method highlights the regions of the input image that drive the model's decision, but it often does not specify how those regions contribute to the prediction.

- Anatomical Prior: This approach integrates structural information into the network design, which enhances interpretability but limits applicability to more general problems.

- Internal Network Representation: This method gives insight into the features detected by CNN filters, though it is generally less informative for medical images because of their complex patterns.
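To make the attribution-map idea concrete, the following is a minimal sketch of occlusion sensitivity, one simple perturbation-based way to build such a map. It is an illustration, not a method taken from the paper: `toy_lesion_score` is a hypothetical stand-in for a trained classifier, and the 4x4 image, the patch size, and the zero baseline are all assumptions chosen to keep the example small.

```python
from typing import Callable, List

Image = List[List[float]]


def toy_lesion_score(image: Image) -> float:
    """Hypothetical stand-in for a classifier: mean intensity of the central 2x2 region."""
    region = [image[r][c] for r in (1, 2) for c in (1, 2)]
    return sum(region) / len(region)


def occlusion_map(
    image: Image,
    score_fn: Callable[[Image], float],
    patch: int = 1,
    baseline: float = 0.0,
) -> Image:
    """Return a heatmap where each pixel holds the score drop caused by occluding its patch."""
    height, width = len(image), len(image[0])
    reference = score_fn(image)
    heatmap = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            # Copy the image and overwrite one patch with the baseline value.
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline
            # A large drop means the model relied on this patch.
            drop = reference - score_fn(occluded)
            for r in rows:
                for c in cols:
                    heatmap[r][c] = drop
    return heatmap


# Assumed toy input: a bright "lesion" in the centre of a dark background.
image = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.9, 0.8, 0.1],
    [0.1, 0.7, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
heatmap = occlusion_map(image, toy_lesion_score)
for row in heatmap:
    print([round(value, 3) for value in row])
```

The map is non-zero only where the toy model actually looks, which also illustrates the limitation noted above: the heatmap shows where the evidence is, not how the model uses it.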

Evaluation Methods and Challenges

Evaluating interpretability in medical imaging demands rigorous approaches due to its intricate clinical nature. The paper emphasizes various evaluation criteria:

- Clinical Evaluation: Application-grounded tests, including expert assessments, are vital to ensure explanations are practical and unbiased.

- Imaging Biomarkers: Correlating model explanations with known biomarkers helps validate clinical relevance, promoting trust in DL applications.

- Adversarial Perturbations: Checking how explanations change under adversarial perturbations of the input tests their robustness and helps establish their reliability.

- Quantitative Metrics: Methods like AOPC (area over the perturbation curve) and TCAV (testing with concept activation vectors) provide a framework for quantitatively assessing explanation validity, which is critical for trustworthy interpretability.
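As an illustration of the quantitative metrics mentioned above, here is a minimal sketch of AOPC for a single input, assuming the common most-relevant-first formulation: features are removed in order of decreasing relevance and the resulting score drops are averaged over the steps (including step 0, which contributes zero). The linear `toy_score` model, its weights, and the zero baseline used for removal are hypothetical choices for this example; in practice the value is also averaged over a dataset.

```python
from typing import Callable, List, Sequence


def aopc(
    pixels: Sequence[float],
    relevance: Sequence[float],
    score_fn: Callable[[Sequence[float]], float],
    steps: int,
    baseline: float = 0.0,
) -> float:
    """AOPC for one input, removing one pixel per step, most relevant first."""
    if steps > len(pixels):
        raise ValueError("steps cannot exceed the number of pixels")
    # Pixel indices sorted by decreasing relevance.
    order = sorted(range(len(pixels)), key=lambda i: relevance[i], reverse=True)
    perturbed: List[float] = list(pixels)
    reference = score_fn(perturbed)
    total_drop = 0.0  # step k = 0 (no removal) contributes a drop of zero
    for k in range(1, steps + 1):
        perturbed[order[k - 1]] = baseline
        total_drop += reference - score_fn(perturbed)
    return total_drop / (steps + 1)


# Hypothetical linear model: the true importance of each pixel is its weight.
WEIGHTS = [0.5, 0.3, 0.2, 0.0]


def toy_score(pixels: Sequence[float]) -> float:
    return sum(w * p for w, p in zip(WEIGHTS, pixels))


pixels = [1.0, 1.0, 1.0, 1.0]
faithful = aopc(pixels, relevance=WEIGHTS, score_fn=toy_score, steps=3)
unfaithful = aopc(pixels, relevance=WEIGHTS[::-1], score_fn=toy_score, steps=3)
print(f"faithful explanation:   {faithful:.3f}")
print(f"unfaithful explanation: {unfaithful:.3f}")
```

An explanation that ranks the truly important pixels first makes the score fall faster and therefore earns a higher AOPC than one that ranks them last, which is what makes the metric usable for comparing attribution methods.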

Theoretical and Practical Implications

The review highlights the importance of interpretability for meeting ethical, legal, and practical requirements in clinical settings. As DL models advance in medical domains, fostering trust through transparent decision-making becomes essential. Identifying potentially new imaging biomarkers through interpretability methods offers substantial clinical value, but such findings need further exploration and cross-validation with multi-modal data. The inherent trade-off between performance and interpretability also demands attention and should guide future research and development toward a better balance between the two.

Conclusion

The analyzed methods and their evaluations illustrate the evolving landscape of interpretability in DL models for medical imaging. The paper calls for integrating interpretability at the model design stage rather than relying solely on post-hoc explanations. As methodologies mature, employing multi-modal data and human-in-the-loop evaluations will likely enhance both model performance and interpretability, paving the way for trustworthy and clinically deployable AI solutions.