An Expert Review of BitFit: Simple Parameter-efficient Fine-tuning for Transformer-based Masked Language-models

"BitFit: Simple Parameter-efficient Fine-tuning for Transformer-based Masked LLMs" by Ben-Zaken et al. proposes a sparsity-driven method to fine-tune large pre-trained BERT-like LLMs. The rapid and extensive use of transformer-based models, despite their excellent performance in a range of NLP tasks, comes with heavy computational and memory costs when fine-tuning. The paper introduces BitFit, a method that modifies only the bias terms of a model rather than optimizing all the parameters, thus retaining task performance while reducing the computational footprint.

Key Contributions

The primary contribution lies in demonstrating that fine-tuning exclusively the bias terms of the model, or even a subset of them, can achieve competitive, and in some cases superior, performance compared to traditional fine-tuning methods. Through a series of rigorous experiments, the paper suggests that this bias-only adjustment primarily serves to "expose" existing knowledge in pre-trained models rather than to learn new task-specific information.
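The mechanics of bias-only tuning can be illustrated with a toy example. The sketch below is hypothetical and is not the paper's code: a small linear layer y = Wx + b stands in for a pre-trained model, W stays frozen, and gradient descent on a mean-squared-error loss updates only b. The toy task is deliberately constructed so that a bias shift suffices, so it illustrates the update rule rather than the paper's empirical finding.

```python
# Toy bias-only fine-tuning: W is frozen, only b is updated.
# The model, data and hyperparameters are hypothetical, for illustration only.

def forward(W, b, x):
    """Compute y = W x + b for one input vector x."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi
            for row, bi in zip(W, b)]

def bias_only_finetune(W, b, data, lr=0.1, steps=200):
    """Minimise mean squared error over `data`, updating b but never W."""
    b = list(b)  # copy so the caller's bias vector is not modified
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(b)
        for x, y in data:
            pred = forward(W, b, x)
            for i in range(len(b)):
                # Per-example gradient of (pred_i - y_i)^2 / n with respect to b_i.
                grad[i] += 2.0 * (pred[i] - y[i]) / n
        b = [bi - lr * gi for bi, gi in zip(b, grad)]
    return b

# Frozen "pre-trained" weights and the starting bias.
W = [[1.0, 2.0], [-1.0, 0.5]]
b0 = [0.0, 0.0]

# Targets come from the same W with a shifted bias, so a bias-only
# update is enough to fit this toy task exactly.
target_b = [0.5, -1.0]
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
data = [(x, forward(W, target_b, x)) for x in inputs]

b_tuned = bias_only_finetune(W, b0, data)
print([round(v, 3) for v in b_tuned])  # approximately [0.5, -1.0]
```

Only `b` is ever reassigned inside the training loop; `W` is read but never written, which is the defining property of the bias-only setup.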

Methodology

BitFit operates under several principles designed to address the limitations of full-model fine-tuning:

- Parameter Efficiency: The method tunes only the bias terms of the model, drastically reducing the number of trainable parameters. For instance, in BERT-base and BERT-large the bias parameters represent a mere 0.09% and 0.08% of the model's total parameters, respectively.

- Task Invariance: The same set of parameters (the bias terms) is adjusted regardless of the task, which makes the approach easier to deploy in memory-constrained environments: one shared model can be kept, with only a small, identically shaped set of bias values stored per task.

- Localized Changes: The adjustments are confined to a small, fixed subset of the parameter space. The weight matrices stay frozen, so no weight gradients or optimizer state need to be kept for them, which reduces the memory required for training.
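The percentages quoted for BERT-base and BERT-large can be checked with back-of-the-envelope arithmetic. The sketch below assumes the standard BERT layout (a 30,522-token WordPiece vocabulary, 512 positions, 2 token types, a feed-forward size of four times the hidden size, and a pooler layer) and counts LayerNorm biases as bias terms; the paper's exact counting convention may differ slightly.

```python
# Rough bias-parameter count for a BERT-style encoder, assuming the
# standard BERT layout described in the lead-in.

def bert_param_counts(hidden, layers, vocab=30522, max_pos=512, type_vocab=2):
    """Return (total_params, bias_params) for a BERT-style encoder."""
    inter = 4 * hidden  # feed-forward (intermediate) size

    # Embeddings: word, position and token-type tables plus one LayerNorm.
    emb_total = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    emb_bias = hidden  # the LayerNorm bias

    # One transformer layer.
    attn = 4 * (hidden * hidden + hidden)  # Q, K, V, output projections
    ffn = (hidden * inter + inter) + (inter * hidden + hidden)
    norms = 2 * 2 * hidden                 # two LayerNorms, gain + bias each
    layer_total = attn + ffn + norms
    # Biases: Q/K/V/output, intermediate, FFN output, two LayerNorms.
    layer_bias = 4 * hidden + inter + hidden + 2 * hidden

    # Pooler: one dense layer on the [CLS] representation.
    pooler_total = hidden * hidden + hidden
    pooler_bias = hidden

    total = emb_total + layers * layer_total + pooler_total
    bias = emb_bias + layers * layer_bias + pooler_bias
    return total, bias

for name, hidden, layers in [("BERT-base", 768, 12), ("BERT-large", 1024, 24)]:
    total, bias = bert_param_counts(hidden, layers)
    print(f"{name}: {bias:,} of {total:,} parameters are biases "
          f"({100 * bias / total:.2f}%)")
```

Under these assumptions it reports 0.09% for BERT-base and 0.08% for BERT-large, in line with the figures above.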

The paper also explores an even smaller variant that fine-tunes only the bias terms of the query projections in self-attention and of the second MLP layer in each transformer block.
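Choosing which parameters to train, in either the full or the reduced variant, amounts to filtering parameters by name. This sketch assumes Hugging Face `transformers`-style BERT parameter names and reads the "second MLP layer" as the feed-forward expansion layer (`intermediate.dense` in that naming); both are assumptions of the sketch, not details fixed by the paper. The helper `layer_param_names` is hypothetical and only builds an illustrative name list, and a task-specific classification head is ignored.

```python
# Deciding which parameters a BitFit-style run would unfreeze, by name.
# Parameter names follow an assumed Hugging Face-style BERT convention.

QUERY_BIAS = ".attention.self.query.bias"
INTERMEDIATE_BIAS = ".intermediate.dense.bias"

def is_trainable(name, subset=False):
    """Full variant: every bias. Subset: query and intermediate MLP biases."""
    if subset:
        return name.endswith(QUERY_BIAS) or name.endswith(INTERMEDIATE_BIAS)
    return name.endswith(".bias")

def layer_param_names(i):
    """Hypothetical helper: weight and bias names for encoder layer i."""
    prefix = f"encoder.layer.{i}."
    modules = [
        "attention.self.query", "attention.self.key", "attention.self.value",
        "attention.output.dense", "attention.output.LayerNorm",
        "intermediate.dense", "output.dense", "output.LayerNorm",
    ]
    return [prefix + m + s for m in modules for s in (".weight", ".bias")]

names = [n for i in range(12) for n in layer_param_names(i)]
full = [n for n in names if is_trainable(n)]
sub = [n for n in names if is_trainable(n, subset=True)]
print(len(names), len(full), len(sub))
```

With PyTorch, the same predicate could drive a loop such as `for name, p in model.named_parameters(): p.requires_grad = is_trainable(name)`, leaving every other tensor frozen.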

Experimental Results

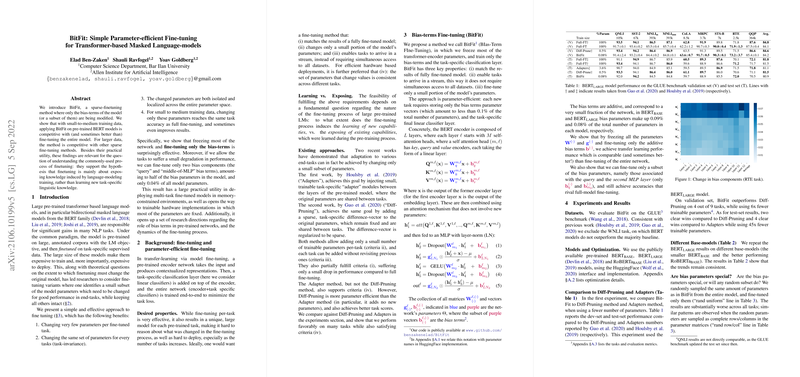

The efficacy of BitFit was validated on several tasks from the GLUE benchmark, including QNLI, SST-2 and MNLI. On the validation sets, BitFit outperformed Diff-Pruning on 4 out of 9 tasks while requiring significantly fewer trainable parameters. On the test sets, BitFit also achieved clear wins over both Diff-Pruning and Adapters.

- Performance Metrics: The validation results showed only marginal differences between full fine-tuning and BitFit in both accuracy and correlation metrics; for example, BitFit reaches a 91.4% mean accuracy on QNLI compared with 91.7% for full fine-tuning.

Theoretical Implications

The success of BitFit raises important theoretical questions about the dynamics of fine-tuning in large models. In particular, the observation that adjusting bias terms alone can achieve high performance lends credence to the hypothesis that fine-tuning a pre-trained model is more about exposing knowledge it has already learned than about acquiring new task-specific features. Moreover, the striking effectiveness of bias terms in this setting calls for further investigation into their role within neural network architectures.

Practical Implications and Future Directions

From a practical standpoint, BitFit holds significant potential for deploying multi-task models in constrained environments, such as edge devices or specialized hardware. Because only a tiny fraction of the parameters changes per task, a single shared backbone can serve many tasks, each stored as a small set of bias vectors, and during training optimizer state is needed only for those biases.
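The deployment argument can be sketched as one frozen weight matrix shared by all tasks plus a small dictionary of per-task bias vectors selected at inference time. The `SharedLinear` class and the task names are hypothetical illustrations, not part of the paper; a real model would swap biases in every layer rather than in a single linear map.

```python
# One frozen weight matrix shared across tasks; each task owns only its biases.
# Class name, task names and numbers are hypothetical.

from typing import Dict, List

Matrix = List[List[float]]
Vector = List[float]

class SharedLinear:
    """A frozen linear layer whose bias vector is chosen per task."""

    def __init__(self, weight: Matrix, task_biases: Dict[str, Vector]):
        self.weight = weight            # shared by every task, never updated
        self.task_biases = task_biases  # the only task-specific state

    def __call__(self, x: Vector, task: str) -> Vector:
        b = self.task_biases[task]
        return [sum(w * xj for w, xj in zip(row, x)) + bi
                for row, bi in zip(self.weight, b)]

layer = SharedLinear(
    weight=[[1.0, 0.0], [0.0, 1.0]],
    task_biases={"sentiment": [0.1, -0.1], "nli": [-0.5, 0.5]},
)
print(layer([1.0, 2.0], "sentiment"))
print(layer([1.0, 2.0], "nli"))
```

Adding a task means adding one entry to `task_biases`; the weights are loaded once. For BERT-base, the per-task state would be roughly the 0.09% of parameters that are biases.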

Future Work: There are three primary pathways for future research that this work illuminates:

- Exploration in Other Models: Applying and validating the BitFit methodology on other transformer-based architectures like GPT-3 or T5 might yield further insights.

- Analyzing Bias Parameters: A more in-depth analysis could explain why bias parameters play such a crucial role and whether other inherent properties of the model can similarly streamline fine-tuning.

- Scalability and Robustness: Investigating how BitFit can be extended to larger datasets and more complex tasks or integrated with other sparse fine-tuning techniques for enhanced performance.

Conclusion

Ben-Zaken et al.'s research on BitFit is a significant step toward more efficient fine-tuning methods in NLP. By tuning only the bias terms, the approach comes close to or matches full fine-tuning on most of the evaluated tasks and opens the door to deploying strong models on resource-constrained devices. The findings also challenge existing assumptions about fine-tuning, calling for a reevaluation of how pre-trained models are adapted to new tasks.