Insights into Adversarial Robustness and Flat Minima in Neural Networks

The paper titled "Relating Adversarially Robust Generalization to Flat Minima" presents a comprehensive analysis of the relationship between adversarial robustness and the flatness of loss landscapes in neural networks. The authors seek to address the challenge of robust overfitting in adversarial training (AT), where the robust performance on test examples deteriorates while it continues to improve on training examples.

Key Contributions

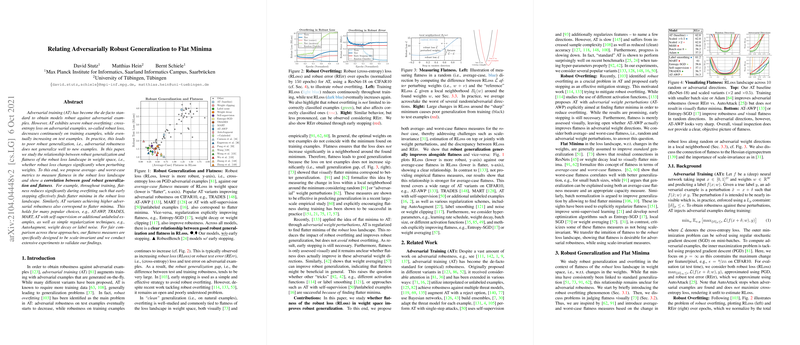

- Evaluation of Robust Overfitting: The paper begins by detailing the issue inherent in adversarial training known as robust overfitting. Despite improvements on training examples, test performance often degrades significantly past certain training epochs, attributed to increasing sharpness in the loss landscape.

- Flatness as a Generalization Quality Marker: The core thesis is that the smoothness or flatness of the robust loss landscape correlates strongly with better robust generalization. The paper emphasizes both average- and worst-case flatness metrics to objectively quantify this flatness.

- Scale-Invariant Flatness Measures: In addressing the challenge of measuring flatness objectively, the authors propose scale-invariant metrics, crucial for fair comparisons across different models. This scale invariance is achieved through relative layer-wise perturbations—a method ensuring measurements are not distorted by differing parameter scales.

- Correlation Between Flatness and Robustness: Empirical results demonstrate a strong correlation between average-case flatness in the adversarial loss landscape and robust generalization, indicating that flatter minima are generally more robust. This relationship persists across a variety of adversarial training variants and regularization strategies, including TRADES, AT-AWP, and self-supervised learning techniques.

- Role of Early Stopping: The results validate the effectiveness of early stopping in identifying flatter and more robust solutions in the training landscape. By halting training at a specific point, networks tend to generalize better, minimizing the disparity between training and test losses.

Practical Implications

These findings align with the thread of research pointing towards the exploration of flat minima as a criterion for enhancing model robustness. The implications are profound for the deployment of neural networks in critical applications, where the cost of misclassification due to adversarial examples can be high.

- Optimizing Training Protocols: Understanding the role of flatness can guide the design of more effective adversarial training protocols and regularization techniques, potentially mitigating the often-encountered trade-off between clean accuracy and robustness.

- Influence on Network Architectures: This research may inform the development of novel architectures inherently biased towards flat loss landscapes, providing robust features intrinsically rather than through exhaustive data augmentation.

Theoretical and Future Questions

Theoretical insights might focus on examining why and how flat minima contribute to adversarial robustness. This understanding may lead to advances in non-uniform architectures that exploit energy-efficient training dynamics without sacrificing robustness.

Looking forward, further exploration could delve into the dynamics between robustness and architecture scaling, incorporating advances in network sparsity and compression. There is also scope for extending this work into other domains of machine learning beyond image classifications, such as time-series analysis and natural language processing, to verify the general applicability of the flatness hypothesis across diverse data modalities.

In conclusion, this paper adds valuable insights into the characterization of adversarial training through the lens of flatness, providing both empirical data and theoretical constructs to guide future advancements in robust AI systems.