Few-Shot Text Generation with Natural Language Instructions: A Critical Overview

The paper "Few-Shot Text Generation with Natural Language Instructions" by Timo Schick and Hinrich Schütze presents an innovative method, genPet, designed to enhance the data efficiency of text generation tasks using large pretrained LLMs. It primarily focuses on addressing challenges in adapting pretrained models for tasks such as summarization and headline generation with limited data availability, known as few-shot learning settings.

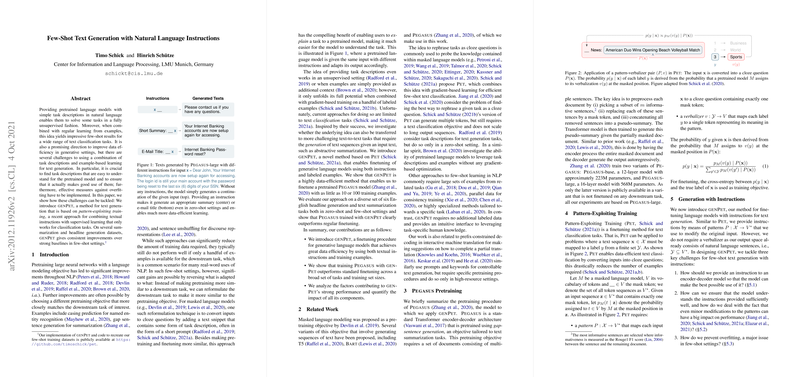

The authors introduce genPet (Pattern-Exploiting Training for Generation), which extends upon the existing Pet framework that only applied to classification tasks. This paper evaluates the potential of combining natural language instructions with a handful of examples to improve the generative capabilities of models like Pegasus, a generative model known for its capacity to handle summarization tasks effectively.

Key Contributions and Methodologies

- Pattern and Decoder Prefix Integration: The paper demonstrates that providing models with task-specific instructions can significantly leverage their generative capabilities. genPet uses patterns (P) and decoder prefixes (d) to encode task instruction into the input structure, effectively guiding the model on what kind of generation is expected. This method contrasts with simply finetuning models using tiny datasets, which can lead to overfitting or ineffective outputs.

- Handling Instruction Comprehension: Recognizing the variability in model performance based on instruction comprehension, genPet employs a multi-pattern approach. By training the model on several patterns simultaneously and subsequently distilling this knowledge into a single model, genPet mitigates performance variance due to instruction quality.

- Preventing Overfitting: Two novel strategies are introduced to reduce overfitting—a major challenge in few-shot settings. First, the unsupervised scoring method assesses sequence likelihood based on a generic pretrained model, ensuring that generated sequences are realistic and diverse. Second, joint training across different patterns acts as a regularizing force, enhancing the robustness of genPet.

Empirical Validation

The authors validate their approach on multiple datasets, including AESLC, Gigaword, and XSum, demonstrating that genPet consistently outperforms the standard finetuning approaches of Pegasus in few-shot settings. Notably, genPet achieves higher Rouge scores compared to conventional approaches across various tasks and settings. This performance gap illustrates not just the efficacy of incorporating natural language instructions but also highlights the increased data efficiency achieved through genPet.

Implications and Future Directions

The paper sheds light on the pivotal role instructions can play in shaping the output of generative models. The insights gleaned here could propel further research into adaptive instruction-based model training, particularly for scenarios where data scarcity is a substantial bottleneck. Moreover, genPet's methodology of using multiple instructions and joint training could inspire new frameworks in domain adaptation and transfer learning.

One potential future research avenue involves refining the pattern selection process, possibly through automated means, to further enhance instruction quality and task adaptability. Additionally, exploring the integration of genPet with other pretraining and finetuning frameworks could uncover synergistic benefits, advancing real-world applications of few-shot text generation.

In conclusion, Schick and Schütze's work represents a substantial step forward in few-shot learning, demonstrating that the efficient use of task instructions can significantly optimize the performance of generative LLMs. Their proposed genPet framework holds promise not only for improving headline generation and summarization tasks but also for broadening the horizons of what can be achieved with minimal annotated data.