Exploiting Cloze Questions for Few Shot Text Classification and Natural Language Inference

The paper "Exploiting Cloze Questions for Few Shot Text Classification and Natural Language Inference" by Timo Schick and Hinrich Schütze introduces Pattern-Exploiting Training (Pet) and its iterative variant (iPet). These methods address the challenge of performance degradation in NLP tasks when limited labeled data is available, a common issue in few-shot learning contexts.

Key Contributions

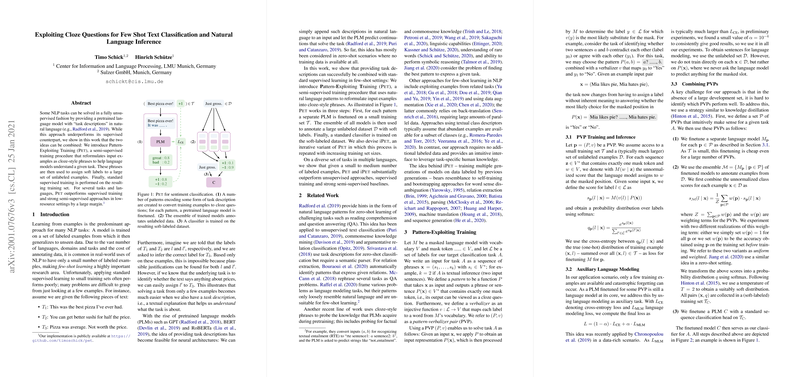

Pet leverages pretrained LLMs (PLMs) by combining task-specific supervised learning with natural language patterns that reformulate input texts into cloze-style questions. This approach aids the models in understanding the tasks through soft-label assignments to unlabeled examples and subsequent standard supervised training. The paper presents a detailed description of Pet, including:

- Pattern-Verbalizer Pairs (PVPs): Pet uses PVPs wherein a pattern takes input sequences and reformulates them into cloze-style questions, and a verbalizer maps task labels to words in the PLM’s vocabulary. This allows the model to predict labels based on the most likely completion of the cloze question.

- Training Pipeline: Pet executes three major steps:

- Pattern Fine-tuning: Each pattern is used to fine-tune a separate instance of the PLM on a small labeled set .

- Soft Labeling: The ensemble of fine-tuned models assigns soft labels to a large unlabeled dataset .

- Classifier Training: A final classifier is trained on this soft-labeled dataset.

- iPet: An iterative extension of Pet, iPet, grows the labeled dataset gradually by repeatedly finetuning models on increasingly large training sets that are soft-labeled by previous generations of models.

Experimental Results

The paper evaluates Pet on several NLP tasks including sentiment analysis, news classification, QA classification, and NLI across datasets such as Yelp Reviews, AG’s News, Yahoo Questions, and MNLI. The models used are RoBERTa (large) and XLM-R for multilingual capabilities. Significant findings include:

- Few-Shot Scenarios: Pet significantly outperforms standard supervised training in few-shot scenarios, especially evident when fewer than 100 examples per label are available. For instance, on Yelp with , Pet achieves an accuracy of 52.9 compared to the baseline’s 21.1.

- Iterative Gains: iPet further boosts performance over Pet by iteratively refining the labeled dataset. Notably, in zero-shot learning scenarios, iPet shows substantial improvements over unsupervised approaches.

- Cross-lingual Applicability: Applying Pet to x-stance, a multilingual stance detection dataset, demonstrates its robustness across languages, leading to considerable performance enhancements in both in-domain and cross-lingual settings.

Implications and Future Work

Practical implications of this research are extensive:

- Optimized Resource Utilization: Pet's ability to capitalize on limited labeled data presents significant cost-saving opportunities, particularly in domains where data annotation is expensive.

- Consistent Performance: The iterative nature of iPet ensures that models continuously improve, making it suitable for dynamic or evolving datasets.

Theoretically, this work underscores the importance of integrating human-like task understanding through cloze-style reformulations, offering insights into hybrid methodologies that blend pattern recognition with deep learning.

Future developments may include:

- Automated Pattern and Verbalizer Discovery: Facilitating automatic identification of effective patterns and verbalizers to minimize manual efforts.

- Expanding Multilingual Support: Further exploration into transferring the framework to a wider array of languages, particularly those with less pretraining resources.

- Enhanced Model Interpretability: Investigating how task descriptions and pattern-based reformulations can lead to more interpretable NLP models.

Overall, the methodologies showcased by Schick and Schütze signify a noteworthy advancement in semi-supervised learning, making substantive contributions to the efficiency and effectiveness of low-resource NLP applications.