Fighting an Infodemic: COVID-19 Fake News Dataset

The paper "Fighting an Infodemic: COVID-19 Fake News Dataset" addresses a significant challenge posed by the rampant spread of misinformation on social media during the COVID-19 pandemic. The authors tackle the issue by presenting a manually curated dataset of social media posts and articles that dichotomize content as either real or fake news related to COVID-19. Through this initiative, the researchers aim to facilitate the advancement of fake news detection methodologies, specifically in the context of the ongoing "infodemic."

Dataset Composition and Development

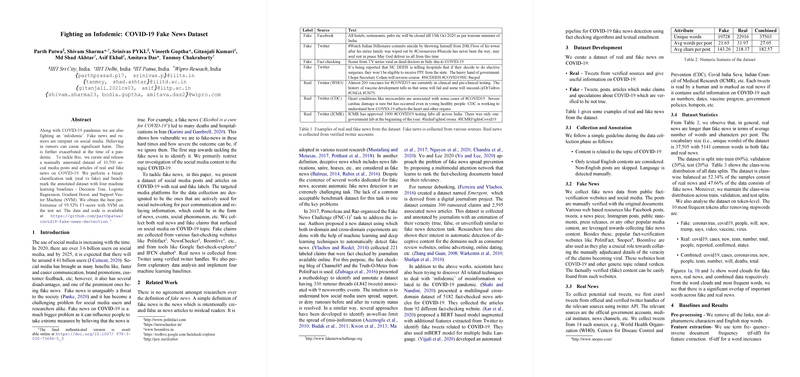

The dataset comprises 10,700 instances of social media content, with a deliberate focus on topical relevance to COVID-19. The foundation of the dataset rests on annotated examples, distinguished into two categories:

- Real News: Verified information sourced from credible and authoritative entities, often originating from verified Twitter accounts of organizations like the World Health Organization (WHO) and the Centers for Disease Control and Prevention (CDC).

- Fake News: Claims and narratives identified as misleading or false, which were cross-validated against reputable fact-checking platforms such as Politifact and Snopes.

To ensure quality and reliability, the dataset was curated under specific guidelines, limiting the content to textual, English-language posts pertinent to COVID-19. The structural analysis revealed that real news items tend to be more verbose than fake news, underscoring differences in linguistic characteristics between genuine and deceptive narratives.

Methodology and Baseline Evaluations

The authors of this paper employed a set of classical machine learning algorithms as baseline models for the binary classification task of discriminating between real and fake news. The models tested included Decision Trees (DT), Logistic Regression (LR), Support Vector Machines (SVM), and Gradient Boost (GDBT).

The benchmark results highlighted SVM as the most effective model, achieving an F1-score of 93.32% on the test set, thereby outperforming the other models, notably Logistic Regression with an F1-score of 91.96%. The confusion matrices indicated balanced performance across the categorized labels, demonstrating the dataset's suitability for robust binary classification.

Implications and Future Directions

The release of this dataset has several important implications:

- Practical Application: The dataset serves as a valuable resource for the development of automated tools and algorithms aimed at identifying and mitigating fake news, particularly in health-related crises where misinformation can have severe societal impacts.

- Benchmarking Standard: By providing benchmark results, the paper establishes a baseline for future studies that seek to improve upon current methodologies with more advanced or novel approaches in machine learning and natural language processing.

- Cross-Domain Utility: While focused on COVID-19, the framework used to create this dataset can be adapted to other contexts of misinformation, enabling broader applications in combating fake news beyond the pandemic.

The authors highlight potential areas for future exploration, such as the incorporation of deep learning models, which may leverage the contextual intricacies of language more effectively than traditional machine learning techniques. Additionally, expanding the dataset to include multilingual and cross-cultural data could enhance the robustness of fake news detection systems globally.

Overall, this paper presents a rigorously developed dataset that underpins the scientific community’s efforts to devise systematic solutions for the pervasive issue of misinformation during crises.