AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts

Overview

The paper "AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts" presents an approach for automatically creating prompts to evaluate the knowledge contained in pretrained language models (LMs), particularly masked language models (MLMs) such as BERT and RoBERTa. The automated method addresses the limitations of manually crafted prompts, which are labor-intensive to write and often less effective.

Key Contributions and Methods

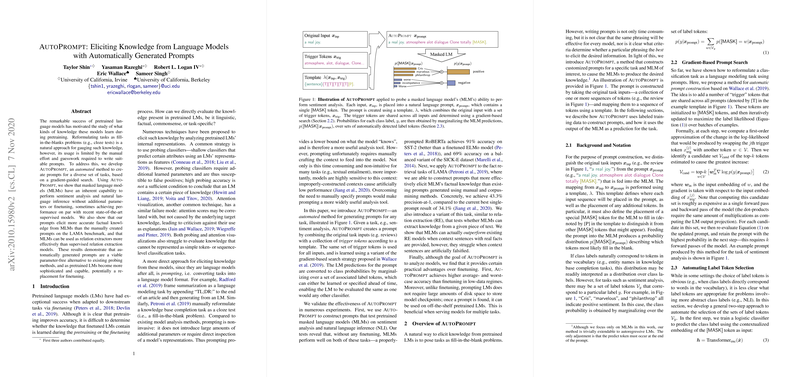

The authors present AutoPrompt, which uses a gradient-guided search to find trigger tokens that, combined with the task input and a masked token, form an effective prompt for the target MLM. The method recasts traditional classification tasks as cloze-style (fill-in-the-blank) tasks, so knowledge can be elicited without additional training or any change to the model parameters.
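The cloze reformulation can be illustrated with a minimal sketch. It assumes a template of the form "input, trigger tokens, mask" and scores each class by summing the probability the MLM assigns at the masked position to that class's label tokens. The helper names (`build_prompt`, `class_scores`), the placeholder triggers, and the probability values are all made up for illustration; no real model is called.

```python
def build_prompt(sentence, triggers, mask_token="[MASK]"):
    """Fill the template '{sentence} [T] ... [T] [P]' with concrete tokens."""
    return " ".join([sentence, *triggers, mask_token])


def class_scores(mask_token_probs, label_tokens):
    """Score each class by summing the masked-position probability of its label tokens."""
    return {
        label: sum(mask_token_probs.get(tok, 0.0) for tok in tokens)
        for label, tokens in label_tokens.items()
    }


# Placeholder triggers; a real run would use tokens found by the search.
prompt = build_prompt("a real joy.", ["trig1", "trig2", "trig3"])

# Made-up distribution over the masked position, standing in for MLM output.
toy_probs = {"good": 0.3, "great": 0.2, "bad": 0.1}
toy_labels = {"positive": ["good", "great"], "negative": ["bad", "awful"]}

scores = class_scores(toy_probs, toy_labels)
prediction = max(scores, key=scores.get)
print(prompt)
print(prediction)
```

Because the prediction is read directly from the MLM's distribution over the masked token, nothing in the model itself is trained or modified; only the trigger tokens and label tokens vary between tasks.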

Gradient-Guided Prompt Search

AutoPrompt refines the prompt iteratively. The trigger tokens are initialized to mask tokens. At each step, the gradient of the label likelihood with respect to a trigger token's input embedding is used to shortlist candidate replacement tokens, and the replacement that most increases the target label probability is kept.
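One step of this search can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: the embeddings, the gradient vector, and the `toy_log_likelihood` function are invented stand-ins for quantities that would come from a real MLM. Candidates are shortlisted by the dot product of each token's input embedding with the gradient (a first-order estimate of how much swapping in that token would raise the label likelihood), and each shortlisted token is then re-scored on the full prompt.

```python
def top_k_candidates(embeddings, grad, k):
    """Rank vocabulary tokens by the first-order estimate (embedding . gradient)."""
    scores = {
        tok: sum(e * g for e, g in zip(vec, grad))
        for tok, vec in embeddings.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]


def update_trigger(triggers, position, candidates, log_likelihood):
    """Try each candidate at `position`; keep the best, or the current token if none helps."""
    best, best_score = list(triggers), log_likelihood(triggers)
    for tok in candidates:
        trial = list(triggers)
        trial[position] = tok
        score = log_likelihood(trial)
        if score > best_score:
            best, best_score = trial, score
    return best


# Hypothetical 2-d input embeddings and gradient for one trigger position.
toy_embeddings = {"film": [1.0, 0.0], "totally": [0.5, 0.5], "the": [-1.0, 0.2]}
toy_grad = [0.2, 1.0]

# Stand-in for evaluating the label log-likelihood with a real MLM.
def toy_log_likelihood(triggers):
    return {"totally": -0.5, "film": -1.0}.get(triggers[0], -2.0)

candidates = top_k_candidates(toy_embeddings, toy_grad, k=2)
new_triggers = update_trigger(["[MASK]"], 0, candidates, toy_log_likelihood)
print(candidates, new_triggers)
```

The gradient only approximates the effect of a swap, which is why the sketch re-evaluates every shortlisted token before committing to a replacement.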

Label Tokens

For tasks with abstract labels, where no single word obviously stands for a class, AutoPrompt uses a two-step method to identify label tokens. First, a logistic regression model is trained to predict the class label from the MLM's representation of the masked token. Then, for each class, the top-k vocabulary tokens that this classifier scores most highly are chosen as that class's label tokens, which makes the prompt more effective.
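The second step can be sketched as below, assuming the logistic regression from the first step has already been trained. The classifier weights, biases, and 2-d token embeddings are invented for illustration, and scoring each vocabulary token by feeding its embedding to the classifier is one plausible reading of "correlated with the predicted labels", not a confirmed reproduction of the authors' code.

```python
import math


def class_posteriors(vec, weights, biases):
    """Softmax over per-class logits for one token embedding."""
    logits = {
        y: sum(a * b for a, b in zip(vec, w)) + biases[y]
        for y, w in weights.items()
    }
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {y: math.exp(v - top) for y, v in logits.items()}
    total = sum(exps.values())
    return {y: e / total for y, e in exps.items()}


def select_label_tokens(token_embeddings, weights, biases, k):
    """For each class, keep the k tokens the classifier scores highest."""
    posteriors = {
        tok: class_posteriors(vec, weights, biases)
        for tok, vec in token_embeddings.items()
    }
    return {
        y: sorted(posteriors, key=lambda tok: posteriors[tok][y], reverse=True)[:k]
        for y in weights
    }


# Hypothetical trained classifier parameters and token embeddings.
toy_weights = {"positive": [1.0, 0.0], "negative": [-1.0, 0.0]}
toy_biases = {"positive": 0.0, "negative": 0.0}
toy_token_embeddings = {
    "great": [2.0, 0.1],
    "good": [1.0, 0.3],
    "bad": [-1.5, 0.0],
    "awful": [-2.0, 0.2],
    "the": [0.0, 1.0],
}

label_tokens = select_label_tokens(toy_token_embeddings, toy_weights, toy_biases, k=2)
print(label_tokens)
```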

Experimental Evaluation

The efficacy of AutoPrompt was validated across several tasks:

- Sentiment Analysis: The authors tested AutoPrompt on the SST-2 dataset, comparing the method against traditional fine-tuned models and linear probes. The results demonstrated that RoBERTa, with AutoPrompt-generated prompts, achieved up to 91.4% accuracy, outperforming manual prompts and rivaling state-of-the-art fine-tuned models.

- Natural Language Inference (NLI): On the SICK-E dataset, AutoPrompt improved significantly over majority baselines and linear probes, all without fine-tuning the MLM. The results suggest that MLMs possess inherent NLI capabilities that properly constructed prompts can reveal.

- Fact Retrieval: In the LAMA benchmark, AutoPrompt significantly outperformed both the manually created and LPAQA-mined prompts, achieving a precision-at-1 of 43.34% compared to 34.10% by LPAQA on the original test set.

- Relation Extraction: Evaluating the ability of MLMs to extract relations from context sentences, AutoPrompt led to superior performance compared to supervised relation extraction (RE) models. However, perturbation tests revealed that a portion of the results stemmed from the model's prior knowledge rather than the context, indicating reliance on background information.
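The precision-at-1 metric quoted for fact retrieval counts a query as correct only when the model's top-ranked prediction matches the gold answer. A minimal sketch follows; the function name and the example queries are made up and are not drawn from LAMA.

```python
def precision_at_1(ranked_predictions, gold_answers):
    """Fraction of queries whose top-ranked prediction equals the gold answer."""
    if len(ranked_predictions) != len(gold_answers):
        raise ValueError("need one ranked list per gold answer")
    hits = sum(
        1
        for preds, gold in zip(ranked_predictions, gold_answers)
        if preds and preds[0] == gold
    )
    return hits / len(gold_answers)


# Made-up ranked outputs for four cloze queries.
toy_predictions = [
    ["Paris", "Lyon"],
    ["Berlin", "Munich"],
    ["Rome", "Milan"],
    ["Madrid", "Lisbon"],
]
toy_gold = ["Paris", "Berlin", "Milan", "Madrid"]

p_at_1 = precision_at_1(toy_predictions, toy_gold)
print(p_at_1)  # 0.75: three of the four top predictions are correct
```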

Discussion

The paper underscores the latent knowledge present in pretrained MLMs, which can be activated through well-constructed prompts. The authors highlight that AutoPrompt can be advantageous in low-data regimes, where finetuning large models is impractical. The method also offers a storage-efficient solution for deploying models across numerous tasks, as only prompts need to be stored instead of multiple fine-tuned model checkpoints.

Implications for Future Research

AutoPrompt opens up several avenues for future research. The paper's findings invite further examination of the limits of prompt-based probing, for example on tasks like QQP and RTE, where initial tests showed limited success. There is also room to refine AutoPrompt's search method so that it handles highly imbalanced datasets better and produces more interpretable prompts. Applying automatically generated prompts to autoregressive models like GPT-3 could extend the approach to a wider range of applications.

Conclusion

The research presented in this paper is a significant step toward better understanding and using the knowledge embedded in pretrained language models. AutoPrompt proves to be an effective tool for both analyzing and leveraging these models across diverse NLP tasks without extensive model-specific finetuning. The method supports the premise that, as LMs become increasingly sophisticated, automated prompt generation could emerge as a potent alternative to conventional supervised learning approaches.