- The paper shows that, during extended training, last-layer activations collapse toward their class means, a phenomenon the authors call Neural Collapse.

- It combines extensive experiments with theoretical analysis to show that the class means converge to a Simplex Equiangular Tight Frame (ETF), a configuration that is optimal for discrimination.

- The study reports that this geometric alignment is accompanied by better generalization and robustness, and that it can inform improved training methodologies.

Overview of Neural Collapse in Deep Learning Training

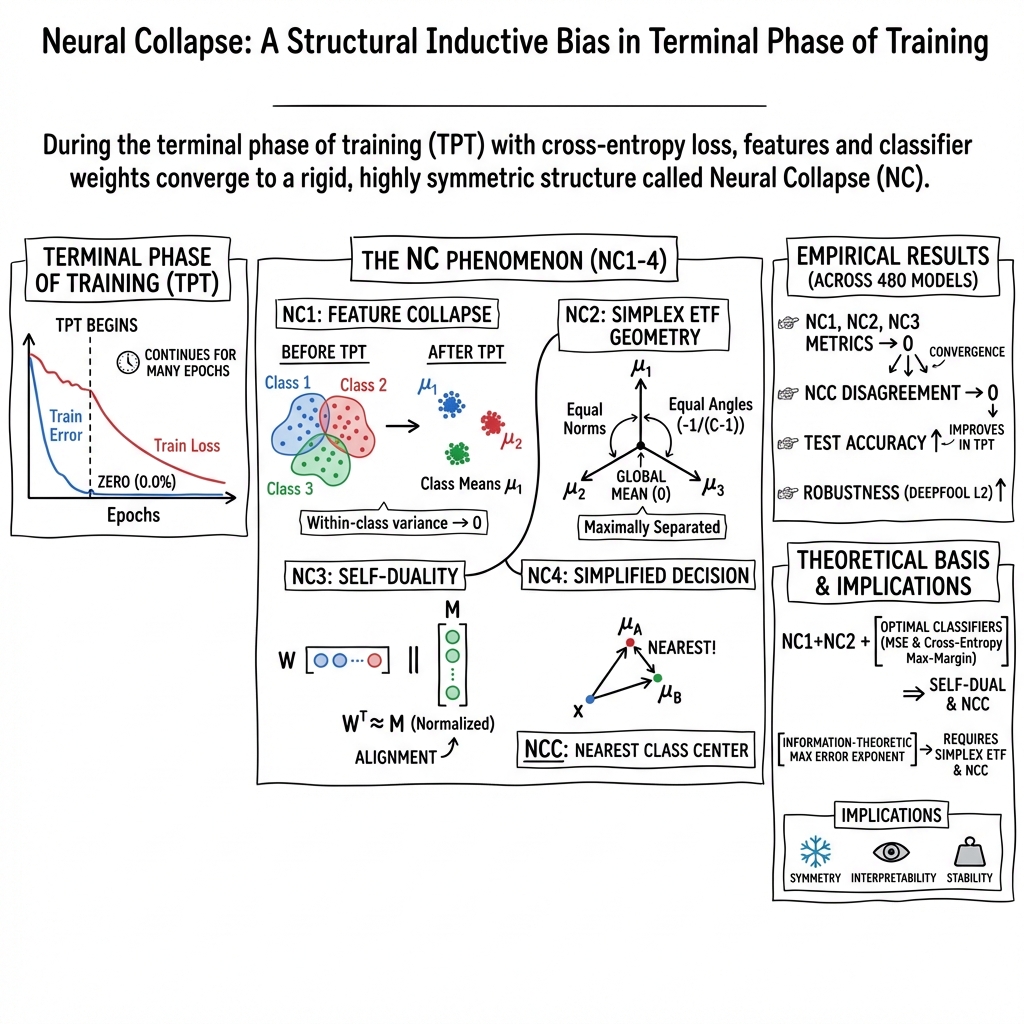

The paper "Prevalence of Neural Collapse during the terminal phase of deep learning training" by Papyan, Han, and Donoho examines the training dynamics of modern deep classification networks during the Terminal Phase of Training (TPT), the stage that begins once the training error first reaches zero and continues while the cross-entropy loss is driven toward zero. The work investigates a phenomenon termed "Neural Collapse," in which specific geometric structures emerge in the network's last layer during this phase.

Key Findings

The research reveals four interconnected aspects of Neural Collapse:

- Variability Collapse (NC1): The within-class variability of last-layer training activations shrinks toward zero, so the activations collapse onto their class means.

- Convergence to Simplex Equiangular Tight Frame (NC2): The class means, centered by their global mean, converge to the vertices of a Simplex ETF. They have equal norms and equal pairwise angles, and those angles are as large as possible (a cosine of -1/(C-1) for C classes), a configuration that is optimal for discrimination.

- Self-Duality (NC3): Up to a scaling factor, the last-layer classifier weights converge to the centered class means, so the classifier and the class means share the same symmetric, simple configuration.

- Nearest Class-Center (NC4): The classifier's decision simplifies to a nearest class-center rule: an activation is assigned to the class whose mean is closest in Euclidean distance.
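The four properties above can each be turned into a number that should approach zero as collapse sets in. The following is a minimal NumPy sketch under stated assumptions, not the authors' measurement code: the function name `neural_collapse_metrics`, the dictionary keys, and the synthetic data are hypothetical, and the particular statistics (a trace ratio for NC1, coefficient of variation and cosine deviation for NC2, a normalized Frobenius distance for NC3, a disagreement rate for NC4) are one plausible choice of diagnostics.

```python
import numpy as np

def neural_collapse_metrics(H, y, W, b):
    """H: (N, d) last-layer features, y: (N,) labels in 0..C-1,
    W: (C, d) classifier weights, b: (C,) classifier biases."""
    C = W.shape[0]
    mu_G = H.mean(axis=0)                                   # global mean
    means = np.stack([H[y == c].mean(axis=0) for c in range(C)])
    M = means - mu_G                                        # centered class means

    # NC1: within-class scatter measured against between-class scatter.
    Hc = H - means[y]
    Sigma_W = Hc.T @ Hc / len(H)
    Sigma_B = M.T @ M / C
    nc1 = np.trace(Sigma_W @ np.linalg.pinv(Sigma_B, rcond=1e-10)) / C

    # NC2: equal norms (coefficient of variation) and equal angles
    # (mean deviation of pairwise cosines from -1/(C-1)).
    norms = np.linalg.norm(M, axis=1)
    equinorm = norms.std() / norms.mean()
    unit = M / norms[:, None]
    cos = unit @ unit.T
    off_diag = cos[~np.eye(C, dtype=bool)]
    equiangular = np.abs(off_diag + 1.0 / (C - 1)).mean()

    # NC3: distance between normalized classifier and normalized class means.
    nc3 = np.linalg.norm(W / np.linalg.norm(W) - M / np.linalg.norm(M)) ** 2

    # NC4: how often the linear classifier disagrees with nearest class-center.
    net_pred = (H @ W.T + b).argmax(axis=1)
    dist2 = ((H[:, None, :] - means[None, :, :]) ** 2).sum(axis=2)
    nc4 = (net_pred != dist2.argmin(axis=1)).mean()

    return {"nc1_variability": nc1, "nc2_equinorm": equinorm,
            "nc2_equiangular": equiangular, "nc3_self_duality": nc3,
            "nc4_ncc_mismatch": nc4}

# Synthetic, nearly collapsed features (an assumption for illustration only).
rng = np.random.default_rng(0)
C, d, n_per_class = 4, 16, 50
P = np.linalg.qr(rng.standard_normal((d, C)))[0]            # orthonormal columns
M_true = (np.eye(C) - 1.0 / C) @ P.T                        # rows form a simplex ETF
y = np.repeat(np.arange(C), n_per_class)
offset = rng.standard_normal(d)                             # arbitrary global mean
H = M_true[y] + offset + 1e-3 * rng.standard_normal((C * n_per_class, d))
W = 3.0 * M_true                                            # classifier = rescaled means
b = -W @ offset
metrics = neural_collapse_metrics(H, y, W, b)
print(metrics)
```

On real networks, `H` would be the penultimate-layer activations of the training set and `W`, `b` the final linear layer, and the metrics would be tracked across epochs rather than computed once.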

Theoretical and Empirical Results

The authors conduct extensive experiments across canonical datasets and architectures, confirming that these phenomena are pervasive. They pair this empirical evidence with a theoretical argument suggesting that these geometric structures arise naturally in deep network training. Using large deviations theory, they show that the Simplex ETF is the configuration that minimizes classification error.
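To make the Simplex ETF concrete, the sketch below builds one and checks its defining properties. It assumes the standard construction in which the C frame vectors are the columns of a scaled, rotated copy of sqrt(C/(C-1)) (I - (1/C) 1 1^T). The function name `simplex_etf` and its parameters are hypothetical, and the random orthonormal embedding used here requires `dim >= num_classes`.

```python
import numpy as np

def simplex_etf(num_classes, dim, scale=1.0, seed=0):
    """Return a (dim, num_classes) matrix whose columns form a Simplex ETF."""
    if dim < num_classes:
        raise ValueError("this construction needs dim >= num_classes")
    C = num_classes
    rng = np.random.default_rng(seed)
    # U has orthonormal columns (U.T @ U = I), i.e. a partial rotation into R^dim.
    U, _ = np.linalg.qr(rng.standard_normal((dim, C)))
    # Centered, rescaled identity: the canonical simplex ETF in R^C.
    M_star = np.sqrt(C / (C - 1)) * (np.eye(C) - np.ones((C, C)) / C)
    return scale * U @ M_star

M = simplex_etf(num_classes=5, dim=8)
gram = M.T @ M                                   # pairwise inner products
off_diag = gram[~np.eye(5, dtype=bool)]

print(np.allclose(np.diag(gram), 1.0))           # equal (unit) norms
print(np.allclose(off_diag, -1.0 / (5 - 1)))     # equal cosines of -1/(C-1)
print(np.allclose(M.sum(axis=1), 0.0))           # vectors are centered
```

The Gram matrix depends only on C and the scale, not on the rotation `U`, which is why the structure is described purely in terms of norms and angles.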

Implications for AI and Deep Learning

The implications of this work are substantial:

- Generalization and Robustness: The paper reports that convergence toward Neural Collapse during TPT coincides with better generalization and greater robustness against adversarial attacks. This aligns with the broader goal of building reliable AI systems.

- Training Methodologies: The findings provide insights into the effects of over-parameterization and prolonged training phases (TPT), suggesting these practices may implicitly guide networks towards optimal geometric structures.

- Tools for Analysis: A clearer geometric interpretation of deep networks lets researchers analyze, and potentially predict, network behavior across various tasks and configurations.

Future Directions

Looking forward, the research opens multiple avenues:

- Dynamics Exploration: Further studies could explore how various network architectures and datasets affect the speed and nature of Neural Collapse.

- Advanced Theories: Connecting Neural Collapse to probabilistic and information-theoretic frameworks could further refine models of neural network behavior.

- Practical Applications: Leveraging Neural Collapse could inspire new design principles for network architecture and training regimes that emphasize geometric simplicity and stability.

This paper provides a rigorous analysis of the structural behavior of deep networks, reaffirming the importance of geometric and statistical methods in understanding and advancing the capabilities of artificial intelligence systems.