- The paper introduces the Slot Attention module, which iteratively refines randomly initialized slots into object-centric representations using an attention mechanism whose softmax is taken over the slots.

- It operates on the output of a CNN backbone and is applied to unsupervised object discovery and supervised set prediction, achieving competitive Adjusted Rand Index (ARI) scores on datasets such as CLEVR and Multi-dSprites.

- In object discovery it matches or outperforms prior methods while being more memory efficient and faster to train, which makes it a promising building block for visual reasoning and multi-object applications.

Object-Centric Learning with Slot Attention

Introduction

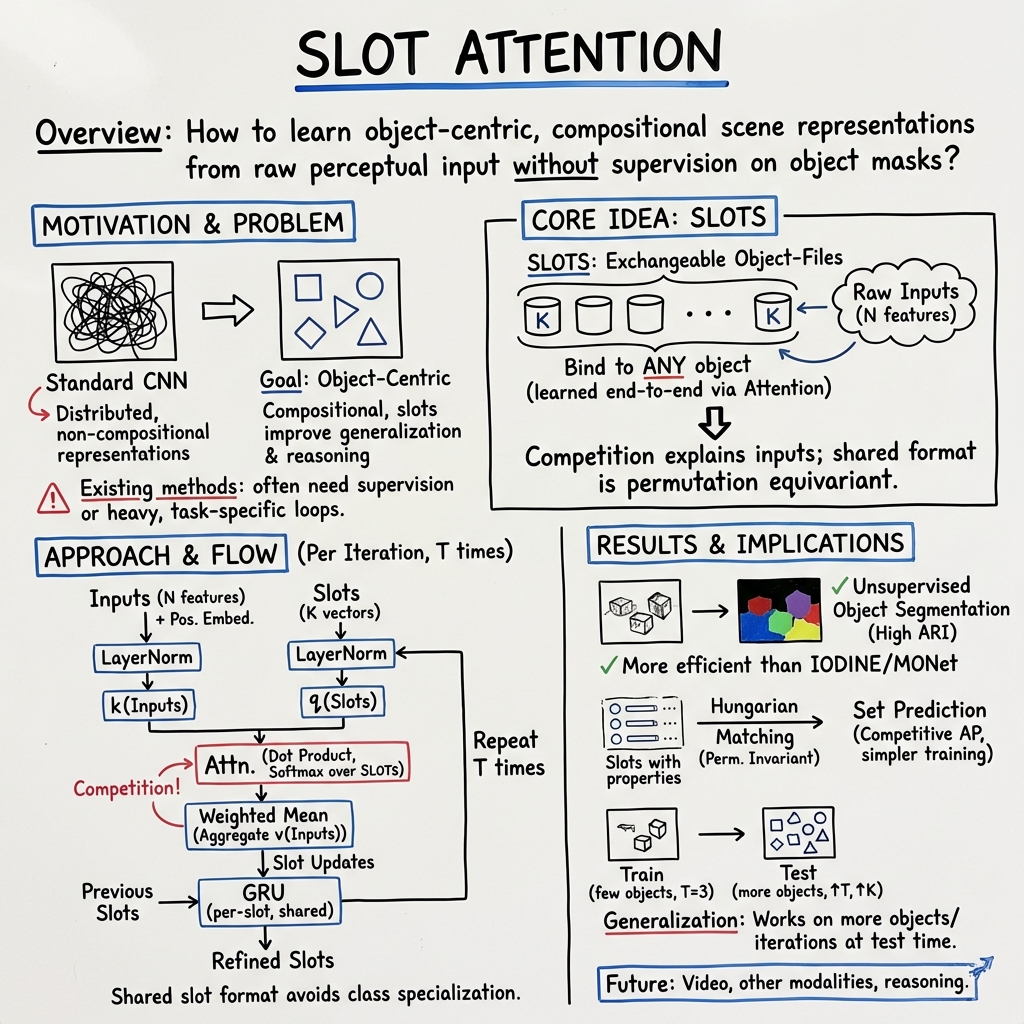

The paper "Object-Centric Learning with Slot Attention" introduces the Slot Attention module, an architectural component for learning object-centric representations from perceptual inputs. The module operates on the output of a convolutional neural network (CNN) and produces a set of task-dependent abstract representations called slots. The slots are exchangeable and can bind to any object in the input through a competitive procedure over multiple rounds of attention. This addresses a common weakness of deep learning models, which often fail to capture the compositional structure of natural scenes.

Slot Attention Module

The Slot Attention mechanism uses an iterative procedure in which slots compete to explain parts of the input features through a softmax-based attention mechanism. The slots are initialized by sampling from a Gaussian distribution whose mean and variance are learned and shared across all slots, and they are refined at each iteration to bind to specific parts of the input. Compared with related approaches such as Capsule Networks or classical clustering algorithms, Slot Attention extracts abstract, object-centric representations that generalize to unseen compositions and to more slots (and objects) at test time than were used during training.
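Because the initial slots are drawn i.i.d. from one shared distribution, no parameter is tied to a particular slot index, so the number of slots is a free choice at inference time. A minimal NumPy sketch of this initialization, assuming the standard deviation is parameterized as a log value for positivity; the function name `init_slots` and the sizes are hypothetical, chosen only for illustration:

```python
import numpy as np

def init_slots(num_slots, mu, log_sigma, rng):
    """Sample initial slots i.i.d. from N(mu, diag(sigma^2)).

    mu, log_sigma: arrays of shape (slot_dim,), shared by every slot,
    so the number of slots is not baked into the parameters.
    """
    noise = rng.standard_normal((num_slots, mu.shape[0]))
    return mu + np.exp(log_sigma) * noise

rng = np.random.default_rng(0)
slot_dim = 64
mu = np.zeros(slot_dim)         # learned in a real model; zeros here
log_sigma = np.zeros(slot_dim)  # learned in a real model; zeros here

train_slots = init_slots(7, mu, log_sigma, rng)   # illustrative training-time count
test_slots = init_slots(11, mu, log_sigma, rng)   # more slots at test time, same parameters
print(train_slots.shape, test_slots.shape)  # (7, 64) (11, 64)
```

The same `mu` and `log_sigma` serve both calls; only `num_slots` changes.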

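The input features are layer-normalized and projected to keys and values once. The algorithm then repeats the following steps for a fixed number of iterations:

- Slots are layer-normalized and projected to queries.

- Attention scores are computed as scaled dot products between keys and queries and normalized with a softmax across slots, so the slots compete for each input feature.

- Slot updates are aggregated as a weighted mean of the values, using the attention coefficients (renormalized over the inputs) as weights.

- Slots are updated with a Gated Recurrent Unit (GRU) that takes the previous slots as its state and the aggregated updates as its input.

- An optional multilayer perceptron (MLP) with layer normalization and a residual connection is applied to the result.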
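The steps above can be sketched in NumPy as a single forward pass. This is a minimal illustration under stated assumptions, not the authors' implementation: weights are random and untrained, layer norm has no learnable gain or bias, the GRU has no biases, the MLP has one ReLU hidden layer, and three iterations are used as a default. The parameter names (`Wk`, `Wq`, `W1`, and so on) are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize over the last (feature) axis; learnable gain and bias omitted.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(x, axis):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_cell(h, x, p):
    # Bias-free GRU: h = previous slots (K, D), x = aggregated updates (K, D).
    z = sigmoid(x @ p["Wz"] + h @ p["Uz"])             # update gate
    r = sigmoid(x @ p["Wr"] + h @ p["Ur"])             # reset gate
    h_new = np.tanh(x @ p["Wh"] + (r * h) @ p["Uh"])   # candidate state
    return (1.0 - z) * h + z * h_new

def slot_attention(inputs, slots, p, num_iters=3, eps=1e-8):
    """inputs: (N, D_in) flattened CNN features; slots: (K, D) initial slots."""
    d = slots.shape[-1]
    inputs = layer_norm(inputs)            # inputs are normalized once
    k = inputs @ p["Wk"]                   # keys   (N, D)
    v = inputs @ p["Wv"]                   # values (N, D)
    for _ in range(num_iters):
        slots_prev = slots
        q = layer_norm(slots) @ p["Wq"]    # queries (K, D)
        logits = (k @ q.T) / np.sqrt(d)    # (N, K)
        attn = softmax(logits, axis=1)     # softmax over SLOTS: slots compete for each input
        w = attn + eps
        w = w / w.sum(axis=0, keepdims=True)  # renormalize over inputs -> weighted mean
        updates = w.T @ v                  # (K, D)
        slots = gru_cell(slots_prev, updates, p)
        hidden = np.maximum(0.0, layer_norm(slots) @ p["W1"])  # ReLU MLP
        slots = slots + hidden @ p["W2"]   # residual connection
    return slots, attn

# Untrained random weights, for shape-checking only.
rng = np.random.default_rng(0)
N, D_in, D, K, H = 16, 32, 64, 4, 128

def init_weight(shape):
    return rng.normal(scale=shape[0] ** -0.5, size=shape)

p = {"Wk": init_weight((D_in, D)), "Wv": init_weight((D_in, D)),
     "Wq": init_weight((D, D)), "W1": init_weight((D, H)), "W2": init_weight((H, D))}
for name in ["Wz", "Uz", "Wr", "Ur", "Wh", "Uh"]:
    p[name] = init_weight((D, D))

inputs = rng.normal(size=(N, D_in))
slots0 = rng.normal(size=(K, D))
slots, attn = slot_attention(inputs, slots0, p)
print(slots.shape, attn.shape)  # (4, 64) (16, 4)
```

The key difference from standard dot-product attention is `axis=1` in the softmax: each input's attention sums to one across slots. With this structure, permuting `slots0` permutes the output slots the same way, and permuting the rows of `inputs` leaves the output unchanged up to floating-point error.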

Methods and Architectural Integration

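The Slot Attention module plugs into both unsupervised object discovery and supervised set prediction. In unsupervised object discovery, the model is an autoencoder: the encoder is a CNN backbone followed by the Slot Attention module, and each slot is decoded separately into an image and an alpha mask. The masks are normalized across slots and used to combine the per-slot images into a single reconstruction, and the model is trained with an image reconstruction loss. In set prediction, the model predicts the properties of the objects in a scene as an unordered set. Slot Attention suits this task because it is permutation-invariant with respect to its inputs and permutation-equivariant with respect to its slots, so it imposes no order on the objects; during training, the predicted slots are matched to the ground-truth objects with the Hungarian algorithm before the loss is computed.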
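In the object-discovery autoencoder, each slot is decoded on its own (in the paper, with a spatial broadcast decoder) into RGB values and an unnormalized alpha mask, and the masks are normalized with a softmax across slots to blend the per-slot images. The sketch below shows only this compositing step, with random arrays standing in for decoder outputs; the function name `composite`, the array layout, and the use of a plain mean squared error are assumptions for illustration.

```python
import numpy as np

def composite(rgb, alpha_logits):
    """Blend per-slot decoder outputs into one image.

    rgb:          (K, H, W, 3) per-slot reconstructions
    alpha_logits: (K, H, W)    per-slot unnormalized mask logits
    """
    shifted = alpha_logits - alpha_logits.max(axis=0, keepdims=True)
    masks = np.exp(shifted)
    masks /= masks.sum(axis=0, keepdims=True)     # softmax over slots: masks sum to 1 per pixel
    image = (masks[..., None] * rgb).sum(axis=0)  # (H, W, 3)
    return image, masks

rng = np.random.default_rng(0)
K, H, W = 4, 8, 8
rgb = rng.uniform(size=(K, H, W, 3))       # stand-in for decoder RGB outputs
alpha_logits = rng.normal(size=(K, H, W))  # stand-in for decoder mask logits
image, masks = composite(rgb, alpha_logits)

target = rng.uniform(size=(H, W, 3))       # stand-in for the input image
loss = np.mean((image - target) ** 2)      # pixel-wise reconstruction error
```

Because the masks compete through the same softmax-over-slots idea as the attention step, a slot that claims a pixel with a large logit effectively owns that pixel in the reconstruction.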

Object Discovery Evaluation

Several experiments were conducted to evaluate the efficacy of Slot Attention in unsupervised object discovery:

- The model was tested on CLEVR (with masks), Multi-dSprites, and Tetrominoes datasets, showcasing its ability to decompose scenes into individual objects.

- Segmentation quality was measured with the Adjusted Rand Index (ARI), computed on foreground pixels only (background excluded), following prior work.

- Results showed that Slot Attention matches or outperforms state-of-the-art methods like IODINE and MONet while being more memory efficient and significantly faster to train.
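ARI compares two partitions of the same pixels and is invariant to how the clusters are labeled, which is why it fits slot-based segmentation, where slot indices are arbitrary. A minimal pure-Python sketch of ARI and its foreground-only variant, assuming ground-truth labels come from object masks with label `0` for background and predicted labels are slot indices (for example, the argmax over slot masks); the function names are hypothetical.

```python
from collections import Counter
from math import comb

def adjusted_rand_index(true_labels, pred_labels):
    """ARI between two clusterings of the same points (labels are arbitrary ids)."""
    n = len(true_labels)
    total = comb(n, 2)
    if total == 0:                 # fewer than two points: nothing to compare
        return 1.0
    pairs = Counter(zip(true_labels, pred_labels))   # contingency table n_ij
    a = Counter(true_labels)                         # row sums
    b = Counter(pred_labels)                         # column sums
    sum_ij = sum(comb(c, 2) for c in pairs.values())
    sum_a = sum(comb(c, 2) for c in a.values())
    sum_b = sum(comb(c, 2) for c in b.values())
    expected = sum_a * sum_b / total
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:      # degenerate case, e.g. both are single clusters
        return 1.0
    return (sum_ij - expected) / (max_index - expected)

def foreground_ari(true_labels, pred_labels, background=0):
    """ARI restricted to pixels whose ground-truth label is not background."""
    keep = [i for i, t in enumerate(true_labels) if t != background]
    return adjusted_rand_index([true_labels[i] for i in keep],
                               [pred_labels[i] for i in keep])

gt = [0, 0, 1, 1, 2, 2, 2]     # 0 = background
pred = [5, 3, 7, 7, 9, 9, 9]   # slot ids; only the grouping matters
print(foreground_ari(gt, pred))  # 1.0
```

Here the two background pixels are assigned to different slots, but FG-ARI ignores them, and the foreground grouping matches the ground truth exactly.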

Set Prediction Evaluation

The Slot Attention module was also evaluated in supervised object property prediction tasks using the CLEVR dataset:

- It performed competitively with Deep Set Prediction Networks (DSPN), the primary baseline, with favorable results across several average precision (AP) metrics. Each AP metric uses a distance threshold: a predicted object counts as correct only if its attributes match the ground truth and its predicted 3D position lies within that distance.

- The model handled different numbers of objects between training and testing, highlighting its ability to generalize.
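Because the slots have no canonical order, a set-prediction loss must first match predictions to targets. The paper uses the Hungarian algorithm for this; the sketch below substitutes an exhaustive search over permutations, which finds the same minimum-cost matching but only scales to a handful of objects. The squared-error cost and the encoding of each object as a list of floats are assumptions for illustration.

```python
from itertools import permutations

def matched_set_loss(preds, targets):
    """Minimum summed squared error over all one-to-one assignments.

    preds, targets: equal-length lists; each item is a list of floats
    (a hypothetical encoding of an object's position and attributes).
    Brute force over K! assignments, so suitable for tiny K only.
    """
    def cost(p, t):
        return sum((a - b) ** 2 for a, b in zip(p, t))

    best = None
    for perm in permutations(range(len(targets))):
        total = sum(cost(preds[i], targets[j]) for i, j in enumerate(perm))
        if best is None or total < best:
            best = total
    return best

targets = [[0.0, 1.0], [2.0, 3.0], [5.0, 5.0]]
preds = [[2.1, 3.0], [5.0, 4.9], [0.0, 1.0]]  # same objects, different order
print(round(matched_set_loss(preds, targets), 4))  # 0.02
```

In practice, `scipy.optimize.linear_sum_assignment` applied to the pairwise cost matrix computes the same optimal matching in polynomial time.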

Implications and Future Work

Slot Attention's ability to produce interpretable, object-centric representations from low-level inputs is valuable for applications that require visual reasoning or multi-object understanding. Future work could extend Slot Attention to modalities beyond vision, such as text and speech, or apply it in dynamic settings such as video analysis. Integrating Slot Attention with other learning objectives, including contrastive or reinforcement learning, is another promising direction for building models capable of complex reasoning and interaction.

Conclusion

Slot Attention is a versatile and efficient module for learning object-centric representations. Its principled iterative attention procedure yields strong quantitative and qualitative results in both unsupervised and supervised tasks, opens up potential applications across different domains, and lays the groundwork for further work on object-centric learning.